Multiscale Autoencoder with Structural-Functional Attention Network for Alzheimer's Disease Prediction

Aug 09, 2022

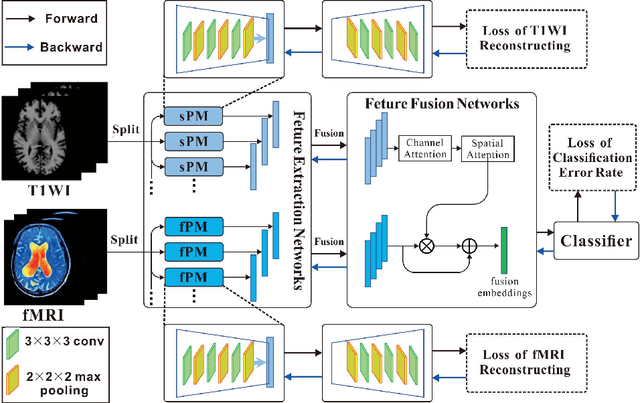

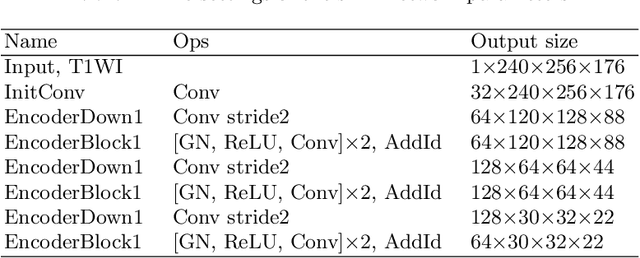

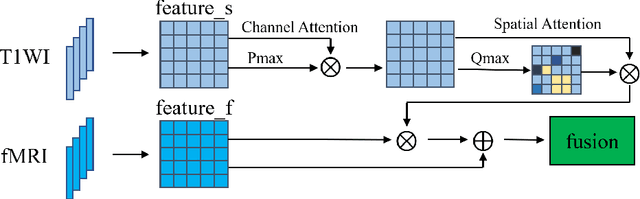

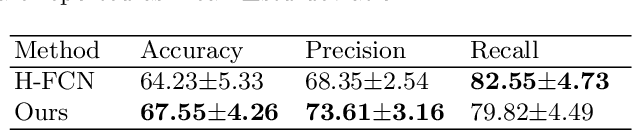

Applying machine learning algorithms to the diagnosis and analysis of Alzheimer's disease (AD) from multimodal neuroimaging data is an active research area. Learning brain-region information and uncovering disease mechanisms from different types of magnetic resonance imaging (MRI) remains a formidable challenge. In this paper, we propose a simple but highly efficient end-to-end model, a multiscale autoencoder with structural-functional attention network (MASAN), that extracts disease-related representations from T1-weighted imaging (T1WI) and functional MRI (fMRI). Using an attention mechanism, the model learns fused features of brain structure and function and is then trained to classify Alzheimer's disease. Compared with a fully convolutional network, the proposed method improves both accuracy and precision by 3% to 5%. Visualizing the extracted embeddings shows that higher weights fall on putative AD-related brain regions (such as the hippocampus and amygdala), regions that are much more informative in anatomical studies. Conversely, the cerebellum, parietal lobe, thalamus, brain stem, and ventral diencephalon contribute little to prediction.
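The abstract does not specify how MASAN's attention fuses the two modalities, so the following is only a toy sketch of the general idea, assuming a simple softmax attention over brain regions. Each region has a structural (T1WI-derived) and a functional (fMRI-derived) feature vector; the two are concatenated, scored against a query vector, and averaged with softmax weights. The names `fuse_regions` and `query`, and all the feature values, are hypothetical and are not the authors' implementation. The returned per-region weights are the kind of quantity one could inspect to see which regions the model emphasizes.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_regions(
    structural: Sequence[Sequence[float]],
    functional: Sequence[Sequence[float]],
    query: Sequence[float],
) -> Tuple[List[float], List[float]]:
    """Hypothetical attention-weighted fusion of per-region features.

    structural[r] and functional[r] are feature vectors for brain region r.
    Each region's vector is their concatenation; its attention score is the
    dot product with `query` (a stand-in for a learned parameter).
    Returns (fused_embedding, region_weights).
    """
    if len(structural) != len(functional):
        raise ValueError("structural and functional must cover the same regions")
    # Concatenate structural and functional features region by region.
    fused = [list(s) + list(f) for s, f in zip(structural, functional)]
    dim = len(fused[0])
    if len(query) != dim:
        raise ValueError("query length must match concatenated feature length")
    # One scalar relevance score per region, normalized across regions.
    scores = [sum(q * x for q, x in zip(query, v)) for v in fused]
    weights = softmax(scores)
    # Weighted sum of region vectors gives a single fused embedding.
    embedding = [sum(w * v[i] for w, v in zip(weights, fused)) for i in range(dim)]
    return embedding, weights


# Toy example with three regions (values are made up for illustration).
structural = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
functional = [[0.2], [0.8], [0.1]]
query = [1.0, 0.0, 1.0]
emb, w = fuse_regions(structural, functional, query)
print(w)  # region 0 has the highest score (1.2), so it receives the largest weight
```

A real model would learn the query (or a small scoring network) jointly with the autoencoder and classifier; the fixed vector here only keeps the sketch self-contained.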