Multiple Kernel-Based Online Federated Learning


Feb 22, 2021

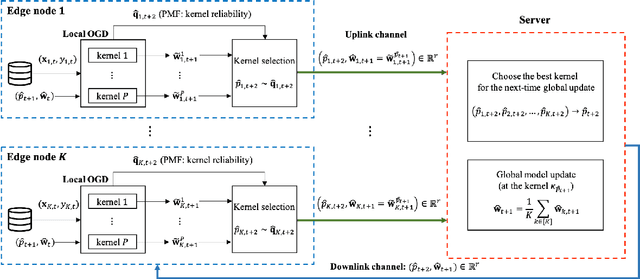

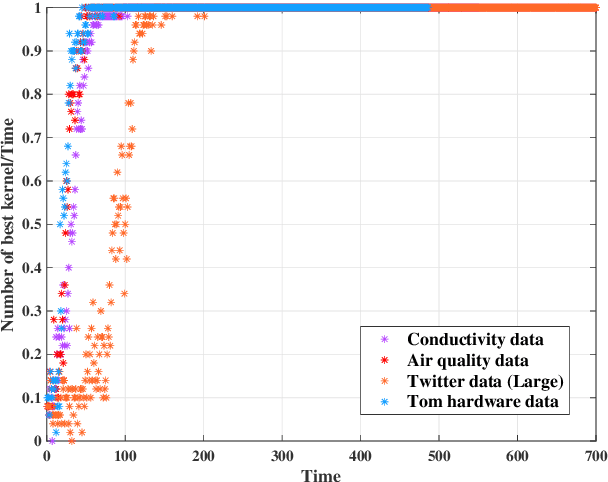

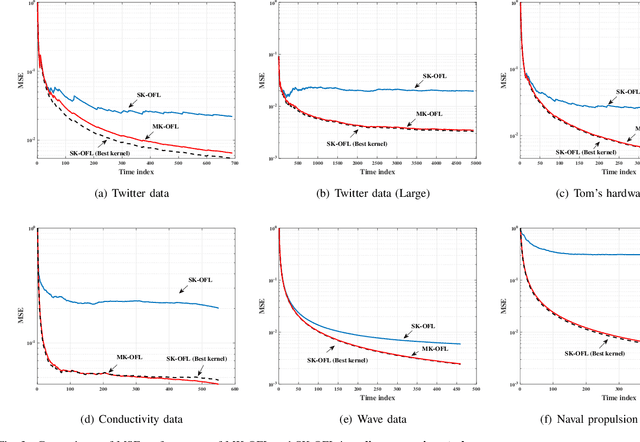

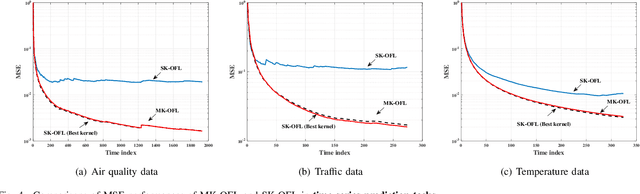

Online federated learning (OFL) has emerged as a learning framework in which edge nodes perform online learning on continuously streaming local data and a server constructs a global model by aggregating the local models. Online multiple kernel learning (OMKL), which uses a preselected set of P kernels, is a good candidate for the OFL framework because it has delivered outstanding performance with low complexity and good scalability. However, a naive extension of OMKL to the OFL framework suffers from a heavy communication overhead that grows linearly with P. In this paper, we propose a novel multiple kernel-based OFL (MK-OFL) as a non-trivial extension of OMKL, which achieves the same performance as the naive extension while reducing the communication overhead by a factor of P. We theoretically prove that MK-OFL achieves the optimal sublinear regret bound when compared with the best function in hindsight. Finally, we present numerical tests of our approach on real-world datasets, which suggest its practicality.
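The OMKL setting and the communication cost of its naive federated extension can be illustrated with a minimal sketch. It assumes Gaussian kernels approximated by random Fourier features, the squared loss, one online gradient step per kernel, and exponential weights across the P kernels. This is one common OMKL design, not necessarily the one used in the paper. The names `RFKernelModel`, `OMKL`, and `naive_ofl_round`, the bandwidths, and all step sizes are hypothetical, and the sketch does not implement MK-OFL itself; it only shows why the naive extension uploads P parameter vectors per client per round.

```python
import math
import random


class RFKernelModel:
    """Linear model on D random Fourier features approximating a Gaussian
    kernel k(x, x') = exp(-||x - x'||^2 / (2 sigma^2)) (assumed kernel family)."""

    def __init__(self, dim, num_features, sigma, rng):
        self.D = num_features
        # Frequencies ~ N(0, sigma^-2 I), phases ~ U[0, 2*pi] (standard random Fourier features).
        self.omegas = [[rng.gauss(0.0, 1.0 / sigma) for _ in range(dim)]
                       for _ in range(num_features)]
        self.phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(num_features)]
        self.theta = [0.0] * num_features

    def features(self, x):
        scale = math.sqrt(2.0 / self.D)
        return [scale * math.cos(sum(wi * xi for wi, xi in zip(w, x)) + b)
                for w, b in zip(self.omegas, self.phases)]

    def predict(self, x):
        return sum(t * z for t, z in zip(self.theta, self.features(x)))

    def gradient_step(self, x, y, lr):
        """One online gradient step on the squared loss; returns the pre-update loss."""
        z = self.features(x)
        err = sum(t * zi for t, zi in zip(self.theta, z)) - y
        self.theta = [t - lr * 2.0 * err * zi for t, zi in zip(self.theta, z)]
        return err * err


class OMKL:
    """Hypothetical OMKL learner: P kernel models combined by exponential weights."""

    def __init__(self, dim, sigmas, num_features, lr, eta, seed=0):
        rng = random.Random(seed)  # same seed => identical random features on every node
        self.models = [RFKernelModel(dim, num_features, s, rng) for s in sigmas]
        self.log_w = [0.0] * len(sigmas)
        self.lr, self.eta = lr, eta

    def kernel_weights(self):
        # Softmax of the log-weights, shifted by the max to avoid underflow.
        top = max(self.log_w)
        raw = [math.exp(lw - top) for lw in self.log_w]
        total = sum(raw)
        return [r / total for r in raw]

    def predict(self, x):
        return sum(w * m.predict(x) for w, m in zip(self.kernel_weights(), self.models))

    def update(self, x, y):
        # Centralized OMKL step: update every kernel model, then reweight the kernels.
        for p, m in enumerate(self.models):
            self.log_w[p] -= self.eta * m.gradient_step(x, y, self.lr)


def naive_ofl_round(global_model, clients, batch):
    """Naive OMKL-to-OFL round (hypothetical): each client uploads all P
    parameter vectors plus P losses; the server averages them per kernel.
    Returns the number of floats uploaded in this round."""
    P = len(global_model.models)
    sum_theta = [[0.0] * m.D for m in global_model.models]
    sum_loss = [0.0] * P
    floats_sent = 0
    for client, (x, y) in zip(clients, batch):
        for p, (gm, cm) in enumerate(zip(global_model.models, client.models)):
            cm.theta = list(gm.theta)  # download the global parameters for kernel p
            sum_loss[p] += cm.gradient_step(x, y, client.lr)
            sum_theta[p] = [s + t for s, t in zip(sum_theta[p], cm.theta)]
            floats_sent += cm.D + 1  # theta_p (D floats) plus the loss for kernel p
    n = len(clients)
    for p, gm in enumerate(global_model.models):
        gm.theta = [s / n for s in sum_theta[p]]
        global_model.log_w[p] -= global_model.eta * sum_loss[p] / n
    return floats_sent


# Usage: P = 3 hypothetical bandwidths, 4 clients, synthetic 2-D regression stream.
sigmas = [0.5, 1.0, 2.0]
global_model = OMKL(dim=2, sigmas=sigmas, num_features=20, lr=0.1, eta=0.5, seed=0)
clients = [OMKL(2, sigmas, 20, 0.1, 0.5, seed=0) for _ in range(4)]
data_rng = random.Random(1)
for _round in range(200):
    batch = []
    for _ in clients:
        x = [data_rng.uniform(-1.0, 1.0), data_rng.uniform(-1.0, 1.0)]
        batch.append((x, math.sin(3.0 * x[0]) + x[1] ** 2))
    sent = naive_ofl_round(global_model, clients, batch)
print(sent)  # 4 clients * 3 kernels * (20 + 1) floats = 252
```

Because each client uploads D + 1 floats per kernel in this sketch, `floats_sent` grows linearly with the number of kernels P: with 6 bandwidths instead of 3 it would double to 504.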
