Multimode Fiber Projector


Jun 29, 2019

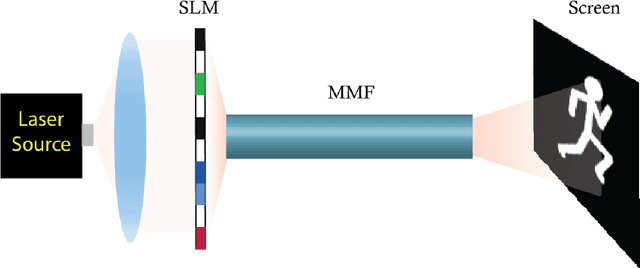

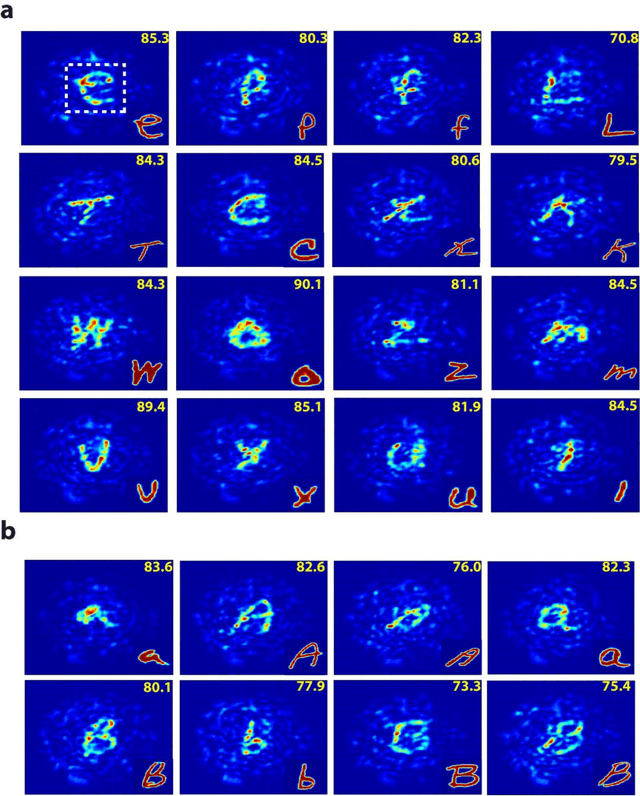

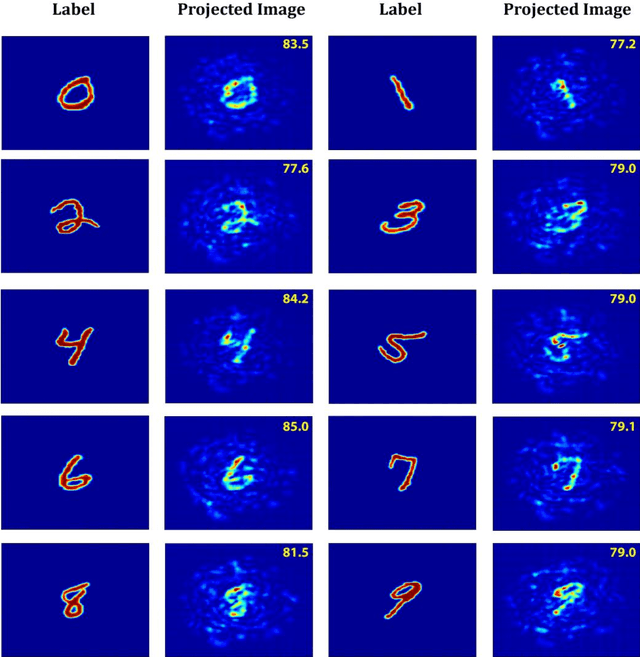

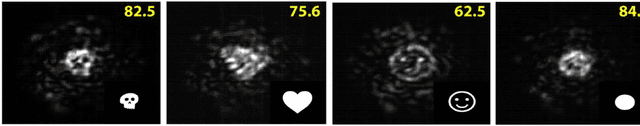

Direct image transmission through multimode fibers (MMFs) is hampered by modal scrambling inside the fiber, which arises from the multimodal nature of the medium. Two kinds of approaches have been proposed and implemented successfully to undo this scrambling: interferometric measurement of a transmission matrix, and iterative feedback-based wavefront shaping that forms an output spot on the camera. The former entails measuring the complex output field (phase and amplitude) with an interferometric system. The latter requires scanning the spot by phase conjugation or iterative techniques to form arbitrary shapes, which increases the computational cost. In this work, we show that neural networks make it possible to project arbitrary shapes through the MMF without measuring the output phase. Specifically, we demonstrate that our projector network produces input patterns that, when sent through the fiber, form arbitrary shapes on the camera with fidelities (correlation) as high as ~90%. We believe this approach opens new paths towards imaging and pattern projection for applications ranging from tissue imaging and surgical ablation to virtual/augmented reality.
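As a rough illustration of the fidelity figure quoted above, the sketch below computes a correlation between a target image and a camera image. The abstract does not say exactly how the correlation is computed, so the Pearson form used here is an assumption. The random complex matrix standing in for the fiber, and the names `correlation_fidelity` and `fiber_intensity`, are likewise hypothetical; this is not the authors' projector network, only a toy model of intensity-only detection after modal scrambling.

```python
import numpy as np

def correlation_fidelity(target, output):
    """Pearson correlation between two intensity images of equal shape.

    Assumed metric: the abstract only says "fidelities (correlation)".
    """
    t = np.asarray(target, dtype=float).ravel()
    o = np.asarray(output, dtype=float).ravel()
    t = t - t.mean()
    o = o - o.mean()
    denom = np.linalg.norm(t) * np.linalg.norm(o)
    if denom == 0.0:
        raise ValueError("correlation is undefined for a constant image")
    return float(np.dot(t, o) / denom)

def fiber_intensity(transmission_matrix, input_field):
    """Intensity a camera would record, |T x|^2; the output phase is discarded."""
    return np.abs(transmission_matrix @ input_field) ** 2

# Toy stand-in for the fiber: a random complex matrix (an assumption, not measured data).
rng = np.random.default_rng(0)
n_in, n_out = 64, 256
T = (rng.normal(size=(n_out, n_in))
     + 1j * rng.normal(size=(n_out, n_in))) / np.sqrt(2 * n_in)

# A phase-only input pattern with random phases gives a speckle-like output.
x = np.exp(1j * rng.uniform(0.0, 2.0 * np.pi, size=n_in))
speckle = fiber_intensity(T, x)

# Hypothetical target: a bright band in the middle of the output pixels.
target = np.zeros(n_out)
target[96:160] = 1.0

fidelity = correlation_fidelity(target, speckle)
print(f"fidelity of an unoptimized input: {fidelity:.3f}")
```

An unoptimized input should score far below the ~90% reported for the projector network. In this toy picture, the network's role is to choose `x` so that the recorded intensity correlates strongly with the target, using intensity measurements alone.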
