Multimodal Interaction with Multiple Co-located Drones in Search and Rescue Missions

Paper and Code

May 24, 2016

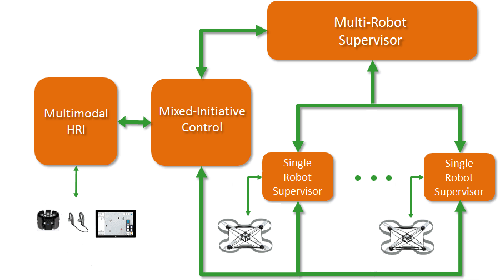

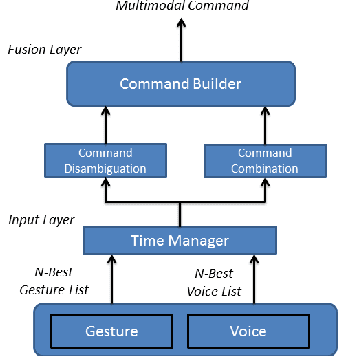

We present a multimodal interaction framework for a human rescuer who operates in proximity to a set of co-located drones during search missions. This work is framed in the context of the SHERPA project, whose goal is to develop a mixed ground and aerial robotic platform to support search and rescue activities in a real-world alpine scenario. Unlike typical human-drone interaction settings, here the operator is not fully dedicated to the drones but is also involved in search and rescue tasks, and is hence able to provide only sparse, incomplete, albeit high-value, instructions to the robots. This operational scenario requires a human-robot interaction framework that supports multimodal communication along with effective and natural mixed-initiative interaction between the human and the robots. In this work, we illustrate the domain and the proposed multimodal interaction framework, and discuss the system at work in a simulated case study.
