Multimodal Fusion Strategies for Mapping Biophysical Landscape Features


Oct 07, 2024

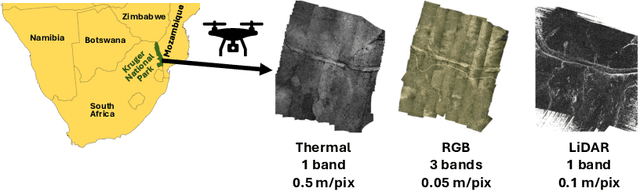

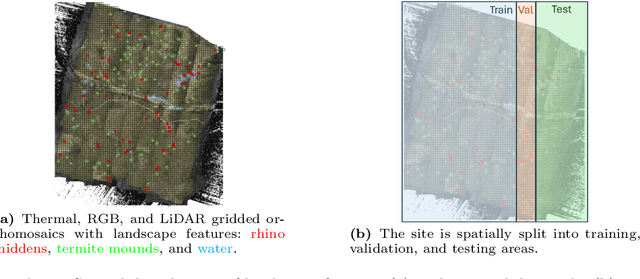

Multimodal aerial data are used to monitor natural systems, and machine learning can significantly accelerate the classification of landscape features within such imagery to benefit ecology and conservation. It remains under-explored, however, how these multiple modalities ought to be fused in a deep learning model. As a step towards filling this gap, we study three strategies (Early fusion, Late fusion, and Mixture of Experts) for fusing thermal, RGB, and LiDAR imagery using a dataset of spatially aligned orthomosaics in these three modalities. In particular, we aim to map three ecologically relevant biophysical landscape features in African savanna ecosystems: rhino middens, termite mounds, and water. The three fusion strategies differ in whether the modalities are fused early or late and, if late, whether the model learns fixed weights per modality for each class or generates weights for each class adaptively, based on the input. Overall, the three methods achieve similar macro-averaged performance, with Late fusion achieving an AUC of 0.698, but their per-class performance varies strongly: Early fusion achieves the best recall for middens and water, and Mixture of Experts achieves the best recall for mounds.
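To make the distinction between the three strategies concrete, the following is a minimal NumPy sketch, not the authors' implementation. The channel counts, tile size, the `toy_encoder` stand-in for a CNN, the choice to fuse at the logit level, and the gating network's input are all assumptions made for illustration; the weights are random here, whereas in a real model they would be learned.

```python
import numpy as np

rng = np.random.default_rng(0)

N_CLASSES = 3                                     # middens, mounds, water
CHANNELS = {"thermal": 1, "rgb": 3, "lidar": 1}   # assumed channel counts
N_MODALITIES = len(CHANNELS)


def softmax(x, axis):
    """Numerically stable softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def toy_encoder(n_in, n_out):
    """Hypothetical stand-in for a CNN: global average pool + random linear layer."""
    w = rng.normal(size=(n_in, n_out))
    return lambda x: x.mean(axis=(2, 3)) @ w      # (B, C, H, W) -> (B, n_out)


# A batch of 4 spatially aligned 8x8 tiles per modality (random placeholder data).
tiles = {m: rng.normal(size=(4, c, 8, 8)) for m, c in CHANNELS.items()}
stacked = np.concatenate([tiles[m] for m in CHANNELS], axis=1)   # (4, 5, 8, 8)

# Early fusion: stack all modalities as channels and feed a single network.
early_net = toy_encoder(stacked.shape[1], N_CLASSES)
early_logits = early_net(stacked)                                # (4, 3)

# Late fusion: one network per modality, combined with fixed weights
# per modality and class (the same weights for every input).
experts = {m: toy_encoder(c, N_CLASSES) for m, c in CHANNELS.items()}
expert_logits = np.stack([experts[m](tiles[m]) for m in CHANNELS], axis=1)  # (4, 3, 3)
fixed_w = softmax(rng.normal(size=(N_MODALITIES, N_CLASSES)), axis=0)       # (M, K)
late_logits = (fixed_w[None] * expert_logits).sum(axis=1)                   # (4, 3)

# Mixture of Experts: a gating network produces per-class modality weights
# for each input, so the weighting adapts to the tile being classified.
gate = toy_encoder(stacked.shape[1], N_MODALITIES * N_CLASSES)
gate_w = softmax(gate(stacked).reshape(-1, N_MODALITIES, N_CLASSES), axis=1)  # (4, M, K)
moe_logits = (gate_w * expert_logits).sum(axis=1)                             # (4, 3)
```

In this sketch the only difference between Late fusion and Mixture of Experts is the shape of the weight tensor: `fixed_w` has no batch dimension, while `gate_w` has one set of weights per input tile.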
