Multi-view PointNet for 3D Scene Understanding

Paper and Code

Sep 30, 2019

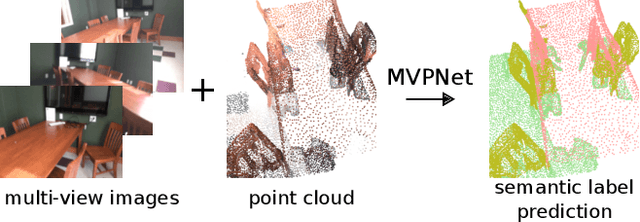

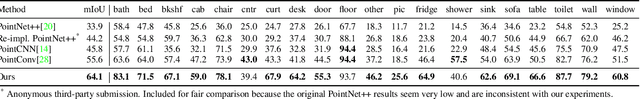

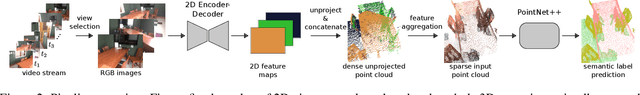

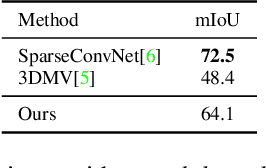

Fusion of 2D images and 3D point clouds is important because information from dense images can enhance sparse point clouds. However, fusion is challenging because 2D and 3D data live in different spaces. In this work, we propose MVPNet (Multi-View PointNet), which aggregates 2D multi-view image features into 3D point clouds and then uses a point-based network to fuse the features in 3D canonical space and predict 3D semantic labels. To this end, we introduce view selection along with a 2D-3D feature aggregation module. Extensive experiments show the benefit of leveraging features from dense images and reveal superior robustness to varying point cloud density compared to 3D-only methods. On the ScanNetV2 benchmark, MVPNet significantly outperforms prior point-cloud-based approaches on the task of 3D semantic segmentation. It is also much faster to train than the large networks used by sparse-voxel approaches. We provide thorough ablation studies to ease the future design of 2D-3D fusion methods and their extension to other tasks, as we showcase for 3D instance segmentation.
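To make the idea of aggregating 2D image features into a 3D point cloud concrete, the following is a minimal NumPy sketch, not the MVPNet implementation. It assumes a pinhole camera with known intrinsics and pose, lifts each pixel with valid depth into world space, and gives each 3D point the plain average of the features of its k nearest lifted pixels. The function names `unproject_depth` and `aggregate_to_points`, the brute-force neighbor search, and the simple averaging are all illustrative assumptions; the abstract does not specify how the aggregation module combines neighbors.

```python
import numpy as np


def unproject_depth(depth, intrinsics, cam_to_world):
    """Lift every pixel with valid (positive) depth to a 3D world-space point.

    depth:        (H, W) depth map
    intrinsics:   (3, 3) pinhole camera matrix (assumed model)
    cam_to_world: (4, 4) camera pose
    Returns the (M, 3) world points and the (H, W) boolean validity mask.
    """
    h, w = depth.shape
    v, u = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    valid = depth > 0
    z = depth[valid]
    fx, fy = intrinsics[0, 0], intrinsics[1, 1]
    cx, cy = intrinsics[0, 2], intrinsics[1, 2]
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    cam_pts = np.stack([x, y, z, np.ones_like(z)], axis=1)  # (M, 4) homogeneous
    world = (cam_to_world @ cam_pts.T).T[:, :3]
    return world, valid


def aggregate_to_points(points, lifted_xyz, lifted_feats, k=3):
    """Average the features of the k nearest lifted pixels for each 3D point.

    points:       (N, 3) target point cloud
    lifted_xyz:   (M, 3) unprojected pixel positions
    lifted_feats: (M, C) 2D features of those pixels
    Brute-force O(N * M) search; a KD-tree would be used at scene scale.
    """
    d2 = ((points[:, None, :] - lifted_xyz[None, :, :]) ** 2).sum(-1)  # (N, M)
    idx = np.argsort(d2, axis=1)[:, :k]  # (N, k) nearest pixel indices
    return lifted_feats[idx].mean(axis=1)  # (N, C)
```

In use, the per-pixel feature map of each selected view would be masked with `valid`, the lifted points and features from all views concatenated, and the aggregated features passed along with the point coordinates to a point-based network.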
