Multi-Type Activity Recognition in Robot-Centric Scenarios

Paper and Code

Apr 12, 2016

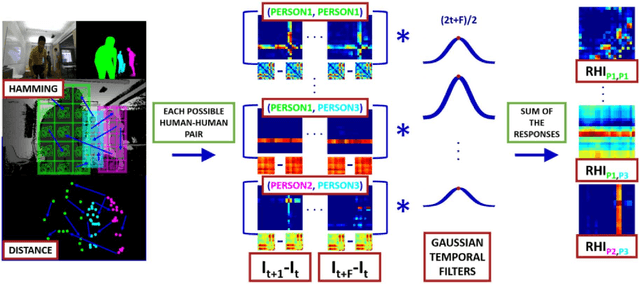

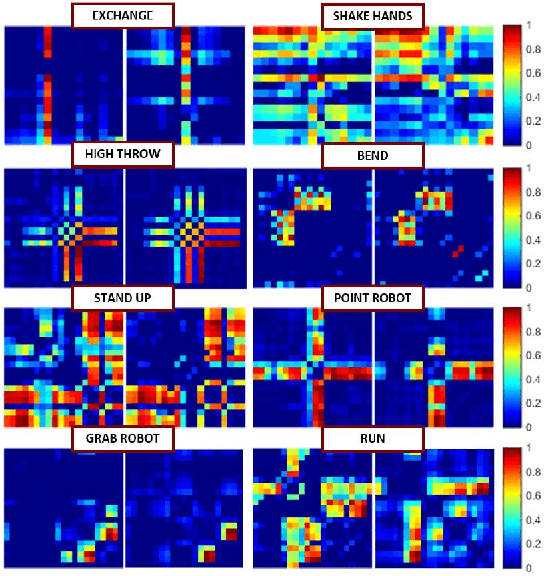

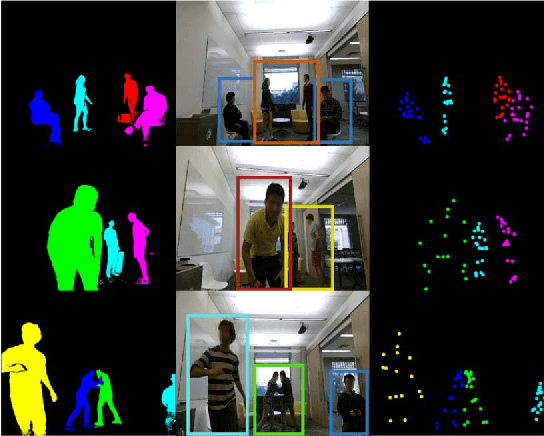

Activity recognition is very useful in scenarios where robots interact with, monitor, or assist humans. In recent years, many types of activities (single actions, two-person interactions, and ego-centric activities, to name a few) have been analyzed. Whereas traditional methods treat these activity types separately, an autonomous robot should be able to detect and recognize multiple types of activities to fulfill its tasks effectively. We propose a method that is intrinsically able to detect and recognize activities of different types that happen in sequence or concurrently. We present a new unified descriptor, called Relation History Image (RHI), which can be extracted from all the activity types we are interested in. We then formulate an optimization procedure to detect and recognize activities of different types. We apply our approach to a new dataset recorded from a robot-centric perspective and systematically evaluate its quality against multiple baselines. Finally, we show the efficacy of the RHI descriptor on publicly available datasets through extensive comparisons.
