Multi-task twin support vector machine with Universum data

Paper and Code

Jun 22, 2022

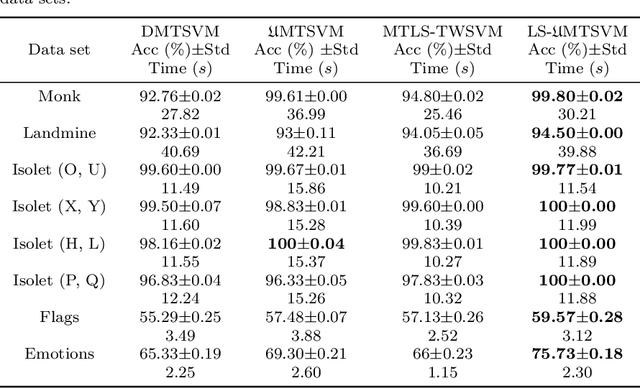

Multi-task learning (MTL) has emerged as a promising topic of machine learning in recent years, aiming to enhance the performance of numerous related learning tasks by exploiting beneficial information. During the training phase, most of the existing multi-task learning models concentrate entirely on the target task data and ignore the non-target task data contained in the target tasks. To address this issue, Universum data, that do not correspond to any class of a classification problem, may be used as prior knowledge in the training model. This study looks at the challenge of multi-task learning using Universum data to employ non-target task data, which leads to better performance. It proposes a multi-task twin support vector machine with Universum data (UMTSVM) and provides two approaches to its solution. The first approach takes into account the dual formulation of UMTSVM and tries to solve a quadratic programming problem. The second approach formulates a least-squares version of UMTSVM and refers to it as LS-UMTSVM to further increase the generalization performance. The solution of the two primal problems in LS-UMTSVM is simplified to solving just two systems of linear equations, resulting in an incredibly simple and quick approach. Numerical experiments on several popular multi-task data sets and medical data sets demonstrate the efficiency of the proposed methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge