Multi-Task Transformer with uncertainty modelling for Face Based Affective Computing

Paper and Code

Aug 06, 2022

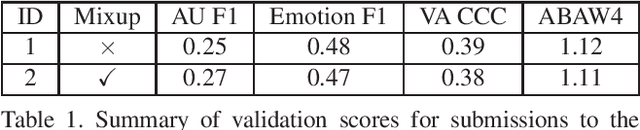

Face based affective computing consists in detecting emotions from face images. It is useful to unlock better automatic comprehension of human behaviours and could pave the way toward improved human-machines interactions. However it comes with the challenging task of designing a computational representation of emotions. So far, emotions have been represented either continuously in the 2D Valence/Arousal space or in a discrete manner with Ekman's 7 basic emotions. Alternatively, Ekman's Facial Action Unit (AU) system have also been used to caracterize emotions using a codebook of unitary muscular activations. ABAW3 and ABAW4 Multi-Task Challenges are the first work to provide a large scale database annotated with those three types of labels. In this paper we present a transformer based multi-task method for jointly learning to predict valence arousal, action units and basic emotions. From an architectural standpoint our method uses a taskwise token approach to efficiently model the similarities between the tasks. From a learning point of view we use an uncertainty weighted loss for modelling the difference of stochasticity between the three tasks annotations.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge