Multi-Task Learning with Incomplete Data for Healthcare

Paper and Code

Jul 06, 2018

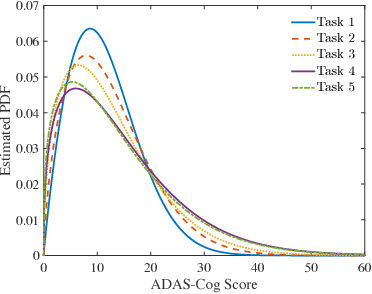

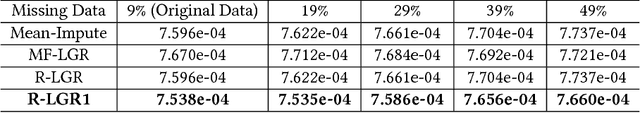

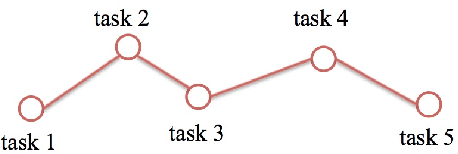

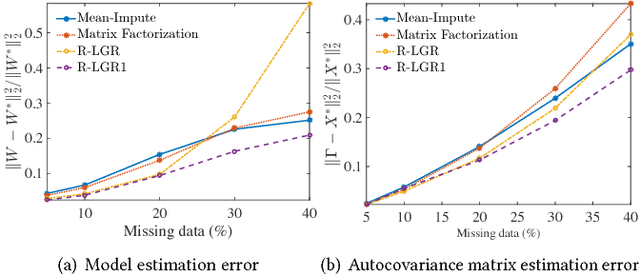

Multi-task learning is a type of transfer learning that trains multiple tasks simultaneously and leverages the shared information between related tasks to improve the generalization performance. However, missing features in the input matrix is a much more difficult problem which needs to be carefully addressed. Removing records with missing values can significantly reduce the sample size, which is impractical for datasets with large percentage of missing values. Popular imputation methods often distort the covariance structure of the data, which causes inaccurate inference. In this paper we propose using plug-in covariance matrix estimators to tackle the challenge of missing features. Specifically, we analyze the plug-in estimators under the framework of robust multi-task learning with LASSO and graph regularization, which captures the relatedness between tasks via graph regularization. We use the Alzheimer's disease progression dataset as an example to show how the proposed framework is effective for prediction and model estimation when missing data is present.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge