Multi-Robot Formation Control Using Reinforcement Learning

Jan 13, 2020

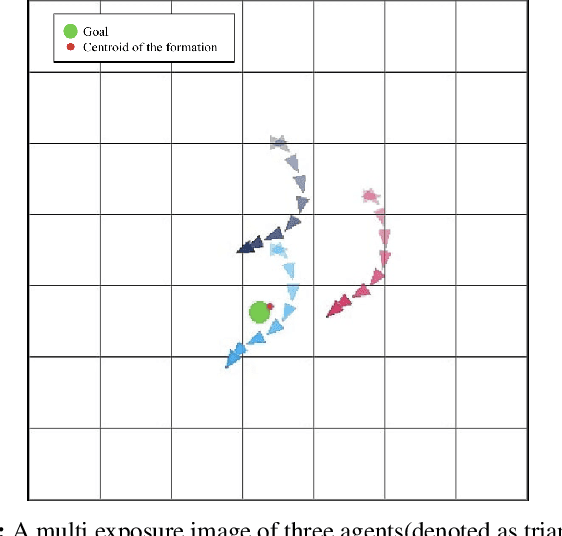

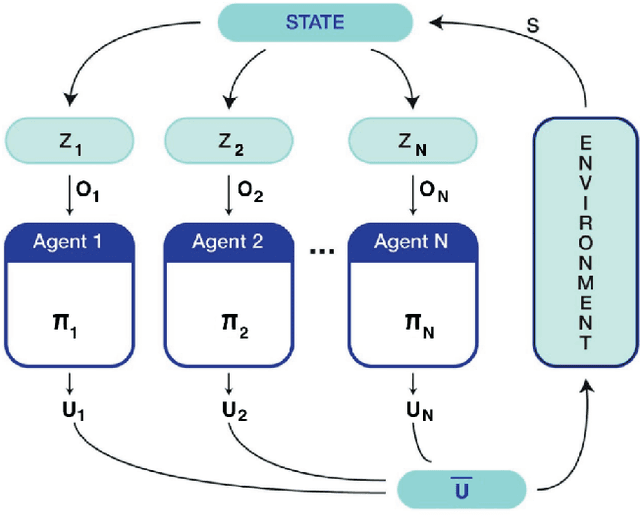

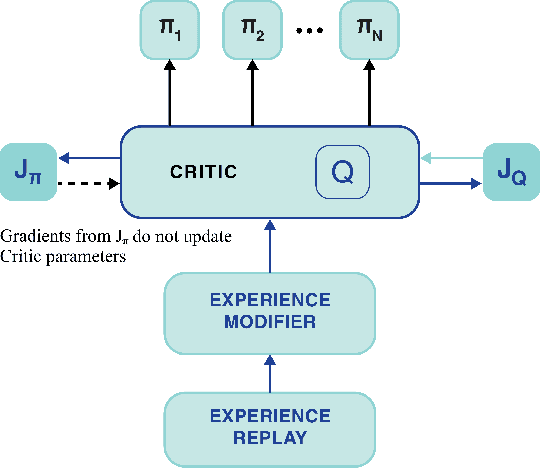

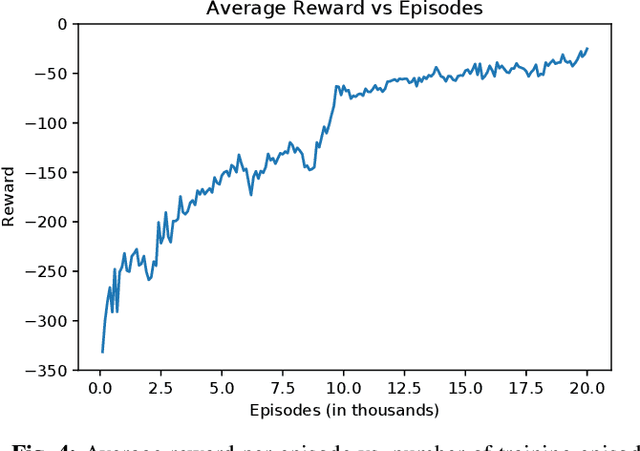

In this paper, we present a machine learning approach for moving a group of robots in formation. We model the task as a multi-agent reinforcement learning problem. Our aim is to design a control policy that maintains a desired formation among a number of agents (robots) while they move towards a desired goal. This is achieved by training each agent to track two other agents of the group and to maintain the formation with respect to those agents. We consider all agents to be homogeneous and model them as unicycles [1]. In contrast to the leader-follower approach, where each agent has an independent goal, our approach trains the agents to cooperate and work towards a common goal. Our motivation for using this method is to build a fully decentralized multi-agent formation system that scales with the number of agents.
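To make the unicycle model and the idea of tracking two agents concrete, here is a minimal Python sketch. It is not the paper's implementation: the function names, the Euler integration step, and the distance-based formation error are all assumptions chosen for illustration, since the abstract does not specify the observation or reward design.

```python
import math

def unicycle_step(state, v, omega, dt):
    """Advance a unicycle by one Euler step (illustrative discretization).

    state = (x, y, theta); v is linear speed, omega is angular speed.
    Standard unicycle kinematics: x' = v cos(theta), y' = v sin(theta),
    theta' = omega.
    """
    x, y, theta = state
    x += v * math.cos(theta) * dt
    y += v * math.sin(theta) * dt
    # Wrap the heading into [-pi, pi) so it stays bounded.
    theta = (theta + omega * dt + math.pi) % (2 * math.pi) - math.pi
    return (x, y, theta)

def formation_error(agent_xy, ref_a_xy, ref_b_xy, d_a, d_b):
    """Hypothetical formation error with respect to two tracked agents.

    Returns the signed differences between the actual distances to the
    two reference agents and the desired distances d_a and d_b.
    """
    dist_a = math.hypot(agent_xy[0] - ref_a_xy[0], agent_xy[1] - ref_a_xy[1])
    dist_b = math.hypot(agent_xy[0] - ref_b_xy[0], agent_xy[1] - ref_b_xy[1])
    return (dist_a - d_a, dist_b - d_b)
```

In a reinforcement learning setup, a quantity like `formation_error` could feed into each agent's observation or reward, alongside a term for progress towards the goal. That pairing is likewise an assumption here, not something the abstract states.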
