Multi-Instance Training for Question Answering Across Table and Linked Text


Dec 14, 2021

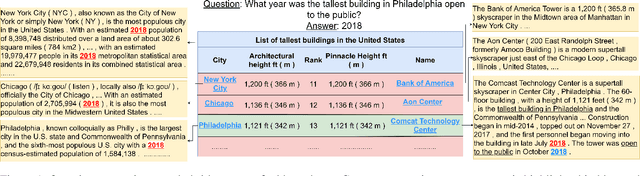

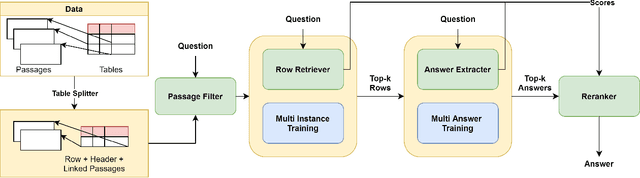

Answering natural language questions using information from tables (TableQA) is of considerable recent interest. In many applications, tables occur not in isolation but embedded in, or linked to, unstructured text. Often, a question is best answered by matching its parts to either table cell contents or unstructured text spans, and extracting answers from either source. This leads to a new space of TextTableQA problems, introduced by the HybridQA dataset. Existing adaptations of table representations to transformer-based reading comprehension (RC) architectures fail to handle the diverse modalities of the two representations within a single system. Training such systems is further challenged by the need for distant supervision. To reduce the cognitive burden on annotators, training instances usually include just the question and the answer, and the answer string may match multiple table rows and text passages. This leads to a noisy multi-instance training regime involving not only rows of the table, but also spans of linked text. We respond to these challenges by proposing MITQA, a new TextTableQA system that explicitly models the different but closely related probability spaces of table row selection and text span selection. Our experiments show that our approach outperforms recent baselines. The proposed method is currently at the top of the HybridQA leaderboard, which uses a held-out test set, achieving a 21% absolute improvement in both EM and F1 scores over previously published results.
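To make the distant-supervision problem concrete, the following is a minimal sketch of how a single answer string can match several table rows and linked passages, so that the true supporting evidence is ambiguous. It is not MITQA's implementation: the `Candidate` type, the `find_candidates` function, the plain substring matching, and the toy table are all assumptions made for illustration only.

```python
from typing import Dict, List, NamedTuple


class Candidate(NamedTuple):
    row: int      # index of the table row the match belongs to
    source: str   # "cell" or "passage"
    start: int    # character offset of the match within its text


def find_candidates(
    rows: List[List[str]],
    linked_text: Dict[int, List[str]],
    answer: str,
) -> List[Candidate]:
    """Collect every place the answer string occurs (hypothetical helper).

    rows: table rows, each a list of cell strings.
    linked_text: maps a row index to the passages linked from that row.
    """
    needle = answer.lower()
    found: List[Candidate] = []
    for i, cells in enumerate(rows):
        # Matches inside table cells (first occurrence per cell).
        for cell in cells:
            pos = cell.lower().find(needle)
            if pos != -1:
                found.append(Candidate(i, "cell", pos))
        # Matches inside passages linked to this row (all occurrences).
        for passage in linked_text.get(i, []):
            text = passage.lower()
            pos = text.find(needle)
            while pos != -1:
                found.append(Candidate(i, "passage", pos))
                pos = text.find(needle, pos + 1)
    return found


# Toy data, invented for this example.
rows = [["Alice Smith", "2008"], ["Bob Jones", "2012"]]
linked_text = {
    0: ["Alice Smith won gold in 2008 in Beijing."],
    1: ["Bob Jones debuted in 2008 and won in 2012."],
}
candidates = find_candidates(rows, linked_text, "2008")
for c in candidates:
    print(c)
```

Here the answer "2008" matches a cell and a passage for the first row and a passage for the second row. Only the question and answer are given, so a training procedure has to decide which of these instances actually supports the answer; that ambiguity is the noise the abstract refers to.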
