MSP: Multi-Stage Prompting for Making Pre-trained Language Models Better Translators


Oct 13, 2021

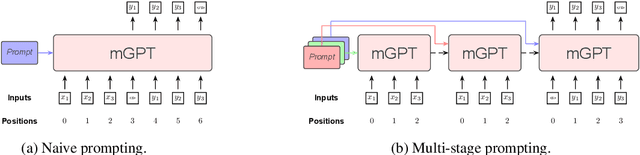

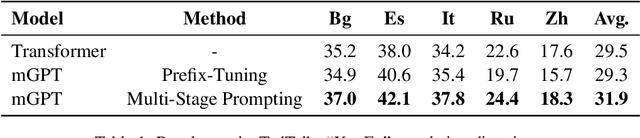

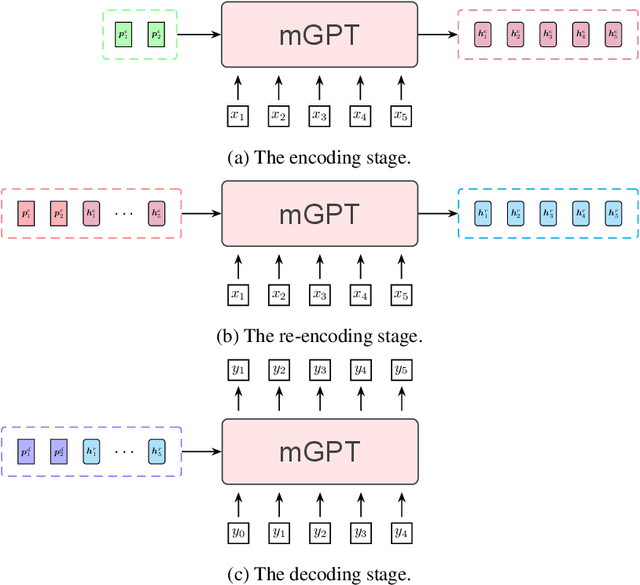

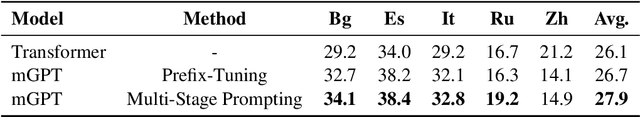

Pre-trained language models have recently been shown to perform translation without finetuning via prompting. Inspired by these findings, we study how to improve the performance of pre-trained language models on translation tasks, where training neural machine translation models is the current de facto approach. We present Multi-Stage Prompting (MSP), a simple and lightweight approach for better adapting pre-trained language models to translation tasks. To make pre-trained language models better translators, we divide the translation process into three separate stages: the encoding stage, the re-encoding stage, and the decoding stage. During each stage, we independently apply a different continuous prompt so that the pre-trained language model can better adapt to translation tasks. We conduct extensive experiments on low-, medium-, and high-resource translation tasks. Experiments show that our method can significantly improve the translation performance of pre-trained language models.
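The three-stage flow described in the abstract can be illustrated with a toy sketch. This is not the authors' implementation: the abstract does not say how the continuous prompts are injected or how stages pass information to each other. The sketch assumes that each stage prepends its own prompt vectors to the input of a frozen model, and that each stage conditions on the states produced by the previous one. `frozen_lm`, `make_prompt`, `run_stage`, and `translate_states` are hypothetical names, and the "model" is a running average standing in for a real pre-trained language model.

```python
import random

DIM = 4  # toy hidden size (assumption, for illustration only)


def frozen_lm(vectors):
    """Toy stand-in for a frozen pre-trained LM.

    Maps a sequence of vectors to a same-length sequence of "hidden states";
    each state is the running mean of all vectors seen so far, which mimics
    left-to-right (causal) conditioning. It has no trainable parameters.
    """
    states = []
    running = [0.0] * DIM
    for i, vec in enumerate(vectors, start=1):
        running = [r + x for r, x in zip(running, vec)]
        states.append([r / i for r in running])
    return states


def make_prompt(length, seed):
    """Create a continuous prompt: `length` vectors of size DIM.

    In a real setup these would be the only trained parameters; here they
    are just fixed pseudo-random values.
    """
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(DIM)] for _ in range(length)]


# One independent prompt per stage, as the abstract describes.
PROMPTS = {
    "encode": make_prompt(2, seed=0),
    "re_encode": make_prompt(2, seed=1),
    "decode": make_prompt(2, seed=2),
}


def run_stage(stage, context, inputs):
    """Run the frozen model on [stage prompt] + [context] + [inputs].

    Returns only the states aligned with `inputs`.
    """
    prompt = PROMPTS[stage]
    states = frozen_lm(prompt + context + inputs)
    return states[len(prompt) + len(context):]


def translate_states(source, target_prefix):
    """Chain the three stages: encode, re-encode, decode."""
    # Stage 1: encode the source sentence under the encoding prompt.
    encoded = run_stage("encode", [], source)
    # Stage 2: re-encode the source, now conditioned on the stage-1 states.
    re_encoded = run_stage("re_encode", encoded, source)
    # Stage 3: process the target prefix conditioned on the re-encoded source.
    return run_stage("decode", re_encoded, target_prefix)
```

Calling `translate_states(source, target_prefix)` with lists of `DIM`-sized vectors returns one state per target-prefix position. The point of the sketch is the structure only: the model stays frozen, and the three prompts in `PROMPTS` are separate, so each stage can be adapted independently.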
