MS-RNN: A Flexible Multi-Scale Framework for Spatiotemporal Predictive Learning


Jun 07, 2022

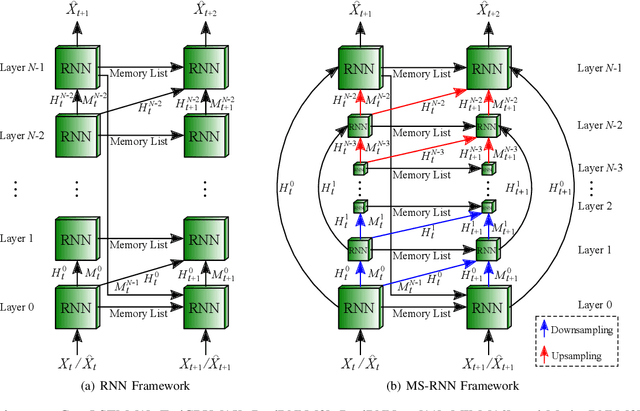

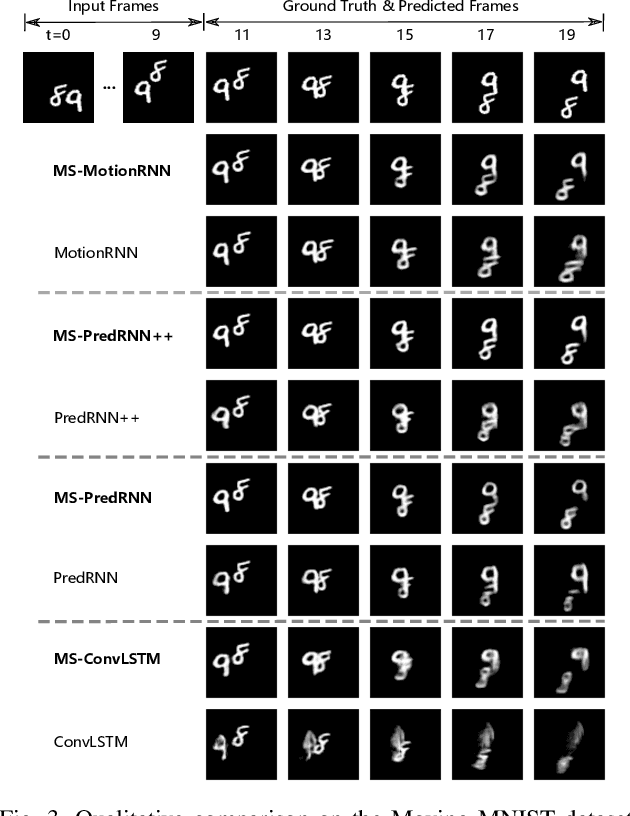

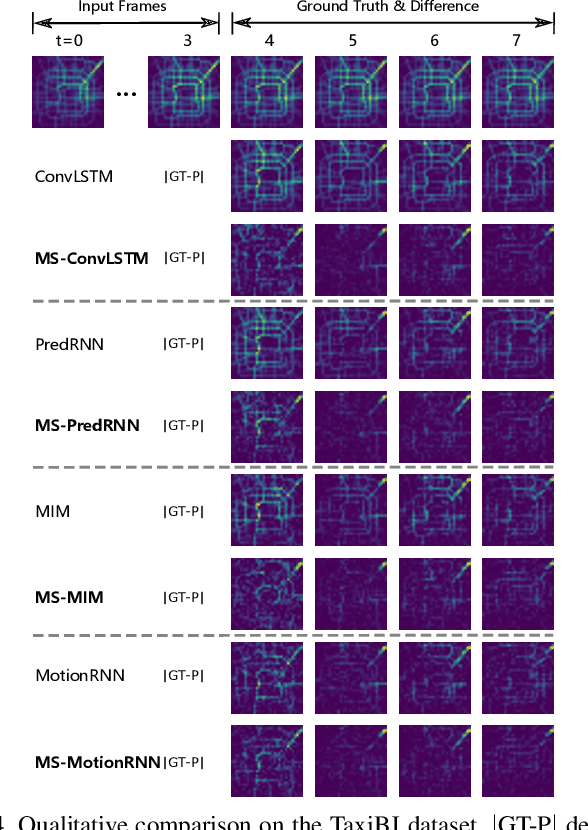

Spatiotemporal predictive learning aims to predict future frame changes from historical prior knowledge. Previous work improves prediction performance by making networks wider and deeper, but this also brings a large memory overhead, which seriously hinders the development and application of the technology. Scale is another dimension along which model performance can be improved in common computer vision tasks: multi-scale processing can reduce computing requirements and provide a better sense of context. This important direction has not been considered or explored by recent RNN models. In this paper, drawing on the benefits of multi-scale design, we propose a general framework named Multi-Scale RNN (MS-RNN) to boost recent RNN models. We verify the MS-RNN framework through exhaustive experiments on four datasets (Moving MNIST, KTH, TaxiBJ, and HKO-7) and multiple popular RNN models (ConvLSTM, TrajGRU, PredRNN, PredRNN++, MIM, and MotionRNN). The results show that RNN models incorporating our framework have much lower memory cost yet better performance than before. Our code is released at https://github.com/mazhf/MS-RNN.
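To give a rough sense of why working at multiple scales can lower memory cost, the following sketch counts hidden-state elements per time step for a stack of recurrent layers. It is only an illustration under stated assumptions: the function name `activation_elements`, the 6-layer stack, the 64x64 feature maps with 64 channels, and the downsample-then-upsample scale schedule are all hypothetical and are not taken from the paper or its released code.

```python
def activation_elements(height, width, channels, scales):
    """Count hidden-state elements for one time step across stacked layers.

    `scales` lists the spatial downsampling factor assumed at each layer
    (1 = full resolution, 2 = half height and half width, and so on).
    """
    return sum(channels * (height // s) * (width // s) for s in scales)


# Hypothetical 6-layer stack where every layer keeps full resolution.
single_scale = activation_elements(64, 64, 64, [1, 1, 1, 1, 1, 1])

# Assumed multi-scale schedule: downsample toward the middle, then upsample.
multi_scale = activation_elements(64, 64, 64, [1, 2, 4, 4, 2, 1])

ratio = multi_scale / single_scale
print(single_scale, multi_scale, ratio)
```

Under these assumed settings the multi-scale stack holds fewer than half as many hidden-state elements as the single-scale one, because halving the height and width of a feature map quarters its element count. Actual savings depend on the real architecture and on everything else stored during training.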
