MPLR: a novel model for multi-target learning of logical rules for knowledge graph reasoning

Dec 12, 2021

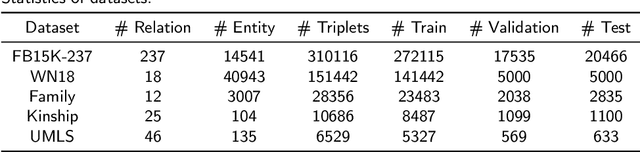

Large-scale knowledge graphs (KGs) provide structured representations of human knowledge. However, because no KG can contain all knowledge, KGs are usually incomplete. Reasoning over existing facts offers a way to discover missing ones. In this paper, we study the problem of learning logic rules for reasoning on knowledge graphs in order to complete missing factual triplets. Learning logic rules gives a model strong interpretability as well as the ability to generalize to similar tasks. We propose a model called MPLR that improves on existing models by making full use of the training data and by taking multi-target scenarios into account. In addition, to address deficiencies in how model performance and the quality of mined rules are evaluated, we propose two novel indicators. Experimental results demonstrate that MPLR outperforms state-of-the-art methods on five benchmark datasets. The results also confirm the effectiveness of the proposed indicators.
