Monte Carlo Rollout Policy for Recommendation Systems with Dynamic User Behavior

Paper and Code

Feb 08, 2021

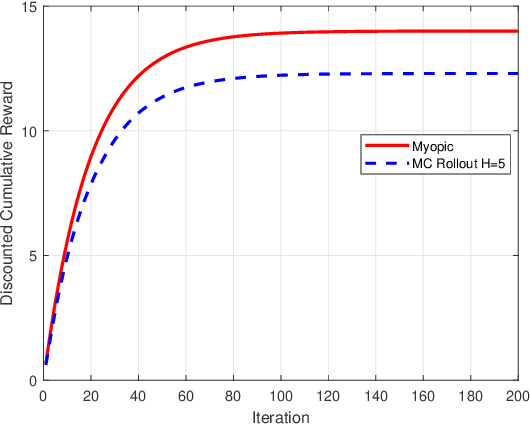

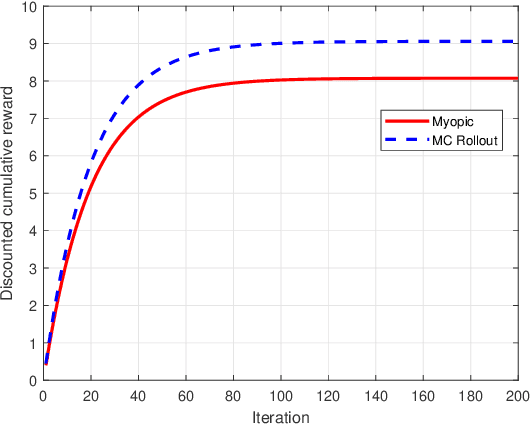

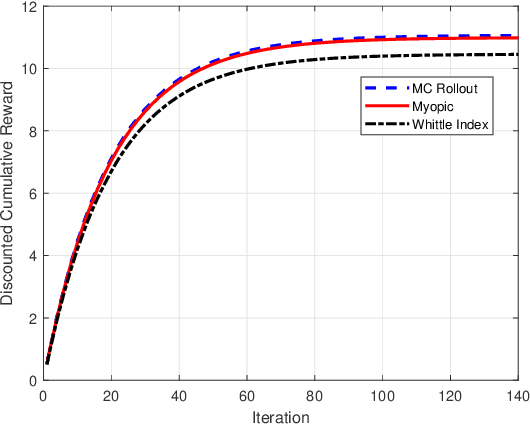

We model online recommendation systems as a hidden Markov multi-state restless multi-armed bandit problem, and we present a Monte Carlo rollout policy to solve it. Numerical experiments show that the Monte Carlo rollout policy outperforms the myopic policy when the transition dynamics are arbitrary, with no specific structure. When some structure is imposed on the transition dynamics, however, the myopic policy outperforms the Monte Carlo rollout policy.

* 5 pages, 4 figures, COMSNETS 2021 conference
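
To make the comparison between the two policies concrete, here is a minimal Python sketch of a myopic policy and a Monte Carlo rollout policy on a belief-state restless bandit. It is an illustration under stated assumptions, not the paper's implementation: each arm (item) has a hidden state with transition matrix `Ps[a]`, playing an arm yields a binary click with probability `rhos[a][s]` in state `s`, the reward is the click itself, rollouts use the myopic policy as the base policy, and all function names and the parameters `horizon`, `n_traj`, and `beta` are hypothetical.

```python
import random

def propagate(belief, P):
    """One-step prediction: new_belief[j] = sum_i belief[i] * P[i][j]."""
    n = len(belief)
    return [sum(belief[i] * P[i][j] for i in range(n)) for j in range(n)]

def bayes_update(belief, rho, clicked):
    """Condition the belief on a binary observation; rho[s] = P(click | state s)."""
    like = [r if clicked else 1.0 - r for r in rho]
    post = [b * l for b, l in zip(belief, like)]
    total = sum(post)
    return [p / total for p in post] if total > 0 else list(belief)

def expected_reward(belief, rho):
    """Expected immediate reward (click probability) under the current belief."""
    return sum(b * r for b, r in zip(belief, rho))

def myopic_action(beliefs, rhos):
    """Myopic policy: play the arm with the highest expected immediate reward."""
    return max(range(len(beliefs)),
               key=lambda a: expected_reward(beliefs[a], rhos[a]))

def step_beliefs(beliefs, Ps, rhos, action, clicked):
    """Played arm: Bayes update, then transition. Other arms: transition only."""
    new = []
    for a, b in enumerate(beliefs):
        if a == action:
            b = bayes_update(b, rhos[a], clicked)
        new.append(propagate(b, Ps[a]))
    return new

def rollout_action(beliefs, Ps, rhos, horizon=5, n_traj=50, beta=0.9, rng=random):
    """Monte Carlo rollout (assumed form): for each candidate first arm, simulate
    n_traj trajectories of length `horizon` in belief space, following the myopic
    base policy after the first step, and average the discounted rewards."""
    n_arms = len(beliefs)
    values = []
    for first in range(n_arms):
        total = 0.0
        for _ in range(n_traj):
            sim = [list(b) for b in beliefs]
            action, discount = first, 1.0
            for _ in range(horizon):
                # Sample a click from the belief-predicted click probability.
                clicked = rng.random() < expected_reward(sim[action], rhos[action])
                total += discount * (1.0 if clicked else 0.0)
                sim = step_beliefs(sim, Ps, rhos, action, clicked)
                action = myopic_action(sim, rhos)
                discount *= beta
        values.append(total / n_traj)
    best = max(range(n_arms), key=lambda a: values[a])
    return best, values
```

Calling `rollout_action(beliefs, Ps, rhos)` returns the chosen arm together with the estimated value of each candidate first arm, while `myopic_action(beliefs, rhos)` looks only at the immediate reward. The rollout estimate costs roughly `n_arms * n_traj * horizon` belief updates per decision, which is the price paid for looking ahead.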
