Modularized Networks for Few-shot Hateful Meme Detection


Feb 19, 2024

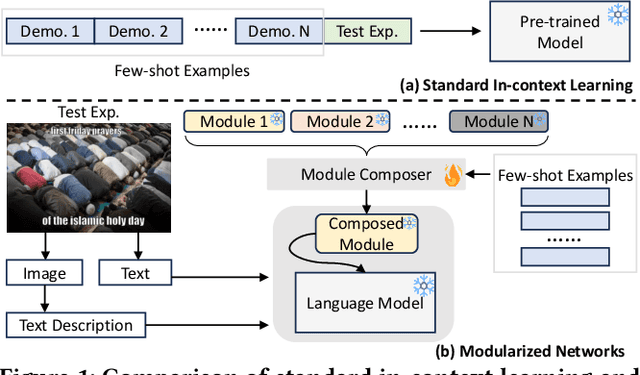

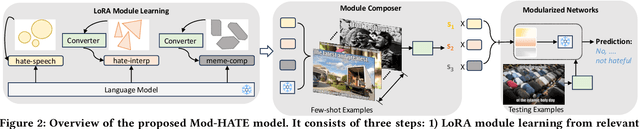

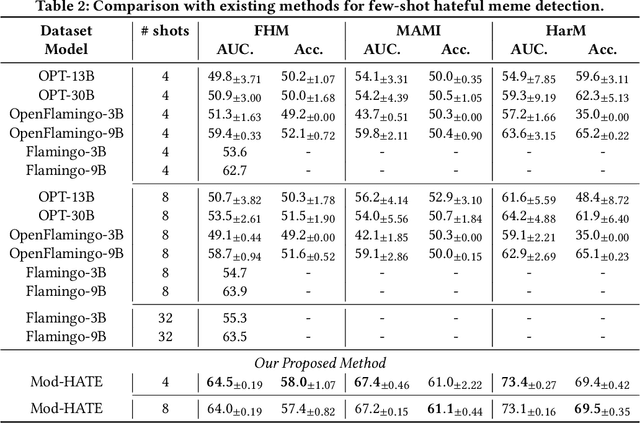

In this paper, we address the challenge of detecting hateful memes in the low-resource setting where only a few labeled examples are available. Our approach leverages the compositionality of low-rank adaptation (LoRA), a widely used parameter-efficient tuning technique. We begin by fine-tuning large language models (LLMs) with LoRA on selected tasks pertinent to hateful meme detection, thereby generating a suite of LoRA modules. These modules provide the essential reasoning skills needed for hateful meme detection. We then use the few available annotated samples to train a module composer, which assigns weights to the LoRA modules based on their relevance. The number of learnable parameters in the model is directly proportional to the number of LoRA modules. This modularized network, underpinned by LLMs and augmented with LoRA modules, exhibits enhanced generalization in the context of hateful meme detection. Our evaluation spans three datasets designed for hateful meme detection in a few-shot learning context. The proposed method outperforms traditional in-context learning, which is also more computationally intensive during inference.
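The module composition described in the abstract can be illustrated with a small NumPy sketch. This is not the paper's implementation: it assumes that each LoRA module contributes a low-rank update `B @ A` to a frozen weight matrix and that the composer combines these updates as a weighted sum with one scalar weight per module. The names `compose_lora`, `modules`, and `composer_weights`, as well as all dimensions and values, are hypothetical.

```python
import numpy as np

def compose_lora(modules, weights):
    """Return the combined update sum_i w_i * (B_i @ A_i).

    Assumption: modules are merged by a weighted sum of their
    low-rank updates; the paper may compose them differently.
    """
    if len(modules) != len(weights):
        raise ValueError("need exactly one weight per LoRA module")
    return sum(w * (B @ A) for (A, B), w in zip(modules, weights))

rng = np.random.default_rng(0)
d_out, d_in, rank, n_modules = 8, 6, 2, 3  # illustrative sizes only

# Hypothetical pre-trained LoRA modules: A is (rank, d_in), B is (d_out, rank).
modules = [
    (rng.normal(size=(rank, d_in)), rng.normal(size=(d_out, rank)))
    for _ in range(n_modules)
]

# In this sketch the composer's only learnable parameters are these scalars,
# so the parameter count grows linearly with the number of modules.
composer_weights = np.array([0.5, 0.3, 0.2])

W_frozen = rng.normal(size=(d_out, d_in))  # stands in for a frozen LLM weight
W_adapted = W_frozen + compose_lora(modules, composer_weights)
```

Under these assumptions, few-shot training would update only `composer_weights` while the base weights and the LoRA modules stay frozen, which is consistent with the abstract's statement that the learnable parameters scale with the number of modules.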
