Modelling nonlinear dependencies in the latent space of inverse scattering

Paper and Code

Mar 19, 2022

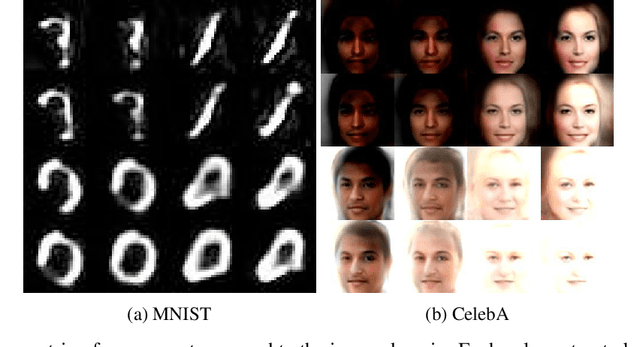

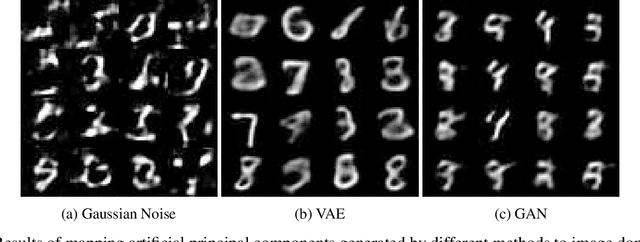

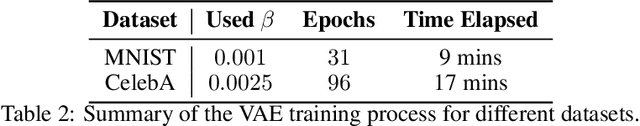

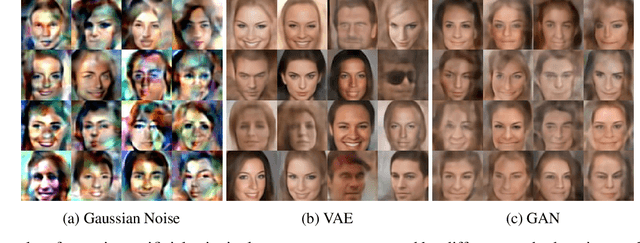

The problem of inverse scattering, proposed by Angles and Mallat in 2018, concerns training a deep neural network to invert the scattering transform applied to an image. Once trained, such a network can be used as a generative model, provided that we can sample from the distribution of principal components of the scattering coefficients. For this purpose, Angles and Mallat simply use samples from independent Gaussians. However, as shown in this paper, the distribution of interest can be very far from normal, and non-negligible dependencies may exist between different coefficients. This motivates modelling the distribution in a way that allows for nonlinear dependencies between variables. Two such models are explored in this paper: a Variational AutoEncoder and a Generative Adversarial Network. We demonstrate that the results obtained can be extremely realistic on some datasets and look better than those produced by Angles and Mallat. The conducted meta-analysis also shows that generative models constructed in this way have a clear practical advantage over existing generative models for images in terms of training efficiency.
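The baseline sampling step described in the abstract (independent Gaussians over principal components) can be sketched as follows. This is a minimal illustration, not the authors' code: the function names `fit_pca` and `sample_independent_gaussians` are hypothetical, the feature matrix is synthetic random data standing in for real scattering coefficients, and the trained inverting network that would map samples back to images is omitted.

```python
import numpy as np


def fit_pca(features, n_components):
    """Fit PCA on a (n_samples, n_features) array.

    Returns the feature mean, the top principal axes (one per row),
    and the standard deviation of the data along each axis.
    """
    mean = features.mean(axis=0)
    centered = features - mean
    # Rows of vt are the principal axes, ordered by decreasing variance.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    axes = vt[:n_components]
    std = singular_values[:n_components] / np.sqrt(len(features) - 1)
    return mean, axes, std


def sample_independent_gaussians(mean, axes, std, n_samples, rng):
    """Draw each principal component independently from N(0, std^2),
    then map the draws back to the original feature space."""
    z = rng.standard_normal((n_samples, len(std))) * std
    return mean + z @ axes


rng = np.random.default_rng(0)
# Assumption: synthetic, deliberately non-Gaussian stand-in for
# scattering coefficients (500 samples, 16 coefficients each).
features = rng.standard_normal((500, 16)) ** 3

mean, axes, std = fit_pca(features, n_components=4)
samples = sample_independent_gaussians(mean, axes, std, n_samples=10, rng=rng)
print(samples.shape)  # (10, 16)
```

Sampling this way reproduces only the mean and per-component variance of the data; any non-normal marginals or nonlinear dependencies between components are lost, which is the limitation the abstract points to as motivation for the VAE and GAN models.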
