Modeling sequential annotations for sequence labeling with crowds


Sep 20, 2022

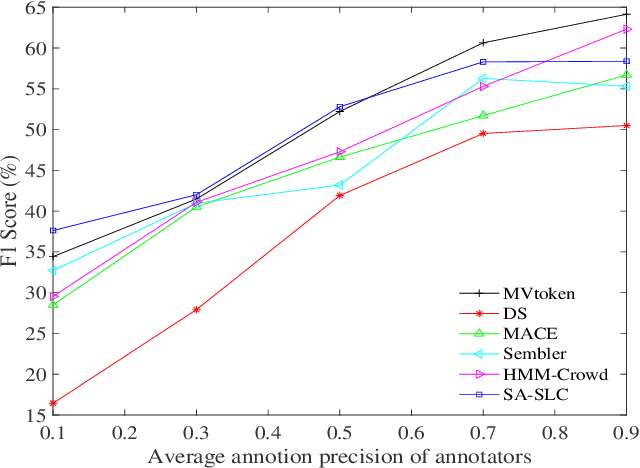

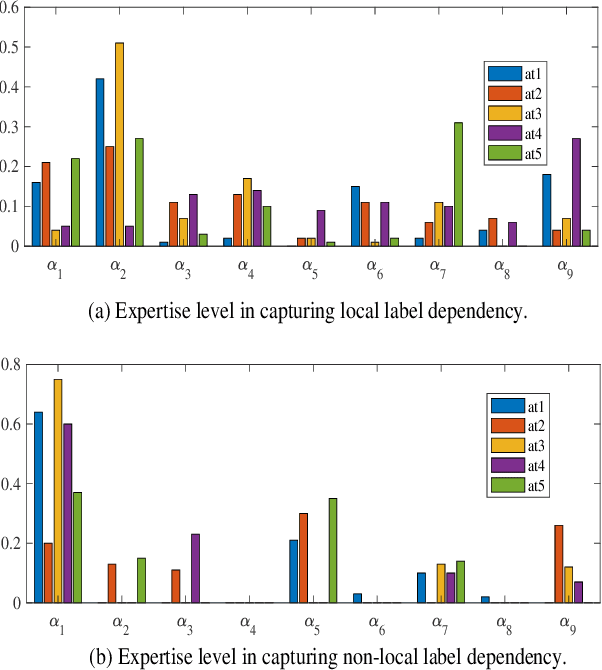

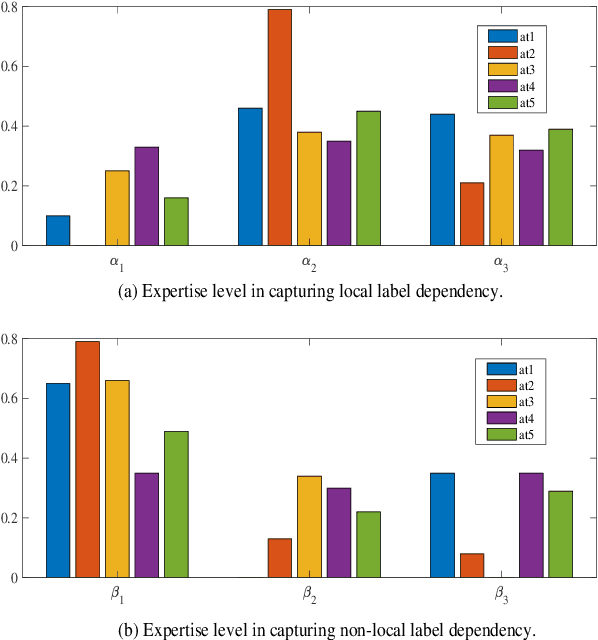

Crowd sequential annotations can be an efficient and cost-effective way to build large datasets for sequence labeling. Unlike tagging independent instances, the quality of a crowd-annotated label sequence depends on each annotator's expertise in capturing the internal dependencies among the tokens in the sequence. In this paper, we propose Modeling sequential annotation for sequence labeling with crowds (SA-SLC). First, a conditional probabilistic model is developed to jointly model sequential data and annotators' expertise, in which a categorical distribution is introduced to estimate the reliability of each annotator in capturing local and non-local label dependencies for sequential annotation. To accelerate the marginalization of the proposed model, a valid label sequence inference (VLSE) method is proposed to derive the valid ground-truth label sequences from crowd sequential annotations. VLSE first derives the possible ground-truth labels at the token level and then prunes sub-paths during forward inference for label sequence decoding. This reduces the number of candidate label sequences and improves the quality of the possible ground-truth label sequences. Experimental results on several sequence labeling tasks in Natural Language Processing show the effectiveness of the proposed model.
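The two VLSE steps described in the abstract (token-wise candidate labels, then sub-path pruning in a forward pass) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names `token_candidates`, `is_valid_transition`, and `valid_sequences` are hypothetical, and the pruning rule used here (BIO tagging constraints) is an assumption chosen for illustration, since the abstract does not state the actual pruning criterion.

```python
from typing import List


def token_candidates(annotations: List[List[str]]) -> List[List[str]]:
    """Collect, for each token position, the labels proposed by any annotator."""
    length = len(annotations[0])
    return [sorted({ann[t] for ann in annotations}) for t in range(length)]


def is_valid_transition(prev: str, curr: str) -> bool:
    """Assumed validity rule (BIO scheme): I-X may only follow B-X or I-X."""
    if curr.startswith("I-"):
        entity = curr[2:]
        return prev in ("B-" + entity, "I-" + entity)
    return True


def valid_sequences(annotations: List[List[str]]) -> List[List[str]]:
    """Enumerate candidate sequences left to right, dropping invalid sub-paths early."""
    candidates = token_candidates(annotations)
    # A sequence cannot start inside an entity under the assumed BIO rule.
    paths = [[label] for label in candidates[0] if not label.startswith("I-")]
    for labels in candidates[1:]:
        paths = [
            path + [label]
            for path in paths
            for label in labels
            if is_valid_transition(path[-1], label)
        ]
    return paths


# Three hypothetical annotators labeling the same three-token sentence.
crowd = [
    ["B-PER", "I-PER", "O"],
    ["O", "I-PER", "O"],
    ["B-PER", "O", "O"],
]
for sequence in valid_sequences(crowd):
    print(sequence)
```

In this toy example the token-wise candidates allow four label sequences, and the forward pruning discards the one that places `I-PER` directly after `O`, leaving three. The full model would additionally weight the surviving sequences by annotator reliability, which this sketch does not attempt.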
