Model-Agnostic Characterization of Fairness Trade-offs


Apr 08, 2020

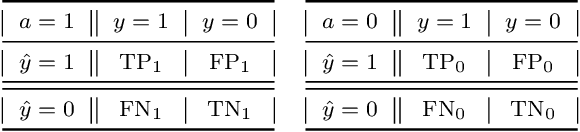

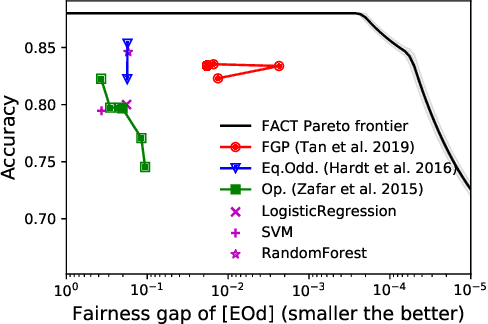

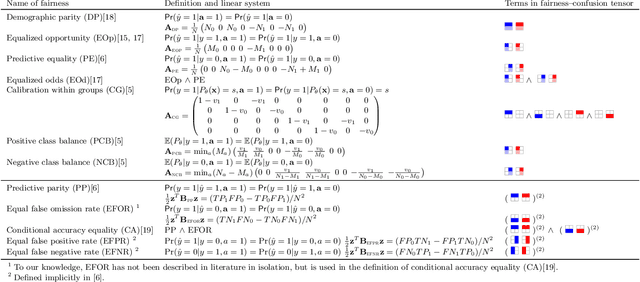

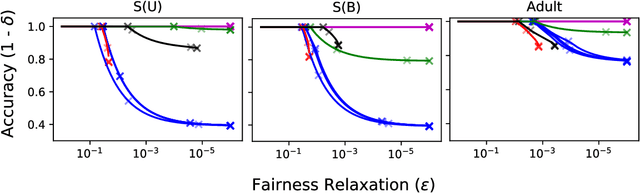

Designing a fair model involves several inherent trade-offs, such as those between the model's predictive performance and fairness, or even among different notions of fairness. In practice, exploring these trade-offs requires significant human and computational resources. We propose a diagnostic that enables practitioners to explore these trade-offs without training a single model. Our work hinges on the observation that many widely used fairness definitions can be expressed via the fairness-confusion tensor, an object obtained by splitting the traditional confusion matrix according to protected data attributes. Optimizing accuracy and fairness objectives directly over the elements of this tensor yields a data-dependent yet model-agnostic way of understanding several types of trade-offs. We further leverage this tensor-based perspective to generalize existing theoretical impossibility results to a wider range of fairness definitions. Finally, we demonstrate the usefulness of the proposed diagnostic on synthetic and real datasets.
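To make the central object concrete, the following is a minimal sketch of how a fairness-confusion tensor might be built and queried. It is not the authors' implementation. It assumes binary labels, binary predictions, and a single binary protected attribute; the `T[group, true_label, predicted_label]` layout, the function names, and the toy data are all hypothetical choices made for illustration.

```python
import numpy as np


def fairness_confusion_tensor(y_true, y_pred, group):
    """Split the confusion matrix by protected group.

    Returns a (2, 2, 2) array T where T[a, y, p] is the fraction of all
    samples with group a, true label y, and predicted label p.
    (Assumed layout; the paper may order the entries differently.)
    """
    y_true = np.asarray(y_true, dtype=int)
    y_pred = np.asarray(y_pred, dtype=int)
    group = np.asarray(group, dtype=int)
    T = np.zeros((2, 2, 2))
    # Count each (group, true, predicted) combination.
    np.add.at(T, (group, y_true, y_pred), 1.0)
    return T / len(y_true)


def accuracy(T):
    # Correct predictions sit on the diagonal of each group's matrix.
    return T[:, 0, 0].sum() + T[:, 1, 1].sum()


def demographic_parity_gap(T):
    # Positive prediction rate per group: P(p = 1 | a).
    rates = T[:, :, 1].sum(axis=1) / T.sum(axis=(1, 2))
    return abs(rates[0] - rates[1])


def equal_opportunity_gap(T):
    # True positive rate per group: P(p = 1 | y = 1, a).
    tpr = T[:, 1, 1] / T[:, 1, :].sum(axis=1)
    return abs(tpr[0] - tpr[1])


# Toy data, invented for illustration only.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 0, 1, 1, 1, 0]
group = [0, 0, 0, 0, 1, 1, 1, 1]

T = fairness_confusion_tensor(y_true, y_pred, group)
print("accuracy:", accuracy(T))
print("demographic parity gap:", demographic_parity_gap(T))
print("equal opportunity gap:", equal_opportunity_gap(T))
```

Because accuracy and both fairness gaps are functions of the entries of `T` alone, one can in principle search over feasible tensors (entries that are nonnegative and consistent with the dataset's group and label frequencies) rather than over trained models, which is the sense in which the diagnostic is model-agnostic.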
