MMGAN: Generative Adversarial Networks for Multi-Modal Distributions


Nov 15, 2019

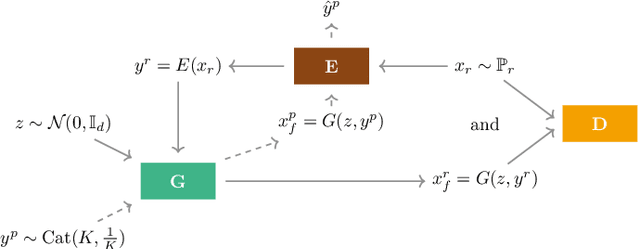

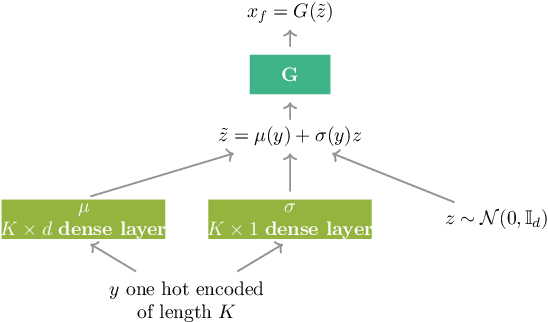

Over the past years, Generative Adversarial Networks (GANs) have shown remarkable generation performance, especially in image synthesis. Unfortunately, they are also known for an unstable training process and may lose parts of the data distribution when the input data is heterogeneous. In this paper, we propose a novel GAN extension for multi-modal distribution learning (MMGAN). In our approach, we model the latent space as a Gaussian mixture model whose number of clusters corresponds to the number of disconnected data manifolds in the observation space, and we include a clustering network that relates each data manifold to one Gaussian cluster. As a result, training becomes more stable. Moreover, MMGAN allows real data to be clustered according to the learned data manifolds in the latent space. In a series of benchmark experiments, we show that MMGAN outperforms competitive state-of-the-art models in terms of clustering performance.
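To make the idea of a Gaussian mixture latent space concrete, the following is a minimal sketch of how latent vectors could be drawn from such a prior: pick a cluster, then sample from that cluster's Gaussian. It is an illustration only, not the authors' implementation. The function name `sample_gmm_latent`, the fixed means and standard deviations, the uniform cluster weights, and the diagonal covariance are all assumptions; the abstract does not state how MMGAN parameterizes or learns these quantities, and the generator, discriminator, and clustering network are not shown.

```python
import random


def sample_gmm_latent(n_samples, means, stds, weights=None, seed=0):
    """Draw latent vectors from a diagonal Gaussian mixture (hypothetical helper).

    means, stds: one list of per-dimension values for each cluster.
    weights: cluster probabilities; uniform if omitted (an assumption).
    Returns the sampled vectors and the index of the cluster each came from.
    """
    rng = random.Random(seed)
    k = len(means)
    if weights is None:
        weights = [1.0 / k] * k

    samples, labels = [], []
    for _ in range(n_samples):
        # Step 1: choose which Gaussian cluster this sample belongs to.
        c = rng.choices(range(k), weights=weights)[0]
        # Step 2: sample each latent dimension from that cluster's Gaussian.
        z = [rng.gauss(m, s) for m, s in zip(means[c], stds[c])]
        samples.append(z)
        labels.append(c)
    return samples, labels


# Three clusters in a 2-D latent space (values chosen for illustration only).
means = [[-3.0, 0.0], [3.0, 0.0], [0.0, 4.0]]
stds = [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]]
z, labels = sample_gmm_latent(5, means, stds)
```

In a setup like the one the abstract describes, each vector in `z` would be passed to the generator, and the cluster index in `labels` is the quantity a clustering network would try to recover from the generated or real data.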
