MixPath: A Unified Approach for One-shot Neural Architecture Search

Jan 22, 2020

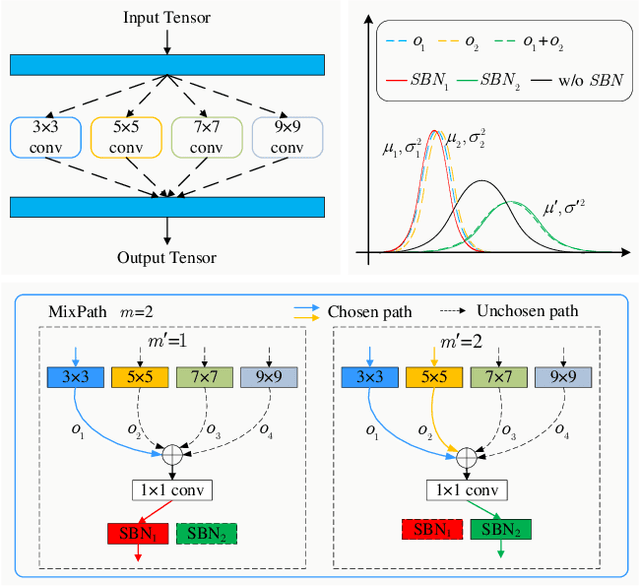

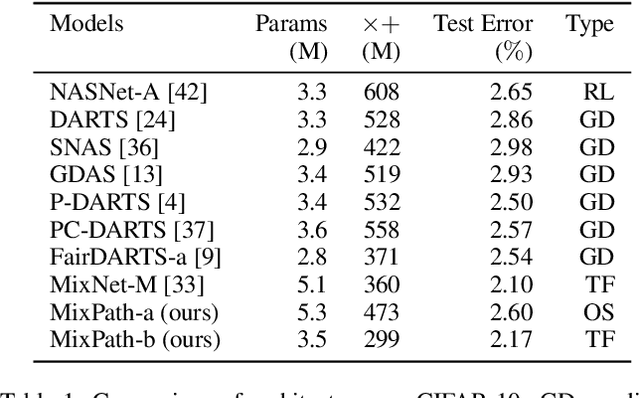

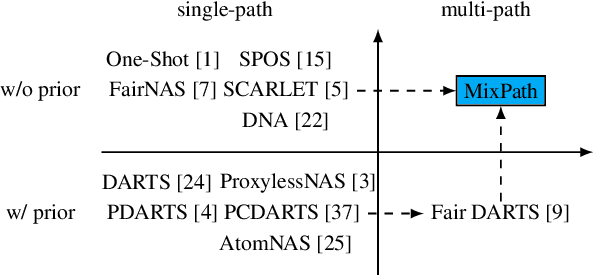

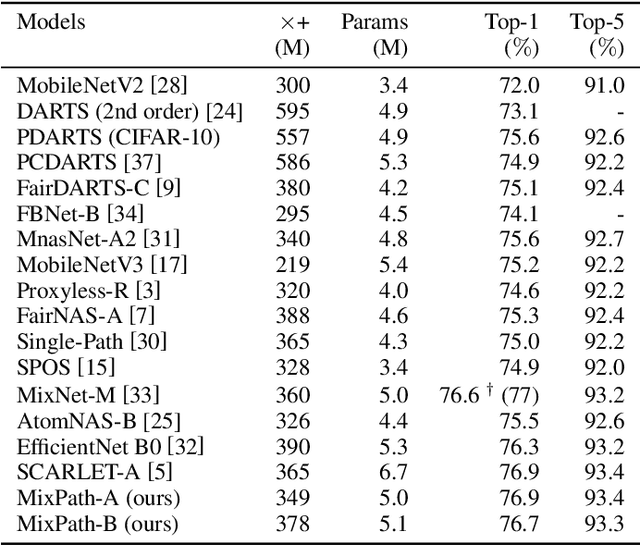

The expressiveness of the search space is a key concern in neural architecture search (NAS). Previous approaches are mainly limited to searching for single-path networks, and incorporating a multi-path search space into the current one-shot doctrine remains untackled. In this paper, we investigate supernet behavior in the multi-path setting. We show that a naive generalization from single-path to multi-path incurs severe feature inconsistency, which degrades both supernet training stability and model ranking ability. To remedy this degradation, we employ what we term shadow batch normalizations (SBNs) to capture the changing statistics when different sets of paths are activated. Extensive experiments on a common NAS benchmark, NAS-Bench-101, show that SBNs boost ranking performance at negligible cost, setting a new Kendall Tau record of 0.597 by a clear margin. Moreover, we exploit feature similarities among activated paths to greatly reduce the number of SBNs needed. We call our method MixPath. When searching proxylessly on ImageNet, we obtain several lightweight models that outperform EfficientNet-B0 with fewer FLOPs and parameters, while using 300x fewer search resources. Our code will be available at https://github.com/xiaomi-automl/MixPath.git.
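
The core mechanism, shadow batch normalization, can be illustrated with a small sketch. This is a hypothetical plain-Python illustration, not the authors' implementation: it treats features as scalars, uses made-up candidate paths, and assumes one SBN is kept per *number* of activated paths (our reading of how feature similarity among activated paths reduces the SBN count). The names `BatchNorm1D`, `MixPathLayer`, and `sample` are illustrative only.

```python
import random
import statistics


class BatchNorm1D:
    """Scalar batch normalization over a batch of floats, with running statistics."""

    def __init__(self, momentum=0.1, eps=1e-5):
        self.momentum = momentum
        self.eps = eps
        self.running_mean = 0.0
        self.running_var = 1.0

    def __call__(self, batch, training=True):
        if training:
            # Normalize with the current batch statistics and update the running averages.
            mean = statistics.fmean(batch)
            var = statistics.pvariance(batch, mu=mean)
            self.running_mean = (1 - self.momentum) * self.running_mean + self.momentum * mean
            self.running_var = (1 - self.momentum) * self.running_var + self.momentum * var
        else:
            # At evaluation time, fall back to the accumulated running statistics.
            mean, var = self.running_mean, self.running_var
        return [(x - mean) / (var + self.eps) ** 0.5 for x in batch]


class MixPathLayer:
    """Hypothetical multi-path layer: sums the activated paths, then applies a
    shadow BN selected by how many paths are active (assumption, see lead-in)."""

    def __init__(self, paths, max_active):
        self.paths = paths
        self.max_active = max_active
        # One shadow BN per possible count of active paths, 1..max_active.
        self.shadow_bns = {k: BatchNorm1D() for k in range(1, max_active + 1)}

    def sample(self, rng):
        # Uniformly pick how many paths to activate, then which ones.
        k = rng.randint(1, self.max_active)
        return sorted(rng.sample(range(len(self.paths)), k))

    def __call__(self, batch, active, training=True):
        summed = [sum(self.paths[i](x) for i in active) for x in batch]
        return self.shadow_bns[len(active)](summed, training)


# Usage: hypothetical candidate ops standing in for convolution branches.
paths = [lambda x: x, lambda x: 2.0 * x, lambda x: x + 3.0]
layer = MixPathLayer(paths, max_active=3)
rng = random.Random(0)
batch = [0.5, -1.0, 2.0, 1.5]
for _ in range(100):
    layer(batch, layer.sample(rng))
for k, bn in layer.shadow_bns.items():
    print(k, round(bn.running_mean, 3), round(bn.running_var, 3))
```

Because the sum of k paths has different statistics than the sum of k' paths, a single shared BN would average over mismatched distributions; keying the BN on the active-path count keeps each set of running statistics consistent with the features it actually sees.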