MixerGAN: An MLP-Based Architecture for Unpaired Image-to-Image Translation


May 28, 2021

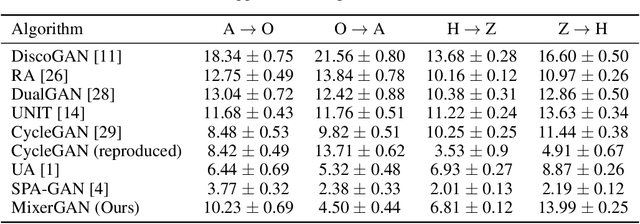

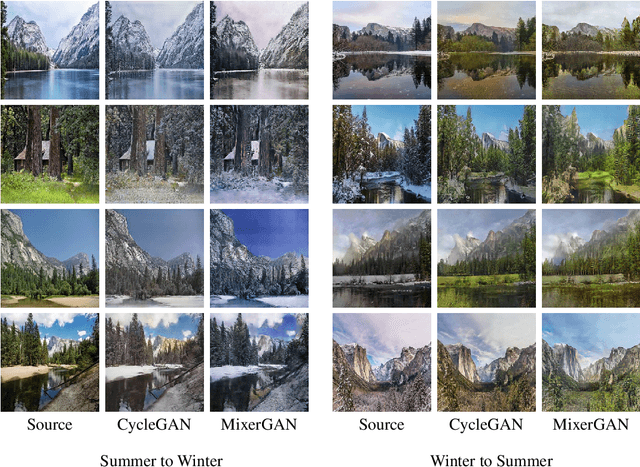

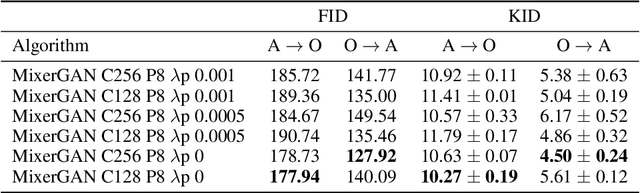

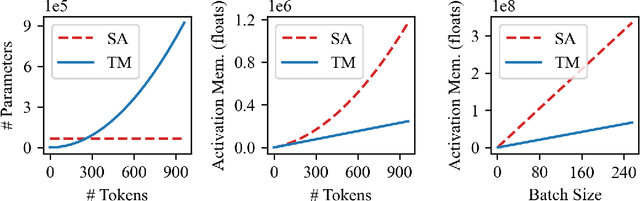

While attention-based transformer networks achieve unparalleled success in nearly all language tasks, the large number of tokens, coupled with the quadratic growth of activation memory in the number of tokens, makes them prohibitively expensive for visual tasks. As such, while language-to-language translation has been revolutionized by the transformer model, convolutional networks remain the de facto solution for image-to-image translation. The recently proposed MLP-Mixer architecture alleviates some of the speed and memory issues associated with attention-based networks while still retaining the long-range connections that make transformer models desirable. Leveraging this efficient alternative to self-attention, we propose a new unpaired image-to-image translation model called MixerGAN: a simpler MLP-based architecture that considers long-distance relationships between pixels without the need for expensive attention mechanisms. Quantitative and qualitative analyses show that MixerGAN achieves competitive results when compared to prior convolution-based methods.
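To make the idea of MLP-based mixing concrete, the following is a minimal NumPy sketch of a single mixer block in the style of MLP-Mixer: one MLP applied across tokens (image patches), then one MLP applied across channels, each with a skip connection. It is an illustration only, not the authors' MixerGAN implementation. The function names, the sizes (64 tokens, 32 channels, hidden width 128), the random initialization, and the omission of learnable layer-norm scale and shift are all assumptions made for this example.

```python
import numpy as np


def layer_norm(x, eps=1e-6):
    # Normalize each token's feature vector (last axis); no learned scale/shift.
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def gelu(x):
    # Tanh approximation of the GELU activation.
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))


def init_mlp(rng, dim, hidden):
    # Hypothetical initialization: small Gaussian weights, zero biases.
    return (
        rng.normal(0.0, 0.02, (dim, hidden)), np.zeros(hidden),
        rng.normal(0.0, 0.02, (hidden, dim)), np.zeros(dim),
    )


def mlp(x, w1, b1, w2, b2):
    # Two-layer perceptron acting on the last axis of x.
    return gelu(x @ w1 + b1) @ w2 + b2


def mixer_block(x, token_params, channel_params):
    # x has shape (num_tokens, channels).
    # Token mixing: transpose so the MLP acts across tokens, giving every
    # patch a direct connection to every other patch without attention.
    x = x + mlp(layer_norm(x).T, *token_params).T
    # Channel mixing: the MLP acts on each token's features independently.
    x = x + mlp(layer_norm(x), *channel_params)
    return x


rng = np.random.default_rng(0)
num_tokens, channels, hidden = 64, 32, 128  # assumed sizes for illustration
x = rng.normal(size=(num_tokens, channels))
token_params = init_mlp(rng, num_tokens, hidden)
channel_params = init_mlp(rng, channels, hidden)
out = mixer_block(x, token_params, channel_params)
```

In this sketch the token-mixing weights have `num_tokens * hidden` entries per layer, whereas self-attention would materialize a `num_tokens * num_tokens` attention map for every input, which is the quadratic activation cost the abstract refers to.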
