Mix-n-Match: Ensemble and Compositional Methods for Uncertainty Calibration in Deep Learning

Paper and Code

Mar 16, 2020

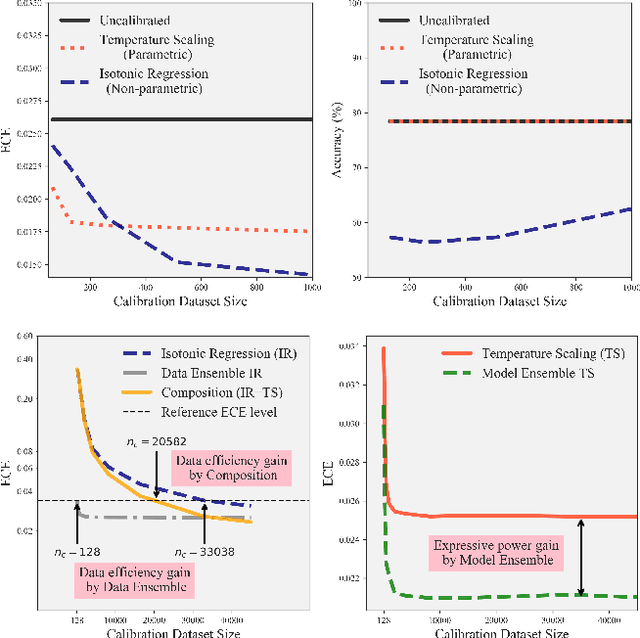

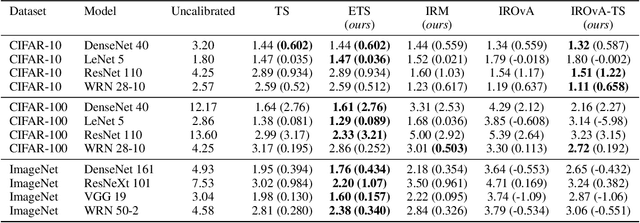

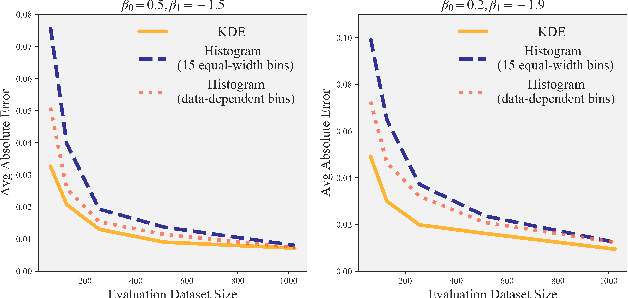

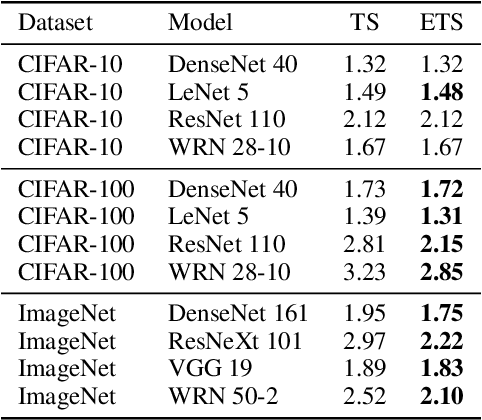

This paper studies the problem of post-hoc calibration of machine learning classifiers. We introduce the following desiderata for uncertainty calibration: (a) accuracy-preserving, (b) data-efficient, and (c) high expressive power. We show that none of the existing methods satisfy all three requirements, and demonstrate how Mix-n-Match calibration strategies (i.e., ensemble and composition) can help achieve remarkably better data-efficiency and expressive power while provably preserving classification accuracy of the original classifier. We also show that existing calibration error estimators (e.g., histogram-based ECE) are unreliable especially in small-data regime. Therefore, we propose an alternative data-efficient kernel density-based estimator for a reliable evaluation of the calibration performance and prove its asymptotically unbiasedness and consistency.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge