Mitigating Domain Mismatch in Face Recognition Using Style Matching

Feb 26, 2021

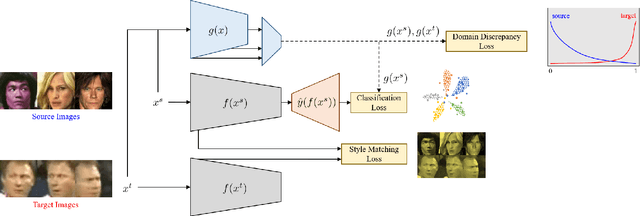

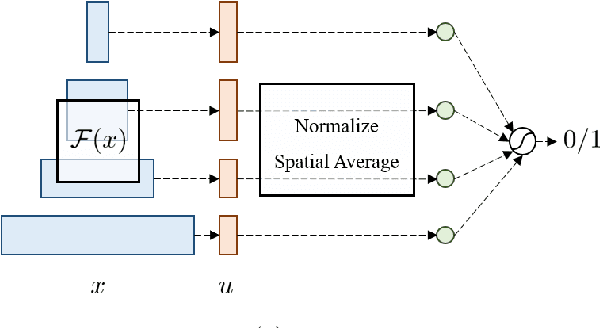

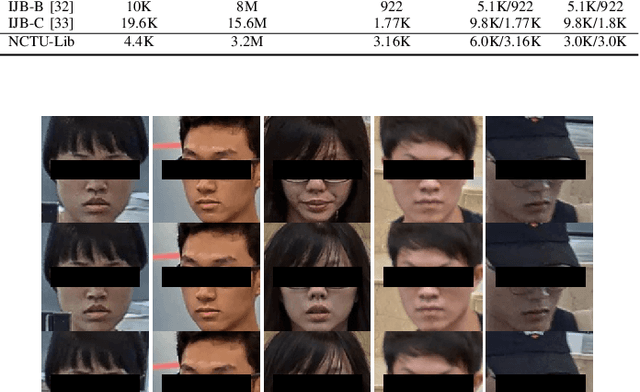

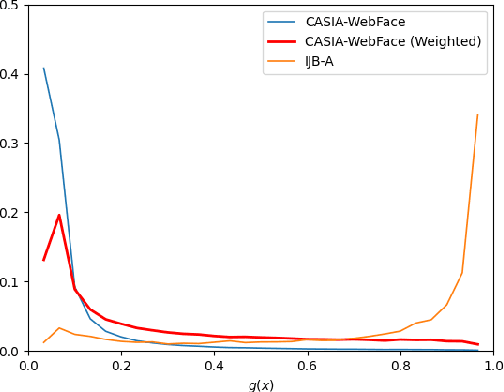

Despite outstanding performance on public benchmarks, face recognition still suffers from domain mismatch between training (source) and testing (target) data. Furthermore, the two domains do not share classes, which complicates domain adaptation. Because face recognition is also a fine-grained classification problem that does not strictly follow the low-density separation principle, conventional domain adaptation approaches do not resolve these problems. In this paper, we formulate domain mismatch in face recognition as a style mismatch problem, for which we propose two methods. First, we design a domain discriminator with human-level judgment to mine target-like images in the training data and thereby mitigate the domain gap. Second, we extract style representations from low-level feature maps of the backbone model and match the style distributions of the two domains to find a common style representation. Evaluations on verification, open-set identification, and closed-set identification protocols show that both methods improve performance, and that performance is more robust when they are combined. Our approach is competitive with related work, and its effectiveness is verified in a practical application.
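
The second method can be illustrated with a minimal sketch. The abstract does not say how style is represented or how the distributions are matched, so everything below is an assumption made for illustration: style is taken to be the channel-wise mean and standard deviation of a low-level feature map (a common choice in the style-transfer literature), and the two domains are compared by matching the first two moments of those style vectors. The function names `style_vectors` and `style_gap` are hypothetical and are not taken from the paper.

```python
import numpy as np


def style_vectors(feats, eps=1e-5):
    """Map feature maps of shape (N, C, H, W) to style vectors of shape (N, 2C).

    Assumption: style = per-channel spatial mean and standard deviation.
    """
    mu = feats.mean(axis=(2, 3))                    # (N, C)
    sigma = np.sqrt(feats.var(axis=(2, 3)) + eps)   # (N, C)
    return np.concatenate([mu, sigma], axis=1)      # (N, 2C)


def style_gap(src_feats, tgt_feats):
    """Moment-matching gap between source and target style distributions.

    Assumption: compares the batch mean and batch variance of the style
    vectors; the paper may use a different matching criterion.
    """
    s = style_vectors(src_feats)
    t = style_vectors(tgt_feats)
    mean_gap = np.sum((s.mean(axis=0) - t.mean(axis=0)) ** 2)
    var_gap = np.sum((s.var(axis=0) - t.var(axis=0)) ** 2)
    return float(mean_gap + var_gap)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    source = rng.normal(size=(16, 8, 14, 14))        # stand-in for source feature maps
    target = 1.5 * rng.normal(size=(16, 8, 14, 14))  # stand-in with a different "style"
    print("gap(source, source):", style_gap(source, source))
    print("gap(source, target):", style_gap(source, target))
```

In a training setup, a quantity like `style_gap` would be added to the recognition loss so that the backbone's low-level features from both domains move toward a shared style; here it only measures the mismatch between two batches.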
