Mitigating Backdoors within Deep Neural Networks in Data-limited Configuration


Nov 13, 2023

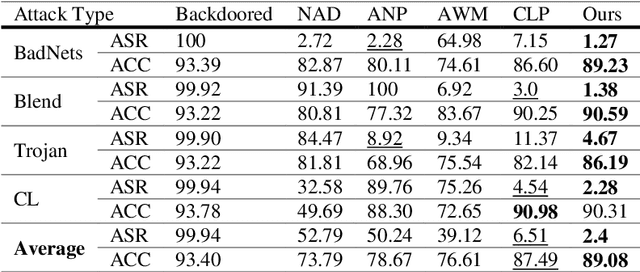

As the capacity of deep neural networks (DNNs) increases, so does their need for large amounts of data. A common practice is to outsource the training process or to collect more data over the Internet, which introduces the risk of a backdoored DNN. A backdoored DNN behaves normally on clean data but behaves maliciously once a trigger is injected into a sample at test time. In such cases, the defender faces multiple difficulties. First, the available clean dataset may not be sufficient for fine-tuning and recovering the backdoored DNN. Second, in many real-world applications the trigger cannot be recovered without prior information about it. In this paper, we formulate some characteristics of poisoned neurons as a backdoor suspiciousness score, which ranks network neurons according to their activation values, their weights, and their relationship with other neurons in the same layer. Our experiments indicate that the proposed method reduces the chance of a successful attack by more than 50% with a tiny clean dataset, i.e., ten clean samples for the CIFAR-10 dataset, without significantly deteriorating the model's performance. Moreover, the proposed method runs three times as fast as the baselines.
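The abstract names the inputs to the suspiciousness score (activation values, weights, and a neuron's relationship to others in its layer) but does not give the formula. The sketch below is therefore only an illustration of the general idea of ranking and pruning neurons, not the paper's method. It assumes a hypothetical heuristic: a neuron is more suspicious when it is nearly dormant on clean samples yet has unusually large outgoing weights compared with its layer peers. The function names `suspiciousness_scores` and `prune_most_suspicious`, and the scoring rule itself, are assumptions made for this example.

```python
from statistics import mean, pstdev


def _zscores(values, eps=1e-8):
    """Standardize values relative to the other neurons in the same layer."""
    mu = mean(values)
    sigma = pstdev(values)
    return [(v - mu) / (sigma + eps) for v in values]


def suspiciousness_scores(activations, out_weights):
    """Hypothetical score (NOT the paper's formula).

    activations: list of samples, each a list with one activation per neuron,
                 recorded on a small clean dataset.
    out_weights: list with one row per neuron holding its outgoing weights.
    """
    n_neurons = len(out_weights)
    # Mean absolute activation of each neuron over the clean samples.
    mean_act = [
        mean(abs(sample[j]) for sample in activations) for j in range(n_neurons)
    ]
    # L2 norm of each neuron's outgoing weights.
    weight_norm = [sum(w * w for w in row) ** 0.5 for row in out_weights]
    act_z = _zscores(mean_act)
    w_z = _zscores(weight_norm)
    # Assumed rule: large weights and low clean activation raise the score.
    return [w - a for w, a in zip(w_z, act_z)]


def prune_most_suspicious(out_weights, scores, k):
    """Return a copy of out_weights with the k highest-scoring neurons zeroed."""
    ranked = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
    top = ranked[:k]
    pruned = [
        [0.0] * len(row) if j in top else list(row)
        for j, row in enumerate(out_weights)
    ]
    return pruned, top


# Toy layer: four neurons, ten clean samples. Neuron 2 never fires on clean
# data but carries much larger outgoing weights than its peers.
clean_acts = [[1.0, 1.0, 0.0, 1.0] for _ in range(10)]
weights = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0], [5.0, 5.0, 5.0], [1.0, 1.0, 1.0]]
scores = suspiciousness_scores(clean_acts, weights)
pruned_weights, pruned_ids = prune_most_suspicious(weights, scores, k=1)
```

In this toy layer the only neuron pruned is neuron 2. A real defense would need the score defined in the paper and would have to confirm that clean accuracy is preserved after pruning.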
