Minor changes make a difference: a case study on the consistency of UD-based dependency parsers

Nov 30, 2021

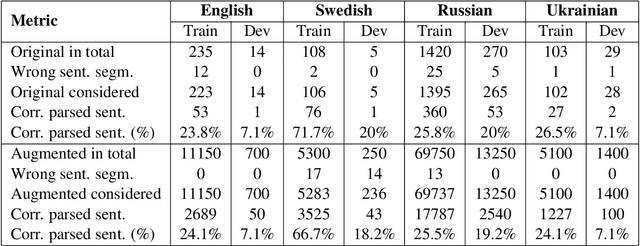

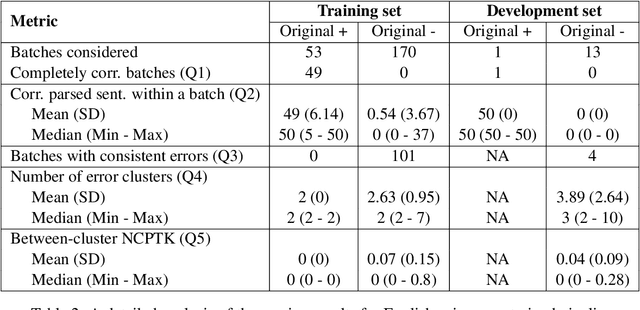

Many downstream applications use dependency trees and thus rely on dependency parsers producing correct, or at least consistent, output. However, dependency parsers are trained using machine learning and are therefore susceptible to unwanted inconsistencies due to biases in the training data. This paper explores the effects of such biases in four languages - English, Swedish, Russian, and Ukrainian - through an experiment where we study the effect of replacing numerals in sentences. We show that such seemingly insignificant changes in the input can cause large differences in the output, and suggest that data augmentation can remedy the problems.
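The numeral-replacement idea can be illustrated with a minimal sketch. It is not the authors' code: the names `replace_numerals`, `is_consistent`, and `toy_parser` are hypothetical, a parse is assumed to be a list of (head index, relation label) pairs, and the toy parser is a deliberately biased stand-in for a real UD parser.

```python
import re
from typing import Callable, List, Tuple

# Assumption: a parse is one (head_index, relation_label) pair per token.
Parse = List[Tuple[int, str]]

# Assumption: a "numeral" is any token made up only of digits.
NUMERAL = re.compile(r"\d+")


def replace_numerals(tokens: List[str], replacement: str) -> List[str]:
    """Return a copy of tokens with every all-digit token replaced."""
    return [replacement if NUMERAL.fullmatch(tok) else tok for tok in tokens]


def is_consistent(
    parser: Callable[[List[str]], Parse],
    tokens: List[str],
    replacements: List[str],
) -> bool:
    """True if the parser returns the same tree for every numeral variant."""
    reference = parser(tokens)
    return all(
        parser(replace_numerals(tokens, rep)) == reference
        for rep in replacements
    )


def toy_parser(tokens: List[str]) -> Parse:
    """Hypothetical stand-in parser with a built-in bias:
    numerals of up to three digits get 'nummod', longer ones get 'dep'."""
    parse = []
    for i, tok in enumerate(tokens):
        if i == 0:
            parse.append((0, "root"))       # first token is the root
        elif tok.isdigit() and len(tok) <= 3:
            parse.append((1, "nummod"))     # short numeral
        else:
            parse.append((1, "dep"))        # everything else
    return parse


sentence = ["She", "bought", "3", "apples"]
print(is_consistent(toy_parser, sentence, ["5", "7"]))     # short numerals only
print(is_consistent(toy_parser, sentence, ["5", "2021"]))  # includes a long numeral
```

To try this with a real parser, `toy_parser` would be swapped for a wrapper that returns the head and relation of each token; the comparison itself is unchanged.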

* Accepted to the 5th Workshop on Universal Dependencies at SyntaxFest 2021
