Middle-level Fusion for Lightweight RGB-D Salient Object Detection

May 06, 2021

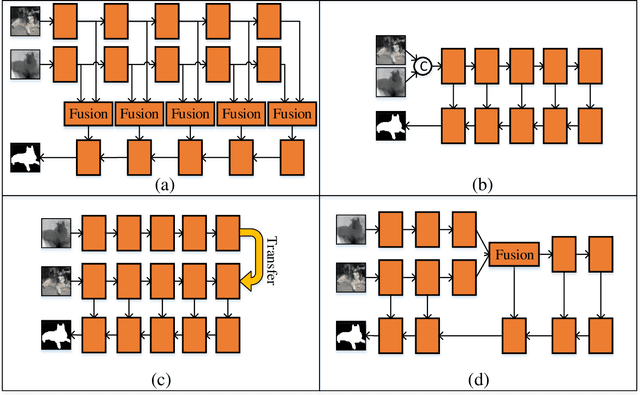

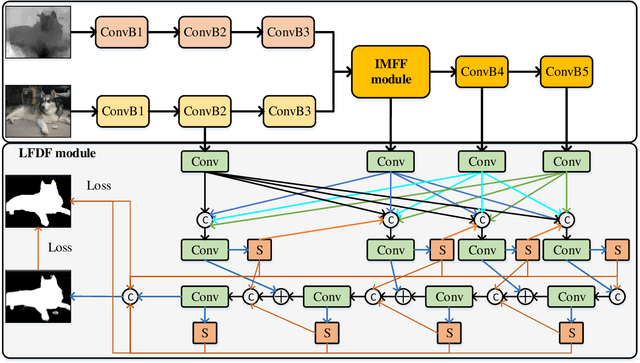

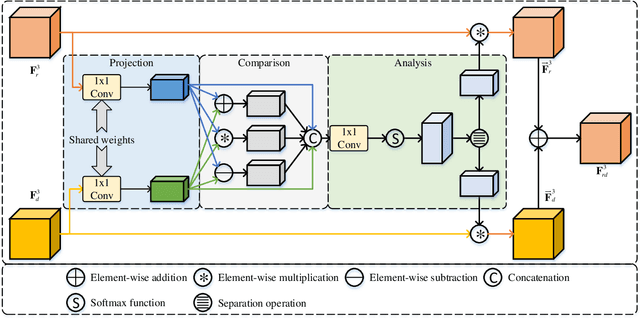

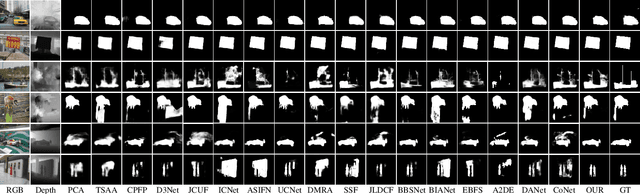

Most existing RGB-D salient object detection (SOD) models incur high computational cost and memory consumption to detect salient objects accurately, which limits their real-life applications. To address this issue, this paper presents a novel lightweight RGB-D SOD model. Unlike most existing models, which usually employ a two-stream or single-stream structure, we propose a middle-level fusion structure for designing a lightweight RGB-D SOD model. Like the two-stream structure, the middle-level fusion structure can simultaneously exploit modality-shared and modality-specific information; like the single-stream structure, it can significantly reduce the number of network parameters. Based on this structure, a novel information-aware multi-modal feature fusion (IMFF) module is first designed to effectively capture cross-modal complementary information. Then, a novel lightweight feature-level and decision-level feature fusion (LFDF) module is designed to aggregate feature-level and decision-level saliency information at different stages with fewer parameters. With the IMFF and LFDF modules incorporated into the middle-level fusion structure, our proposed model has only 3.9M parameters and runs at 33 FPS. Furthermore, experimental results on several benchmark datasets verify the effectiveness and superiority of the proposed method over some state-of-the-art methods.
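The parameter argument in the abstract can be illustrated with a back-of-the-envelope count. The sketch below is not the paper's architecture: the stage widths, the 3x3 kernels, the split point, and all function names are hypothetical, and the fusion modules (IMFF, LFDF) are left out entirely. It only assumes that middle-level fusion keeps separate early stages per modality and shares the later stages, and compares that layout with a two-stream and a single-stream backbone.

```python
# Toy parameter count for three RGB-D backbone layouts.
# All widths and the split point are hypothetical, chosen only for illustration.

def conv_params(c_in, c_out, k=3):
    """Weights plus biases of one k x k convolution layer."""
    return k * k * c_in * c_out + c_out


def backbone_params(c_in, widths, k=3):
    """Total parameters of a plain stack of convolutions, one per stage."""
    total = 0
    for c_out in widths:
        total += conv_params(c_in, c_out, k)
        c_in = c_out
    return total


WIDTHS = [16, 32, 64, 128, 256]  # hypothetical output channels per stage


def two_stream(widths=WIDTHS):
    # One full backbone for RGB (3 channels) and one for depth (1 channel).
    return backbone_params(3, widths) + backbone_params(1, widths)


def single_stream(widths=WIDTHS):
    # RGB and depth concatenated into a 4-channel input, one backbone.
    return backbone_params(4, widths)


def middle_level(widths=WIDTHS, split=3):
    # Assumption: modality-specific early stages, shared later stages.
    early, late = widths[:split], widths[split:]
    specific = backbone_params(3, early) + backbone_params(1, early)
    shared = backbone_params(early[-1], late)
    return specific + shared


if __name__ == "__main__":
    for name, fn in [("two-stream", two_stream),
                     ("middle-level", middle_level),
                     ("single-stream", single_stream)]:
        print(f"{name:>14}: {fn():,} parameters")
```

Because the deeper, wider stages dominate the count, sharing them brings the middle-level layout close to the single-stream total while the early stages stay modality-specific. The absolute numbers here are unrelated to the 3.9M figure reported in the abstract.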
