MetaPix: Domain Transfer for Semantic Segmentation by Meta Pixel Weighting


Oct 05, 2021

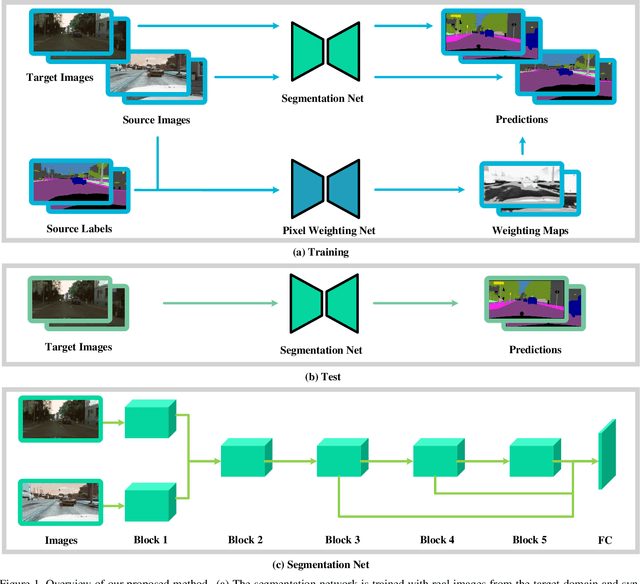

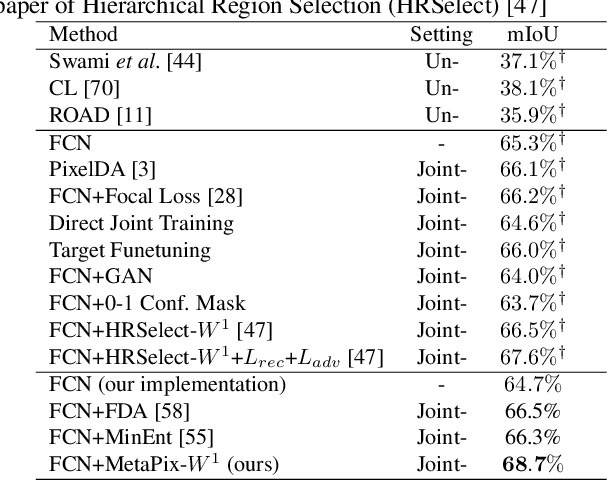

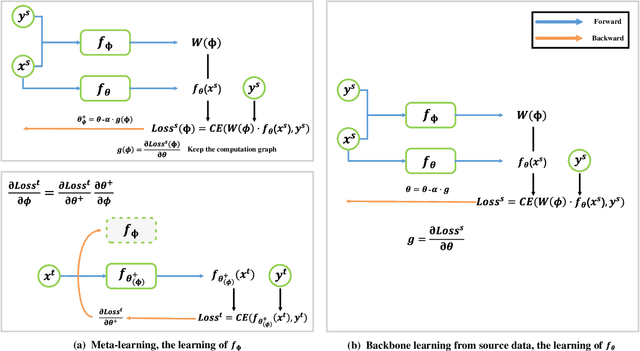

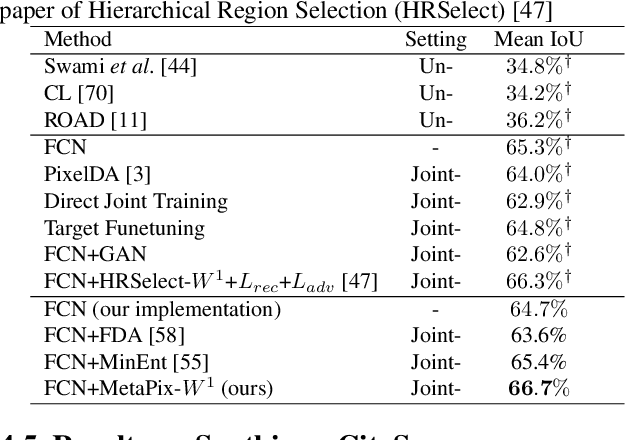

Training a deep neural model for semantic segmentation requires collecting a large amount of pixel-level labeled data. To alleviate this data scarcity in real-world settings, one can use synthetic data, whose labels are easy to obtain. Previous work has shown that the performance of a semantic segmentation model can be improved by training jointly on real and synthetic examples, provided the synthetic data is properly weighted. In that work, the weighting was learned with a heuristic that maximizes the similarity between synthetic and real examples. In our work, we instead learn a pixel-level weighting of the synthetic data by meta-learning; that is, the weighting is learned solely to minimize the loss on the target task. We achieve this with a gradient-on-gradient technique that propagates the target loss back into the parameters of the weighting model. Experiments show that our method, using a single meta module, can outperform a complicated combination of adversarial feature alignment, a reconstruction loss, and hierarchical heuristic weighting at the pixel, region, and image levels.
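The gradient-on-gradient idea described in the abstract can be illustrated with a toy sketch. This is not the authors' implementation: it assumes a one-parameter model `theta` (prediction `theta * x`), a squared-error loss, and a "weighting model" reduced to one free logit per synthetic pixel, with weight `sigmoid(phi_i)`. All function names are hypothetical. Because the model is this small, the derivative of the target loss through one inner gradient step is written out by hand with the chain rule rather than computed by an autodiff library.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def weighted_grad(theta, xs, ys, ws):
    """d/dtheta of the weighted synthetic loss mean_i w_i * (theta*x_i - y_i)^2."""
    n = len(xs)
    return sum(w * 2.0 * (theta * x - y) * x for x, y, w in zip(xs, ys, ws)) / n


def target_loss(theta, xs, ys):
    """Unweighted squared error on the real (target) data."""
    return sum((theta * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def target_grad(theta, xs, ys):
    """d/dtheta of target_loss."""
    return sum(2.0 * (theta * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def meta_gradient(theta, phis, syn, real, lr):
    """One inner step on weighted synthetic data, then the gradient of the
    target loss at the updated theta with respect to every weight logit."""
    syn_x, syn_y = syn
    real_x, real_y = real
    ws = [sigmoid(p) for p in phis]
    n = len(syn_x)

    # Inner step: update the model on the weighted synthetic loss.
    theta_new = theta - lr * weighted_grad(theta, syn_x, syn_y, ws)

    # Outer gradient: target loss evaluated at the *updated* parameter.
    g_target = target_grad(theta_new, real_x, real_y)

    grads = []
    for w, x, y in zip(ws, syn_x, syn_y):
        # theta_new depends on w_i through the inner gradient (gradient-on-gradient).
        dtheta_dw = -lr * 2.0 * (theta * x - y) * x / n
        dw_dphi = w * (1.0 - w)  # derivative of the sigmoid
        grads.append(g_target * dtheta_dw * dw_dphi)
    return theta_new, grads


def run_demo(steps=100, lr=0.1, meta_lr=1.0):
    # Real data follows y = 2x. The first two synthetic "pixels" agree with it,
    # the last two do not (assumed toy data, chosen only for illustration).
    real = ([1.0, 2.0], [2.0, 4.0])
    syn = ([1.0, 1.0, 1.0, 1.0], [2.0, 2.0, -1.0, -1.0])
    theta, phis = 0.0, [0.0, 0.0, 0.0, 0.0]
    for _ in range(steps):
        theta, grads = meta_gradient(theta, phis, syn, real, lr)
        phis = [p - meta_lr * g for p, g in zip(phis, grads)]
    return theta, [sigmoid(p) for p in phis]
```

In this toy setting, calling `run_demo()` returns the trained `theta` and the learned per-pixel weights; synthetic pixels that agree with the real data should end up weighted above those that do not. In a real segmentation model the hand-written chain rule would be replaced by an autodiff framework's second-order gradient support, and the per-pixel logits by a learned weighting network.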
