Mesh-Based Solutions for Nonparametric Penalized Regression


Dec 07, 2021

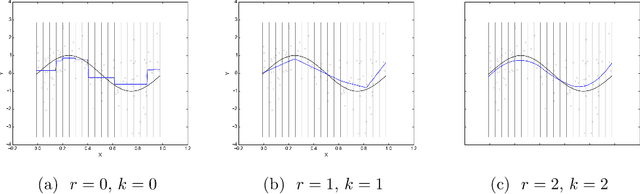

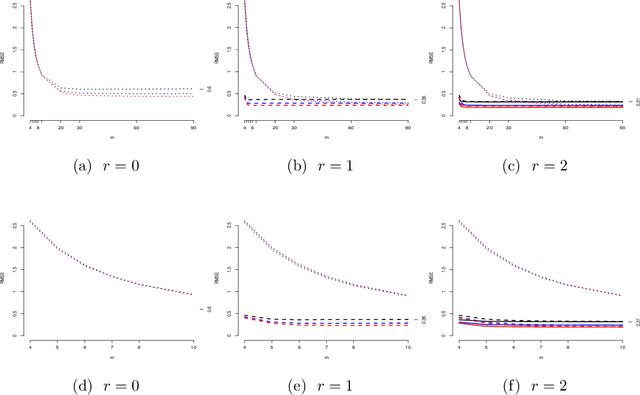

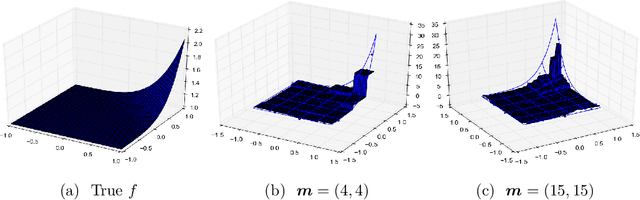

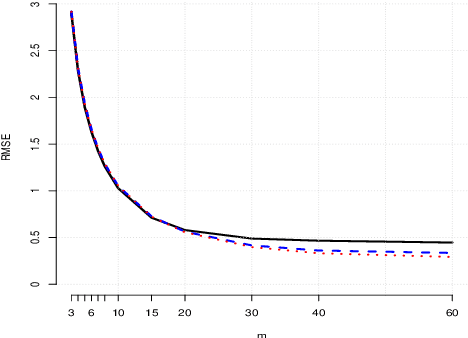

It is often of interest to estimate regression functions nonparametrically. Nonparametric penalized regression (NPR) is one statistically effective, well-studied solution to this problem. Unfortunately, in many cases, finding exact solutions to NPR problems is computationally intractable. In this manuscript, we propose a mesh-based approximate solution (MBS) for those scenarios. MBS transforms the complicated functional minimization of NPR into a finite-parameter, discrete convex minimization, which allows us to leverage the tools of modern convex optimization. We show applications of MBS in a number of explicit examples (including both univariate and multivariate regression), and explore how the number of parameters must increase with the sample size in order for MBS to maintain the rate-optimality of NPR. We also give an efficient algorithm to minimize the MBS objective while effectively leveraging the sparsity inherent in MBS.
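To make the idea of replacing a functional minimization with a finite-parameter convex problem concrete, here is a minimal univariate sketch. It is not the paper's algorithm. It assumes a uniform mesh, linear interpolation between mesh points, and a squared second-difference penalty (chosen only because it yields a closed-form solve). The function name `mesh_fit` and the parameters `m` and `lam` are hypothetical.

```python
import numpy as np

def mesh_fit(x, y, m=50, lam=1.0):
    """Illustrative mesh-based penalized fit (assumed setup, not the paper's method).

    The unknown function is represented by its values `theta` at `m`
    uniformly spaced mesh points, so the problem has exactly m parameters.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = x.size
    mesh = np.linspace(x.min(), x.max(), m)
    h = mesh[1] - mesh[0]

    # Interpolation matrix A: (A @ theta)[i] is the piecewise-linear
    # interpolant of theta evaluated at x[i]. Each row has two nonzeros.
    idx = np.clip(np.searchsorted(mesh, x, side="right") - 1, 0, m - 2)
    w = (x - mesh[idx]) / h
    A = np.zeros((n, m))
    rows = np.arange(n)
    A[rows, idx] = 1.0 - w
    A[rows, idx + 1] = w

    # Second-difference operator D: a discrete stand-in for f''.
    D = np.diff(np.eye(m), n=2, axis=0) / h**2

    # Convex objective: (1/n) * ||y - A theta||^2 + lam * h * ||D theta||^2.
    # Its minimizer solves the linear system below.
    lhs = A.T @ A / n + lam * h * (D.T @ D)
    rhs = A.T @ y / n
    theta = np.linalg.solve(lhs, rhs)
    return mesh, theta
```

Both `A` and `D` are sparse in structure (two and three nonzeros per row, respectively). Dense arrays are used here only for brevity; a sparse solver would be the natural choice for large `m`. Other convex penalties, such as an absolute-value penalty on differences, would need an iterative convex solver instead of a single linear solve.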
