Merging Models with Fisher-Weighted Averaging


Nov 18, 2021

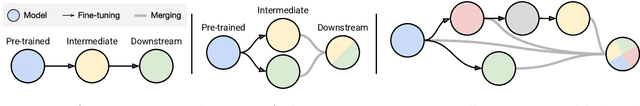

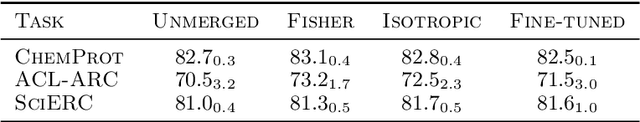

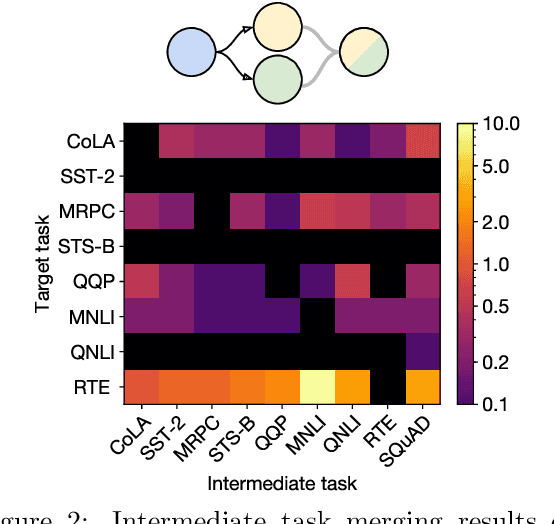

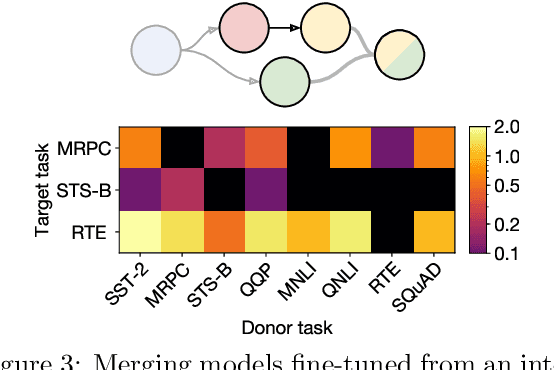

Transfer learning provides a way of leveraging knowledge from one task when learning another task. Performing transfer learning typically involves iteratively updating a model's parameters through gradient descent on a training dataset. In this paper, we introduce a fundamentally different method for transferring knowledge across models that amounts to "merging" multiple models into one. Our approach effectively involves computing a weighted average of the models' parameters. We show that this averaging is equivalent to approximately sampling from the posteriors of the model weights. While using an isotropic Gaussian approximation works well in some cases, we also demonstrate benefits by approximating the precision matrix via the Fisher information. In sum, our approach makes it possible to combine the "knowledge" in multiple models at an extremely low computational cost compared to standard gradient-based training. We demonstrate that model merging achieves comparable performance to gradient descent-based transfer learning on intermediate-task training and domain adaptation problems. We also show that our merging procedure makes it possible to combine models in previously unexplored ways. To measure the robustness of our approach, we perform an extensive ablation on the design of our algorithm.
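To make the weighted average concrete, here is a minimal sketch in plain Python. It assumes each model has been flattened to a list of floats and that a per-parameter importance weight is already available for each model, for example a diagonal Fisher estimate. The function name `merge_parameters`, the `eps` fallback, and the toy numbers are illustrative assumptions and are not taken from the paper's code.

```python
from typing import List, Sequence


def merge_parameters(
    params: Sequence[Sequence[float]],
    weights: Sequence[Sequence[float]],
    eps: float = 1e-12,
) -> List[float]:
    """Per-parameter weighted average of several models.

    params[m][i]  : value of parameter i in model m (hypothetical flat layout)
    weights[m][i] : nonnegative importance of parameter i in model m,
                    e.g. a diagonal Fisher estimate (assumed precomputed)
    """
    num_params = len(params[0])
    merged = []
    for i in range(num_params):
        total = sum(w[i] for w in weights)
        if total > eps:
            weighted = sum(w[i] * p[i] for p, w in zip(params, weights))
            merged.append(weighted / total)
        else:
            # Assumption: fall back to a plain mean when every weight is ~0.
            merged.append(sum(p[i] for p in params) / len(params))
    return merged


# Toy example with two models and two parameters each.
theta_a, theta_b = [1.0, 2.0], [3.0, 6.0]
fisher_a, fisher_b = [1.0, 3.0], [1.0, 1.0]

# Fisher-style weighting: the second parameter leans toward model A.
fisher_merged = merge_parameters([theta_a, theta_b], [fisher_a, fisher_b])

# Equal weights (the isotropic case) reduce to a simple average.
ones = [1.0, 1.0]
simple_average = merge_parameters([theta_a, theta_b], [ones, ones])

print(fisher_merged)   # [2.0, 3.0]
print(simple_average)  # [2.0, 4.0]
```

With equal weights the function returns the ordinary parameter average, which corresponds to the isotropic Gaussian case mentioned in the abstract. Unequal weights pull each merged parameter toward the model that assigns it more importance.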
