Memory Efficient Adaptive Attention For Multiple Domain Learning

Paper and Code

Oct 21, 2021

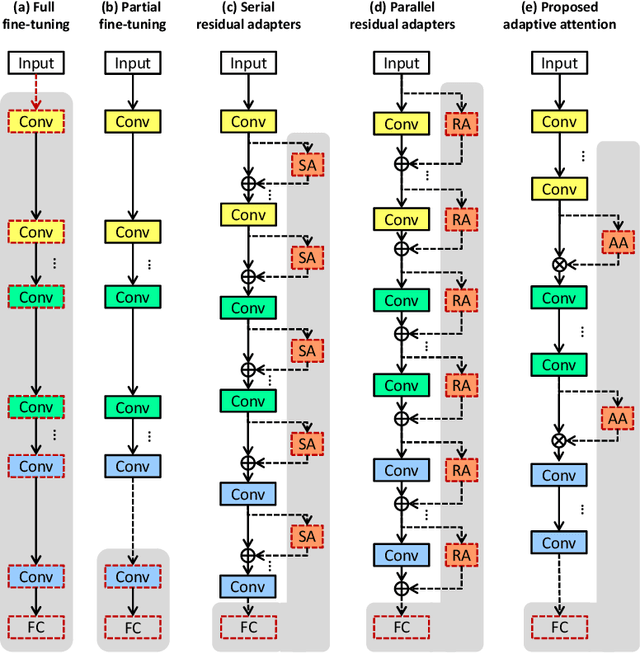

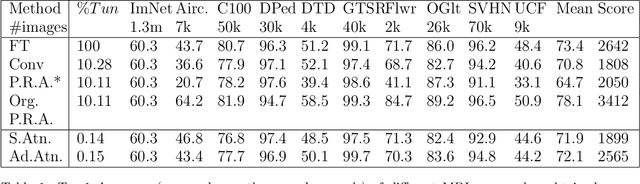

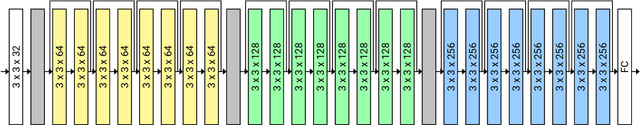

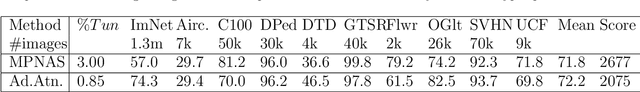

Training CNNs from scratch on new domains typically demands large numbers of labeled images and computations, which is not suitable for low-power hardware. One way to reduce these requirements is to modularize the CNN architecture and freeze the weights of the heavier modules, that is, the lower layers after pre-training. Recent studies have proposed alternative modular architectures and schemes that lead to a reduction in the number of trainable parameters needed to match the accuracy of fully fine-tuned CNNs on new domains. Our work suggests that a further reduction in the number of trainable parameters by an order of magnitude is possible. Furthermore, we propose that new modularization techniques for multiple domain learning should also be compared on other realistic metrics, such as the number of interconnections needed between the fixed and trainable modules, the number of training samples needed, the order of computations required and the robustness to partial mislabeling of the training data. On all of these criteria, the proposed architecture demonstrates advantages over or matches the current state-of-the-art.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge