Memory-based Controllers for Efficient Data-driven Control of Soft Robots


Sep 19, 2023

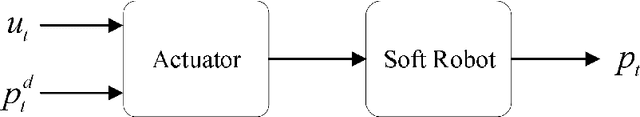

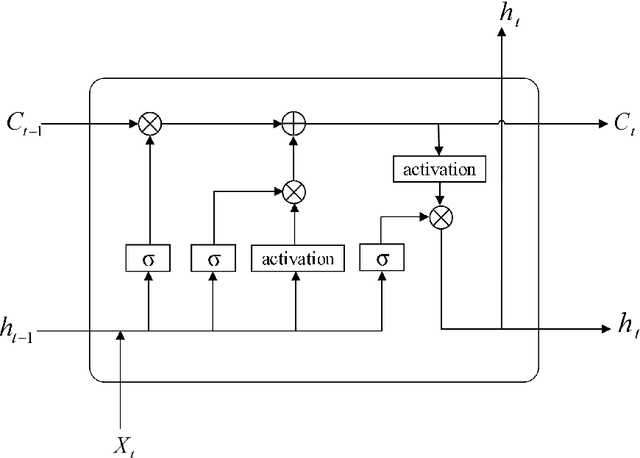

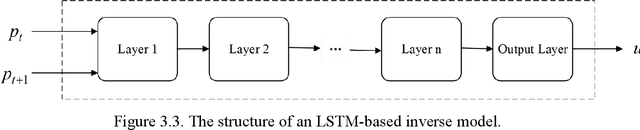

Controller design for soft robots is challenging because flexible materials deform nonlinearly and have many degrees of freedom. Data-driven approaches are a promising solution to this problem. However, existing data-driven controller design methods for soft robots suffer from two drawbacks: (i) they require excessively long training times, and (ii) they may produce inefficient controllers. This paper addresses these issues by developing two memory-based controllers for soft robots that can be trained in a data-driven fashion: the finite memory controller (FMC) approach and the long short-term memory (LSTM) based approach. An FMC stores the tracking errors at different time instances and computes the actuation signal as a weighted sum of the stored tracking errors. We develop three reinforcement learning (RL) algorithms for computing the optimal weights of an FMC, using the Q-learning, soft actor-critic, and deep deterministic policy gradient (DDPG) methods. An LSTM-based controller consists of an LSTM network whose inputs are the robot's desired configuration and current configuration, and whose output is the actuation signal required for the soft robot to follow the desired configuration. We study the performance of the proposed approaches in controlling a soft finger, using existing RL-based controllers and proportional-integral-derivative (PID) controllers as benchmarks. Our numerical results show that the training time of the proposed memory-based controllers is significantly shorter than that of the classical RL-based controllers. Moreover, the proposed controllers achieve a smaller tracking error than both the classical RL algorithms and the PID controller.
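To make the FMC idea concrete, the following is a minimal sketch of a controller that keeps a fixed-length window of past tracking errors and outputs their weighted sum. It is an illustration under stated assumptions, not the paper's implementation: the class name `FiniteMemoryController`, the scalar configuration, the zero-initialized memory, and the hand-picked weights are all hypothetical. In the paper the weights are learned with RL, which this sketch does not cover.

```python
from collections import deque


class FiniteMemoryController:
    """Hypothetical FMC sketch: actuation = weighted sum of stored tracking errors."""

    def __init__(self, weights):
        # weights[0] multiplies the newest error, weights[-1] the oldest.
        # Assumption: weights are given; the paper learns them with RL.
        self.weights = list(weights)
        # Assumption: the error memory starts filled with zeros.
        self.errors = deque([0.0] * len(self.weights), maxlen=len(self.weights))

    def step(self, desired, measured):
        # Store the newest tracking error at the front; because the deque
        # has a fixed maxlen, the oldest error is dropped from the back.
        self.errors.appendleft(desired - measured)
        # Actuation signal: weighted sum of the stored tracking errors.
        return sum(w * e for w, e in zip(self.weights, self.errors))


# Usage with a memory of three errors and illustrative weights.
controller = FiniteMemoryController([0.5, 0.25, 0.25])
u1 = controller.step(desired=1.0, measured=0.0)  # errors: [1.0, 0.0, 0.0]
u2 = controller.step(desired=1.0, measured=0.5)  # errors: [0.5, 1.0, 0.0]
u3 = controller.step(desired=1.0, measured=1.0)  # errors: [0.0, 0.5, 1.0]
print(u1, u2, u3)
```

With a memory length of one, this reduces to a proportional controller. Longer windows let the weights encode behavior that depends on the error history, which is what the learned weights are meant to exploit.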
