Memetic Algorithms Beat Evolutionary Algorithms on the Class of Hurdle Problems

Apr 17, 2018

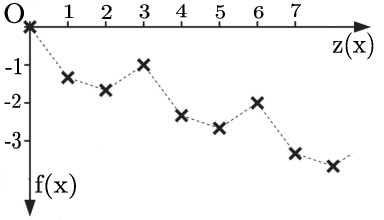

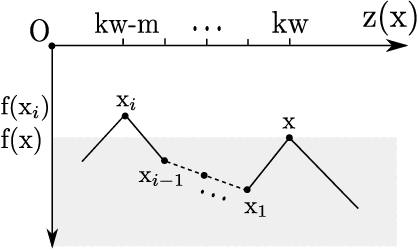

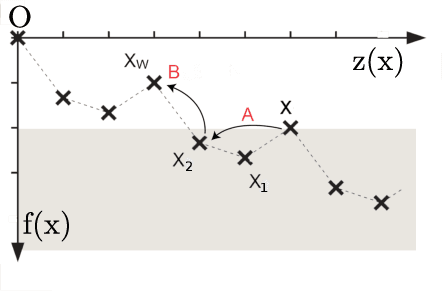

Memetic algorithms are popular hybrid search heuristics that integrate local search into the search process of an evolutionary algorithm in order to combine the advantages of rapid exploitation and global optimisation. However, these algorithms are not well understood, and the field lacks a solid theoretical foundation that explains when and why memetic algorithms are effective. We provide a rigorous runtime analysis of a simple memetic algorithm, the $(1+1)$ MA, on the Hurdle problem class, a landscape class of tuneable difficulty that shows a "big valley structure", a characteristic feature of many hard problems from combinatorial optimisation. The only parameter of this class is the hurdle width $w$, which describes the length of the fitness valleys that have to be overcome. We show that the $(1+1)$ EA requires $\Theta(n^w)$ expected function evaluations to find the optimum, whereas the $(1+1)$ MA with best-improvement and first-improvement local search can find the optimum in $\Theta(n^2+n^3/w^2)$ and $\Theta(n^3/w^2)$ function evaluations, respectively. Surprisingly, while increasing the hurdle width makes the problem harder for evolutionary algorithms, it makes the problem easier for memetic algorithms. We discuss how these findings can explain and illustrate the success of memetic algorithms for problems with big valley structures.
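To make the setting concrete, the following is a minimal Python sketch of a $(1+1)$ MA with first-improvement local search on a Hurdle-style function. The abstract does not state the fitness function or the algorithm's details, so several things here are assumptions: the fitness is taken to be $f(x) = -\lceil z(x)/w \rceil - (z(x) \bmod w)/w$ with $z(x)$ the number of zeros (maximised, optimum at the all-ones string), mutation is standard bit mutation with rate $1/n$, and local search runs until it reaches a local optimum rather than to a fixed depth. The function names (`hurdle`, `first_improvement_ls`, `one_plus_one_ma`) and the iteration cap are hypothetical and chosen for illustration only.

```python
import math
import random


def hurdle(x, w):
    """Assumed Hurdle fitness (maximised): local optima sit where the
    number of zeros z is a multiple of w; the global optimum is z = 0."""
    z = x.count(0)
    return -math.ceil(z / w) - (z % w) / w


def first_improvement_ls(x, w, rng):
    """Hill-climb from x: try single-bit flips in random order and move to
    the first strictly better neighbour; stop when no neighbour improves.
    Returns (local optimum, its fitness, number of fitness evaluations)."""
    x = list(x)
    fx = hurdle(x, w)
    evals = 1
    improved = True
    while improved:
        improved = False
        order = list(range(len(x)))
        rng.shuffle(order)
        for i in order:
            x[i] ^= 1                 # flip bit i
            fy = hurdle(x, w)
            evals += 1
            if fy > fx:               # accept the first improvement found
                fx = fy
                improved = True
                break
            x[i] ^= 1                 # undo the flip
    return x, fx, evals


def one_plus_one_ma(n, w, rng, max_iters=100_000):
    """Sketch of a (1+1) MA: mutate, refine by local search, keep the
    result if it is at least as fit as the current search point."""
    x = [rng.randint(0, 1) for _ in range(n)]
    x, fx, evals = first_improvement_ls(x, w, rng)
    for _ in range(max_iters):
        if fx == 0:                   # all-ones string reached
            break
        # Standard bit mutation: flip each bit independently w.p. 1/n.
        y = [b ^ (rng.random() < 1 / n) for b in x]
        y, fy, used = first_improvement_ls(y, w, rng)
        evals += used
        if fy >= fx:
            x, fx = y, fy
    return x, fx, evals


if __name__ == "__main__":
    rng = random.Random(1)
    best, fitness, evaluations = one_plus_one_ma(n=30, w=5, rng=rng)
    print(fitness, evaluations)
```

Under the assumed fitness, a mutation that flips a single zero at a local optimum lands on a point from which local search can either fall back to the same local optimum or climb down the full valley to the next one. This is the mechanism by which a wider hurdle stops being an obstacle for the memetic variant, whereas a plain $(1+1)$ EA would need to flip $w$ specific bits in one mutation.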
