MBGDT: Robust Mini-Batch Gradient Descent

Paper and Code

Jun 14, 2022

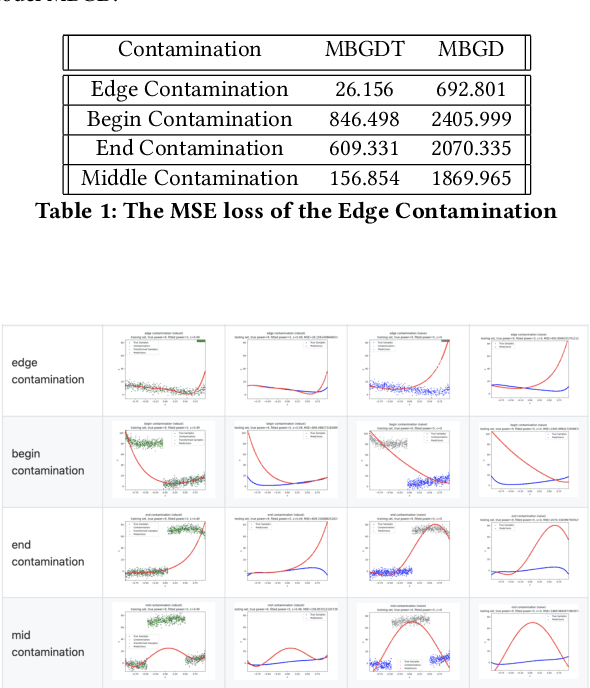

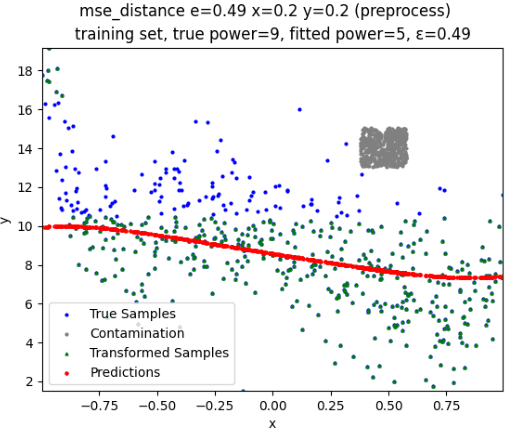

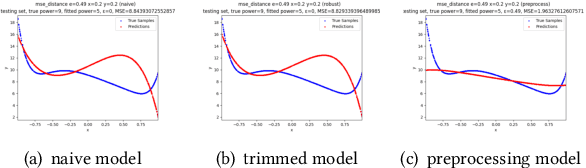

In high dimensions, most machine learning methods become fragile even when only a few outliers are present. To address this, we introduce a new method built on a base learner, such as Bayesian regression or stochastic gradient descent, to reduce the model's vulnerability to outliers. Because mini-batch gradient descent allows for more robust convergence than batch gradient descent, we build our method on mini-batch gradient descent and call it Mini-Batch Gradient Descent with Trimming (MBGDT). On our designed datasets, MBGDT achieves state-of-the-art performance and is more robust than several baselines.
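The abstract does not spell out the trimming rule, so the following is only a minimal sketch of one plausible reading, not the authors' implementation. It assumes a linear regression model with squared loss and assumes that "trimming" means dropping the highest-loss fraction of samples in each mini-batch before the gradient step. The function name `mbgd_trimmed` and the parameters `trim_frac`, `batch_size`, `lr`, and `epochs` are hypothetical names chosen for illustration.

```python
import numpy as np


def mbgd_trimmed(X, y, trim_frac=0.2, batch_size=32, lr=0.05, epochs=200, seed=0):
    """Mini-batch gradient descent for linear regression with per-batch trimming.

    Assumption (not taken from the paper): in every mini-batch, the samples with
    the largest squared residuals are discarded before computing the gradient.
    Setting trim_frac=0.0 gives plain mini-batch gradient descent.
    """
    rng = np.random.default_rng(seed)
    n, d = X.shape
    w = np.zeros(d)
    for _ in range(epochs):
        order = rng.permutation(n)  # reshuffle the data every epoch
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            Xb, yb = X[idx], y[idx]
            residuals = Xb @ w - yb
            # Number of samples kept after trimming (always at least one).
            keep = max(1, int(np.ceil((1.0 - trim_frac) * len(idx))))
            # Indices of the `keep` samples with the smallest squared residuals.
            kept = np.argsort(residuals ** 2)[:keep]
            # Gradient of 0.5 * mean squared residual over the kept samples.
            grad = Xb[kept].T @ residuals[kept] / keep
            w -= lr * grad
    return w
```

Calling `mbgd_trimmed(X, y)` on a feature matrix `X` of shape `(n, d)` and a target vector `y` of length `n` returns the fitted weight vector. Under these assumptions, `trim_frac` should be chosen somewhat larger than the expected outlier fraction, so that a mini-batch with more outliers than average is still cleaned.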
