MatSat: a matrix-based differentiable SAT solver

Paper and Code

Aug 14, 2021

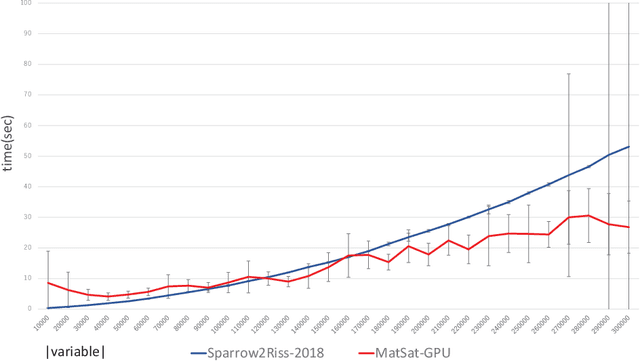

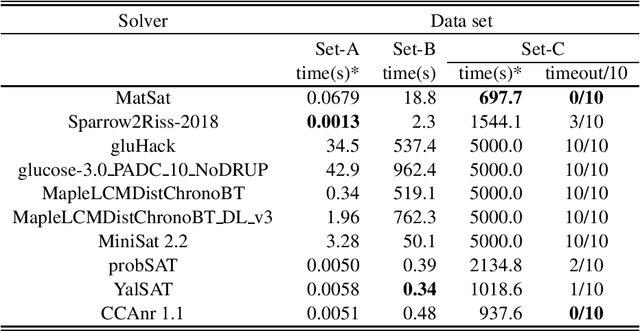

We propose a new approach to SAT solving which solves SAT problems in vector spaces as a cost minimization problem of a non-negative differentiable cost function J^sat. In our approach, a solution, i.e., satisfying assignment, for a SAT problem in n variables is represented by a binary vector u in {0,1}^n that makes J^sat(u) zero. We search for such u in a vector space R^n by cost minimization, i.e., starting from an initial u_0 and minimizing J to zero while iteratively updating u by Newton's method. We implemented our approach as a matrix-based differential SAT solver MatSat. Although existing main-stream SAT solvers decide each bit of a solution assignment one by one, be they of conflict driven clause learning (CDCL) type or of stochastic local search (SLS) type, MatSat fundamentally differs from them in that it continuously approach a solution in a vector space. We conducted an experiment to measure the scalability of MatSat with random 3-SAT problems in which MatSat could find a solution up to n=10^5 variables. We also compared MatSat with four state-of-the-art SAT solvers including winners of SAT competition 2018 and SAT Race 2019 in terms of time for finding a solution, using a random benchmark set from SAT 2018 competition and an artificial random 3-SAT instance set. The result shows that MatSat comes in second in both test sets and outperforms all the CDCL type solvers.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge