Masked Sensory-Temporal Attention for Sensor Generalization in Quadruped Locomotion


Sep 05, 2024

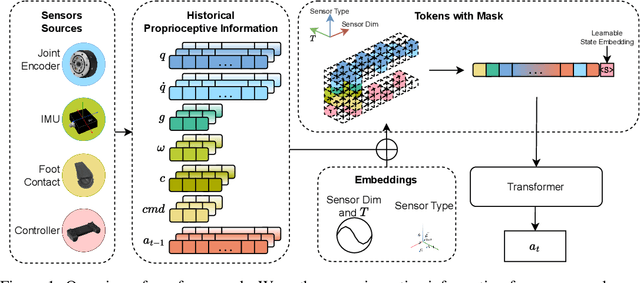

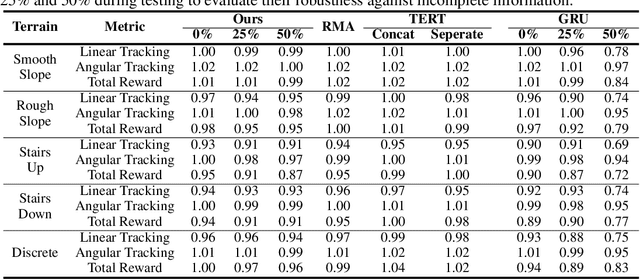

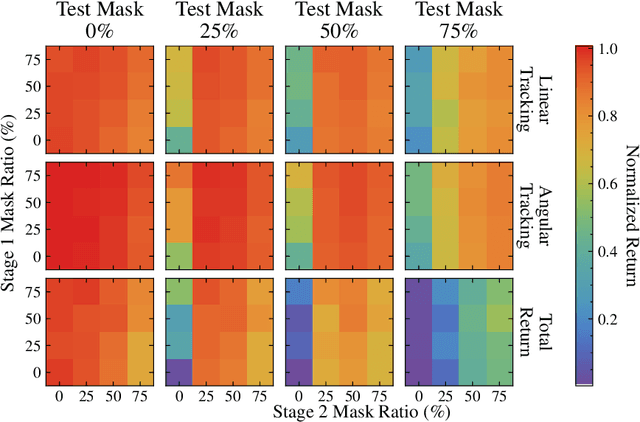

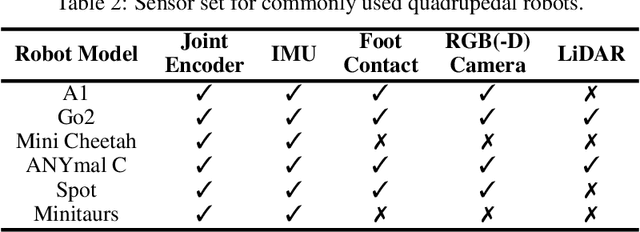

With the rising focus on quadrupeds, a generalized policy capable of handling different robot models and sensory inputs would be highly beneficial. Although several methods have been proposed to address different morphologies, managing varied combinations of proprioceptive information remains a challenge for learning-based policies. This paper presents Masked Sensory-Temporal Attention (MSTA), a novel transformer-based model with masking for quadruped locomotion. It applies attention directly at the sensor level to enhance sensory-temporal understanding and to handle different combinations of sensor data, serving as a foundation for incorporating unseen information. The model can effectively understand its state even when a large portion of the information is missing, and it is flexible enough to be deployed on a physical system despite the long input sequence.
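To make the idea of sensor-level attention with masking more concrete, here is a minimal NumPy sketch. It is not the authors' implementation. Every name and shape in it (`sensor_tokens`, `masked_self_attention`, the embedding parameters, one token per scalar reading per timestep, a single attention head with identity query/key/value projections) is an assumption chosen for illustration. It shows only the general mechanism: each reading becomes its own token, and tokens for missing sensors are excluded from attention.

```python
import numpy as np

def sensor_tokens(obs, mask, w, b, sensor_emb, time_emb):
    """Turn a (T, S) window of scalar sensor readings into T*S tokens.

    obs:        (T, S) readings for T timesteps and S sensor channels
    mask:       (T, S) bool, True where the reading is available
    w, b:       (D,) hypothetical per-dimension scale and bias for the value
    sensor_emb: (S, D) hypothetical embedding identifying the sensor channel
    time_emb:   (T, D) hypothetical embedding identifying the timestep
    """
    tok = obs[..., None] * w + b + sensor_emb[None, :, :] + time_emb[:, None, :]
    T, S, D = tok.shape
    return tok.reshape(T * S, D), mask.reshape(T * S)

def masked_self_attention(x, keep):
    """Single-head self-attention in which masked tokens are never attended to.

    Identity projections are used for queries, keys, and values to keep the
    sketch short; a real transformer layer would learn these projections.
    """
    if not keep.any():
        raise ValueError("at least one token must be available")
    d = x.shape[-1]
    scores = x @ x.T / np.sqrt(d)                       # (N, N) similarities
    scores = np.where(keep[None, :], scores, -np.inf)   # hide masked keys
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)                            # masked columns become 0
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ x, weights

# Example: 5 timesteps, 4 sensor channels, channel 2 entirely missing.
rng = np.random.default_rng(0)
T, S, D = 5, 4, 8
obs = rng.normal(size=(T, S))
mask = np.ones((T, S), dtype=bool)
mask[:, 2] = False
params = (rng.normal(size=D), rng.normal(size=D),
          rng.normal(size=(S, D)), rng.normal(size=(T, D)))
x, keep = sensor_tokens(obs, mask, *params)
out, weights = masked_self_attention(x, keep)
state = out[keep].mean(axis=0)  # pooled summary over available tokens only
```

Because the missing channel receives zero attention weight, the rows of `out` for available tokens do not depend on whatever placeholder values sit in that channel. Note also that the sequence length is `T * S` rather than `T`, which is why sensor-level tokenization produces the long input sequence the abstract mentions.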
