Markov Chain Importance Sampling - a highly efficient estimator for MCMC


May 23, 2018

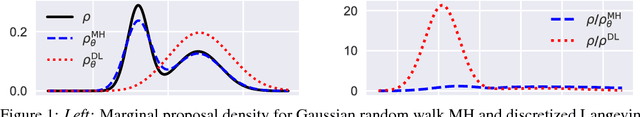

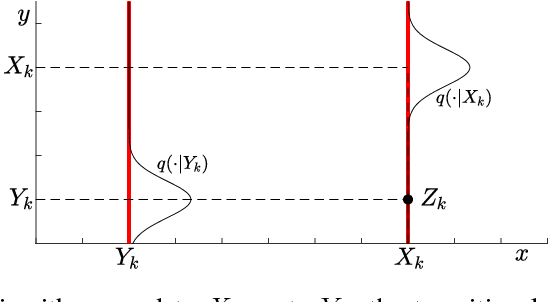

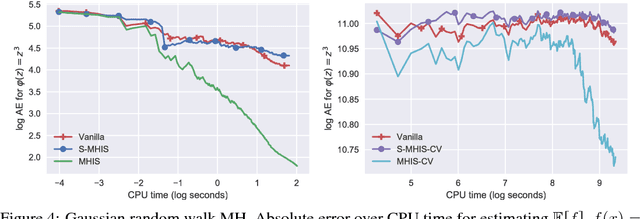

Markov chain algorithms are ubiquitous in machine learning, statistics, and many other disciplines. In this work we present a novel estimator, dubbed Markov chain importance sampling (MCIS), that is applicable to several classes of Markov chains. For a broad class of Metropolis-Hastings algorithms, MCIS makes efficient use of rejected proposals. For discretized Langevin diffusions, it provides a novel way of correcting the discretization error. Our estimator satisfies a central limit theorem and reduces the error per CPU cycle, often to a large extent. As a by-product, it enables estimation of the normalizing constant, an important quantity in Bayesian machine learning and statistics.
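To make the idea of reusing rejected proposals concrete, the following is a minimal sketch and not the authors' implementation. It assumes a one-dimensional random-walk Metropolis-Hastings sampler with a Gaussian proposal and a standard normal target known only up to a constant. It further assumes that the proposals are importance-weighted against an empirical mixture of the proposal densities centered at the chain states. The function names `mh_with_proposals` and `mcis_estimate` and the parameter `step` are hypothetical and chosen for illustration only.

```python
import numpy as np


def log_target(x):
    # Unnormalized log-density of a standard normal (illustrative target).
    return -0.5 * x**2


def mh_with_proposals(n_steps, step=1.5, x0=0.0, seed=0):
    """Random-walk Metropolis-Hastings that records every proposal."""
    rng = np.random.default_rng(seed)
    states = np.empty(n_steps)
    proposals = np.empty(n_steps)
    x = x0
    for k in range(n_steps):
        states[k] = x                          # state the proposal is drawn from
        y = x + step * rng.standard_normal()   # proposal, Gaussian around x
        proposals[k] = y                       # stored whether accepted or not
        if np.log(rng.uniform()) < log_target(y) - log_target(x):
            x = y                              # accept; otherwise stay at x
    return states, proposals


def mcis_estimate(f, states, proposals, step=1.5):
    """Self-normalized importance sampling estimate over all proposals.

    Assumption of this sketch: the marginal density of the proposals is
    approximated by the average of the Gaussian proposal densities
    centered at the recorded chain states.
    """
    diff = proposals[:, None] - states[None, :]
    q = np.exp(-0.5 * (diff / step) ** 2) / (step * np.sqrt(2.0 * np.pi))
    rho_hat = q.mean(axis=1)                   # estimated proposal marginal
    weights = np.exp(log_target(proposals)) / rho_hat
    estimate = np.sum(weights * f(proposals)) / np.sum(weights)
    z_hat = weights.mean()                     # normalizing-constant estimate
    return estimate, z_hat


if __name__ == "__main__":
    states, proposals = mh_with_proposals(3000, seed=1)
    second_moment, z_hat = mcis_estimate(lambda x: x**2, states, proposals)
    print("E[x^2] estimate:", second_moment)
    print("normalizing constant estimate:", z_hat)
```

For this toy target the second moment is 1 and the normalizing constant is the square root of 2π, so both printed values should land near those numbers. Note that the weight computation forms an N-by-N matrix, which is acceptable for a few thousand samples but would need batching or subsampling for longer chains.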
