Mapless Navigation: Learning UAVs Motion for Exploration of Unknown Environments

Oct 04, 2021

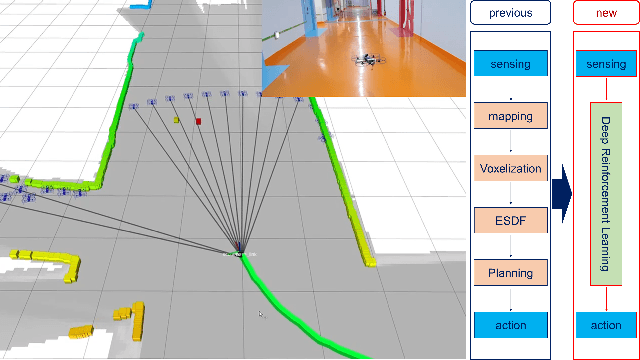

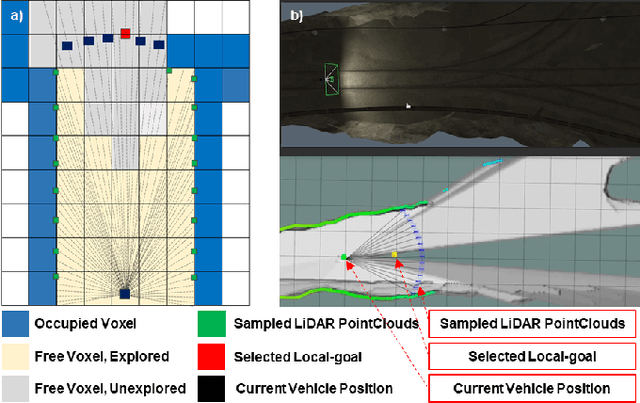

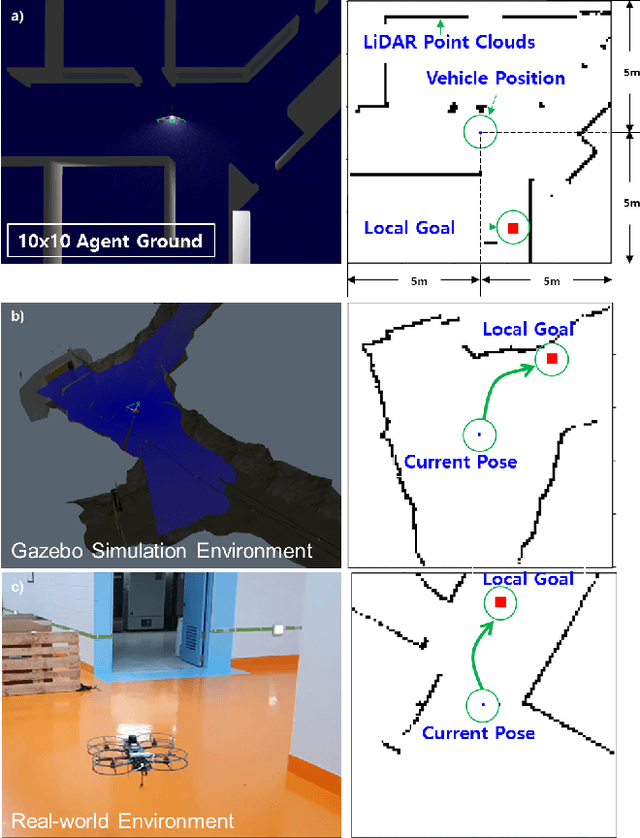

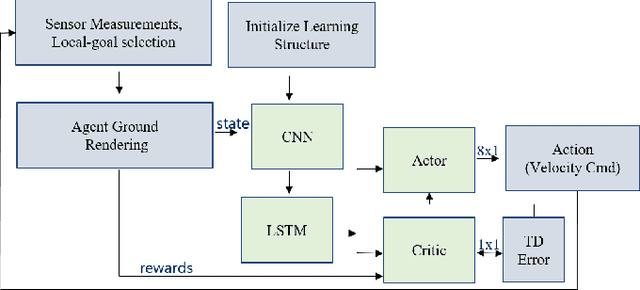

This study presents a new methodology for learning-based motion planning for autonomous exploration with aerial robots. An action policy that guides autonomous exploration of underground and tunnel environments is derived through reinforcement learning, that is, learning by trial and error. A new Markov decision process state is designed so that the robot's action policy can be learned in simulation only and then applied in the real world without further training. The approach reduces the need for the precise map required by grid-based path planners and achieves map-less navigation. The proposed method produces paths at lower computing cost than a grid-based planner while achieving similar performance. The trained action policy is extensively evaluated in both simulation and field trials on autonomous exploration of underground mines or indoor spaces.
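
To make the idea of a map-free state more concrete, the following is a minimal sketch and not the paper's actual state design, which the abstract does not specify. It assumes the robot carries a 360-degree range sensor and compresses each scan into a fixed-size vector that a policy could consume without any grid map. The names `build_state` and `greedy_heading`, the sector count, and the 10 m range cap are all hypothetical, and the greedy rule is only a placeholder for a learned policy.

```python
import math


def build_state(ranges, n_sectors=8, max_range=10.0):
    """Compress a 360-degree range scan into a fixed-size, map-free state.

    Hypothetical illustration: each sector keeps its nearest obstacle
    distance, clipped to max_range and normalized to [0, 1].
    """
    if n_sectors <= 0 or len(ranges) == 0 or len(ranges) % n_sectors != 0:
        raise ValueError("scan length must be a positive multiple of n_sectors")
    per_sector = len(ranges) // n_sectors
    state = []
    for i in range(n_sectors):
        sector = ranges[i * per_sector:(i + 1) * per_sector]
        # Clip so that out-of-range (e.g. infinite) returns map to 1.0.
        nearest = min(min(sector), max_range)
        state.append(nearest / max_range)
    return state


def greedy_heading(state):
    """Placeholder for a learned policy: head toward the most open sector.

    Returns the start angle of that sector in radians. A trained policy
    would replace this rule; it is not the method from the paper.
    """
    best = max(range(len(state)), key=lambda i: state[i])
    return 2.0 * math.pi * best / len(state)


if __name__ == "__main__":
    # Toy scan: obstacles 1 m away on one side, open space on the other.
    scan = [1.0] * 4 + [20.0] * 4
    s = build_state(scan, n_sectors=4)
    print(s, greedy_heading(s))
```

Because the state depends only on the current scan, its size stays constant no matter how large the explored environment becomes, which is one plausible reason a map-free formulation can cost less to compute than a grid-based planner.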
