Manifold Learning Using Kernel Density Estimation and Local Principal Components Analysis


Sep 11, 2017

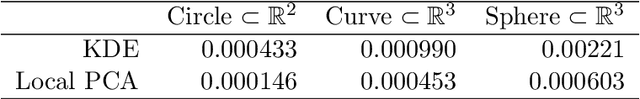

We consider the problem of recovering a $d$-dimensional manifold $\mathcal{M} \subset \mathbb{R}^n$ when provided with noiseless samples from $\mathcal{M}$. There are many algorithms (e.g., Isomap) that are used in practice to fit manifolds and thus reduce the dimensionality of a given data set. Ideally, the estimate $\mathcal{M}_\mathrm{put}$ of $\mathcal{M}$ should be an actual manifold of a certain smoothness; furthermore, $\mathcal{M}_\mathrm{put}$ should be arbitrarily close to $\mathcal{M}$ in Hausdorff distance given a large enough sample. Generally speaking, existing manifold learning algorithms do not meet these criteria. Fefferman, Mitter, and Narayanan (2016) have developed an algorithm whose output is provably a manifold. The key idea is to define an approximate squared-distance function (asdf) to $\mathcal{M}$. Then, $\mathcal{M}_\mathrm{put}$ is given by the set of points where the gradient of the asdf is orthogonal to the subspace spanned by the eigenvectors of the Hessian of the asdf that correspond to its $n - d$ largest eigenvalues. As long as the asdf meets certain regularity conditions, $\mathcal{M}_\mathrm{put}$ is a manifold that is arbitrarily close in Hausdorff distance to $\mathcal{M}$. In this paper, we define two asdfs that can be calculated from the data and show that they meet the required regularity conditions. The first asdf is based on kernel density estimation, and the second is based on estimation of tangent spaces using local principal components analysis.
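To make the gradient/Hessian condition concrete, the following is a minimal sketch in Python (NumPy) on samples from the unit circle ($d = 1$, $n = 2$). It is not the paper's construction: the function `asdf` below is an illustrative stand-in that averages squared distances to tangent lines estimated by local PCA, using Gaussian weights. The names `local_pca_normal_projectors`, `asdf`, `ridge_residual`, the bandwidth `h`, the neighbourhood size `k`, and the finite-difference step `eps` are all assumptions made for this example.

```python
import numpy as np

def local_pca_normal_projectors(samples, d, k):
    """Estimate, at each sample, the projector onto the normal space
    using PCA on its k nearest neighbours (the sample itself included)."""
    n = samples.shape[1]
    projectors = []
    for x in samples:
        dists = np.linalg.norm(samples - x, axis=1)
        nbrs = samples[np.argsort(dists)[:k]]
        centered = nbrs - nbrs.mean(axis=0)
        # Rows of vt are principal directions; the first d estimate the tangent space.
        _, _, vt = np.linalg.svd(centered, full_matrices=False)
        tangent = vt[:d].T
        projectors.append(np.eye(n) - tangent @ tangent.T)
    return np.array(projectors)

def asdf(x, samples, projectors, h):
    """Illustrative asdf (an assumption, not the paper's definition):
    Gaussian-weighted mean of squared distances from x to the estimated
    tangent planes at the samples."""
    diffs = x - samples
    w = np.exp(-np.sum(diffs**2, axis=1) / (2.0 * h**2))
    w /= w.sum()
    normal_parts = np.einsum("ijk,ik->ij", projectors, diffs)
    return float(np.sum(w * np.sum(normal_parts**2, axis=1)))

def ridge_residual(f, x, n_minus_d, eps=1e-4):
    """Norm of the gradient of f projected onto the eigenvectors of the
    Hessian with the n - d largest eigenvalues (central finite differences).
    The putative manifold is where this residual vanishes."""
    n = x.size
    e = np.eye(n)
    grad = np.zeros(n)
    hess = np.zeros((n, n))
    for i in range(n):
        grad[i] = (f(x + eps * e[i]) - f(x - eps * e[i])) / (2.0 * eps)
        for j in range(n):
            hess[i, j] = (
                f(x + eps * e[i] + eps * e[j]) - f(x + eps * e[i] - eps * e[j])
                - f(x - eps * e[i] + eps * e[j]) + f(x - eps * e[i] - eps * e[j])
            ) / (4.0 * eps**2)
    hess = 0.5 * (hess + hess.T)
    _, eigvecs = np.linalg.eigh(hess)   # eigenvalues in ascending order
    top = eigvecs[:, -n_minus_d:]       # eigenvectors of the n - d largest
    return float(np.linalg.norm(top.T @ grad))

# Noiseless samples from the unit circle in R^2.
theta = 2.0 * np.pi * np.arange(200) / 200
samples = np.column_stack([np.cos(theta), np.sin(theta)])
projectors = local_pca_normal_projectors(samples, d=1, k=7)
f = lambda x: asdf(x, samples, projectors, h=0.1)

res_on = ridge_residual(f, np.array([1.0, 0.0]), n_minus_d=1)   # on the circle
res_off = ridge_residual(f, np.array([1.2, 0.0]), n_minus_d=1)  # off the circle
print(res_on, res_off)
```

In this sketch the residual is small but not exactly zero on the circle, because the Gaussian averaging introduces a curvature-dependent bias that shrinks as `h` decreases; it is much larger at the off-circle point. Finite differences are used only to keep the example short; analytic derivatives would be preferable in practice.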
