Mamba Hawkes Process

Jul 07, 2024

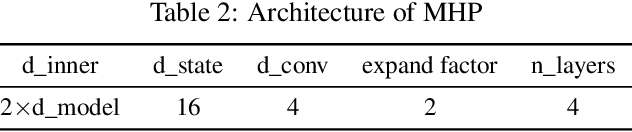

Irregular and asynchronous event sequences are prevalent in many domains, such as social media, finance, and healthcare. Traditional temporal point processes (TPPs), such as Hawkes processes, often struggle to model mutual inhibition and nonlinearity effectively. Recent neural network models, including RNNs and Transformers, address some of these issues, but they still face challenges with long-term dependencies and computational efficiency. In this paper, we introduce the Mamba Hawkes Process (MHP), which leverages the Mamba state space architecture to capture long-range dependencies and dynamic event interactions. Our results show that MHP outperforms existing models across various datasets. Additionally, we propose the Mamba Hawkes Process Extension (MHP-E), which combines Mamba and Transformer models to enhance predictive capabilities. Our contributions are threefold: a novel application of the Mamba architecture to Hawkes processes, a flexible and extensible model structure, and a theoretical analysis of the synergy between state space models and Hawkes processes. Experimental results demonstrate the superior performance of both MHP and MHP-E, advancing the field of temporal point process modeling.
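For context on why classical Hawkes processes struggle with inhibition, the following is a minimal sketch of the textbook univariate Hawkes conditional intensity with an exponential kernel, λ(t) = μ + Σ α·exp(−β(t − t_i)) over past events t_i < t. It is not the MHP model from the paper; the function name `hawkes_intensity`, the parameter values, and the event times are illustrative assumptions. With a non-negative `alpha`, each past event can only raise the intensity, so one event cannot suppress another.

```python
import math

def hawkes_intensity(t, history, mu=0.2, alpha=0.8, beta=1.0):
    """Classical univariate Hawkes intensity with an exponential kernel.

    mu    : baseline rate (illustrative value)
    alpha : jump added by each past event (illustrative value)
    beta  : exponential decay rate of that jump (illustrative value)
    """
    # Only events strictly before t contribute to the intensity at t.
    return mu + sum(alpha * math.exp(-beta * (t - ti)) for ti in history if ti < t)

# Hypothetical event times, chosen only for illustration.
events = [1.0, 1.5, 4.0]

baseline = hawkes_intensity(0.5, events)  # no past events yet, so just mu
after = hawkes_intensity(1.6, events)     # shortly after two events: elevated

print(baseline, after)
```

Because every term in the sum is non-negative, the intensity never falls below `mu`; capturing inhibition needs a nonlinear or learned parameterization, which is the kind of gap neural TPP models aim to fill.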
