Making Attention Mechanisms More Robust and Interpretable with Virtual Adversarial Training for Semi-Supervised Text Classification


Apr 18, 2021

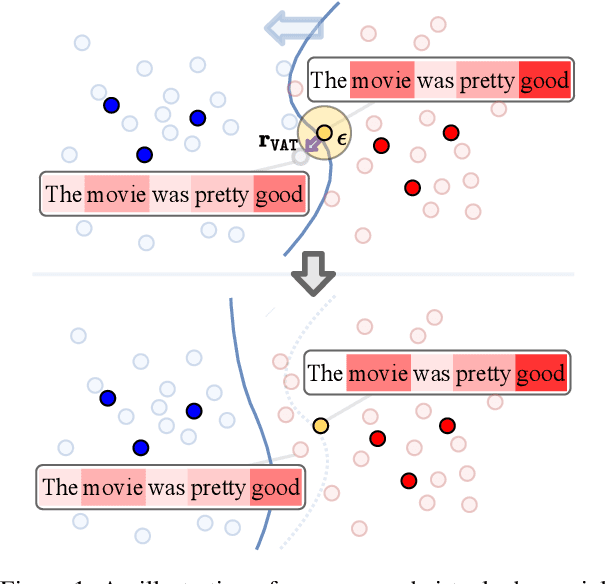

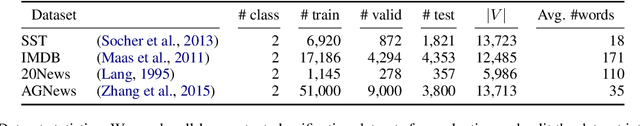

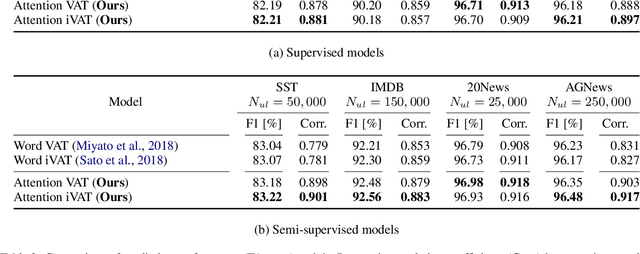

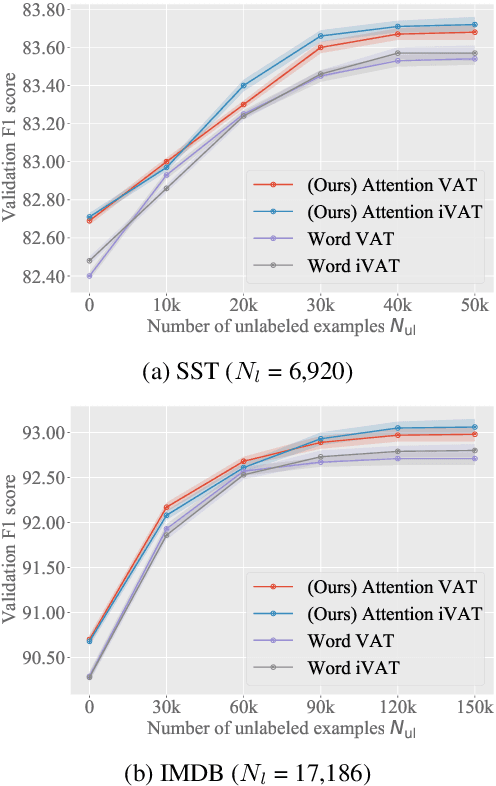

We propose a new general training technique for attention mechanisms based on virtual adversarial training (VAT). Previous studies have reported that attention mechanisms are vulnerable to perturbations; because VAT does not require labels, it can compute adversarial perturbations for attention mechanisms from unlabeled data in a semi-supervised setting. Experiments show that our technique (1) achieves significantly better prediction performance than both conventional adversarial training-based techniques and existing VAT-based techniques in a semi-supervised setting, (2) yields attention that correlates more strongly with word importance and agrees better with evidence provided by humans, and (3) improves further as the amount of unlabeled data increases.
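To make the idea concrete, the following is a minimal sketch of a VAT-style consistency loss applied to attention, not the authors' implementation. It assumes a toy binary classifier over scalar token features, assumes the perturbation is added to the attention scores before the softmax, and uses a finite-difference gradient in place of backpropagation. All names (`predict`, `vat_attention_perturbation`, `vat_attention_loss`) and the parameters `eps`, `xi`, and `h` are hypothetical choices made for illustration.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict(scores, hidden, w):
    """Toy model (an assumption): sigmoid of an attention-weighted sum."""
    attn = softmax(scores)
    context = sum(a * h for a, h in zip(attn, hidden))
    return 1.0 / (1.0 + math.exp(-w * context))


def bernoulli_kl(p, q, tiny=1e-12):
    """KL divergence between two Bernoulli distributions with means p and q."""
    p = min(max(p, tiny), 1.0 - tiny)
    q = min(max(q, tiny), 1.0 - tiny)
    return p * math.log(p / q) + (1.0 - p) * math.log((1.0 - p) / (1.0 - q))


def normalize(v):
    """Scale v to unit L2 norm; a zero vector is returned unchanged."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def vat_attention_perturbation(scores, hidden, w, eps=1.0, xi=0.1, h=1e-4, seed=0):
    """One power-iteration step of VAT on the attention scores.

    No label is used: the target is the model's own clean prediction.
    """
    rng = random.Random(seed)
    p_clean = predict(scores, hidden, w)
    d = normalize([rng.gauss(0.0, 1.0) for _ in scores])

    def divergence(r):
        perturbed = [s + ri for s, ri in zip(scores, r)]
        return bernoulli_kl(p_clean, predict(perturbed, hidden, w))

    # Central finite differences of the divergence around the small probe xi * d.
    base = [xi * di for di in d]
    grad = []
    for i in range(len(scores)):
        plus, minus = list(base), list(base)
        plus[i] += h
        minus[i] -= h
        grad.append((divergence(plus) - divergence(minus)) / (2.0 * h))

    # The adversarial direction is the normalized gradient, scaled to radius eps.
    return [eps * g for g in normalize(grad)]


def vat_attention_loss(scores, hidden, w, eps=1.0):
    """Consistency loss between the clean and adversarially perturbed predictions."""
    r_adv = vat_attention_perturbation(scores, hidden, w, eps=eps)
    p_clean = predict(scores, hidden, w)
    p_adv = predict([s + r for s, r in zip(scores, r_adv)], hidden, w)
    return bernoulli_kl(p_clean, p_adv)


if __name__ == "__main__":
    scores = [0.2, -0.1, 0.5]   # attention scores for three tokens
    hidden = [1.0, -2.0, 0.5]   # scalar token features
    print(vat_attention_loss(scores, hidden, w=1.5))
```

Because the loss depends only on the model's own predictions, it can be evaluated on unlabeled examples and added to a supervised loss on the labeled ones. In a real model the finite-difference loop would be replaced by automatic differentiation.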
