MAGIX: Model Agnostic Globally Interpretable Explanations

Paper and Code

Jun 15, 2018

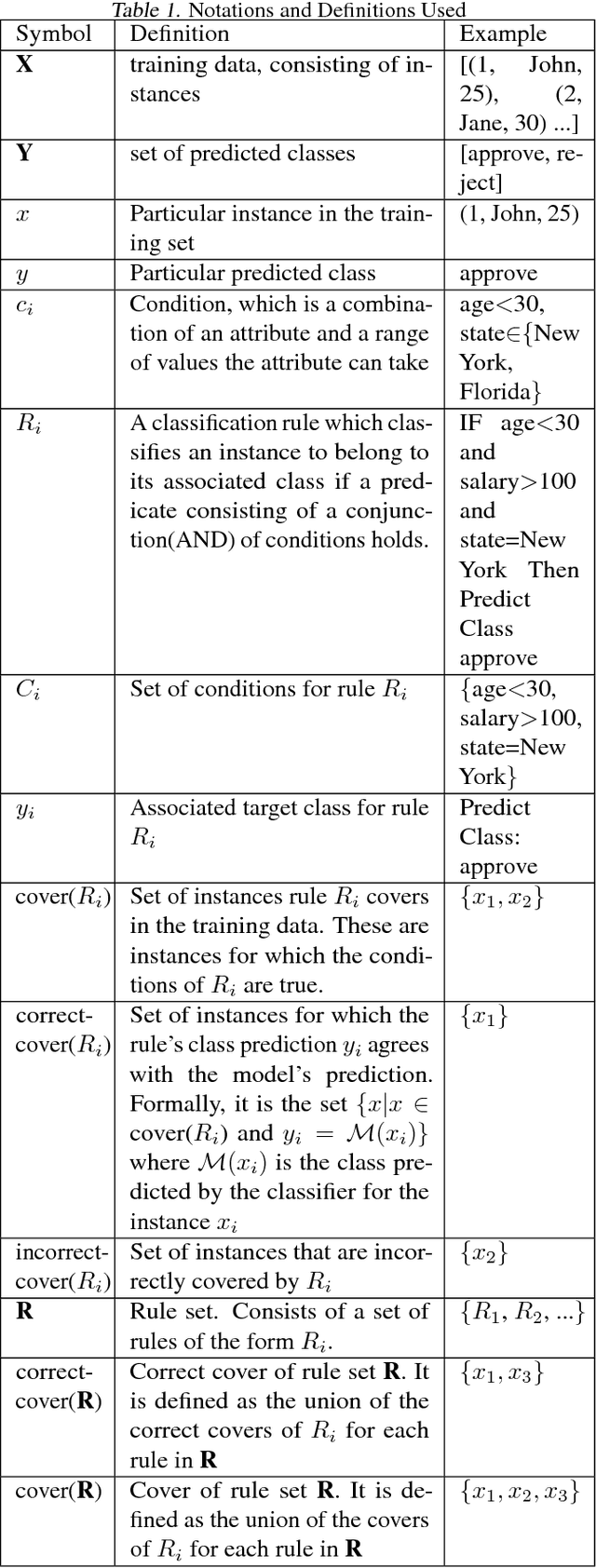

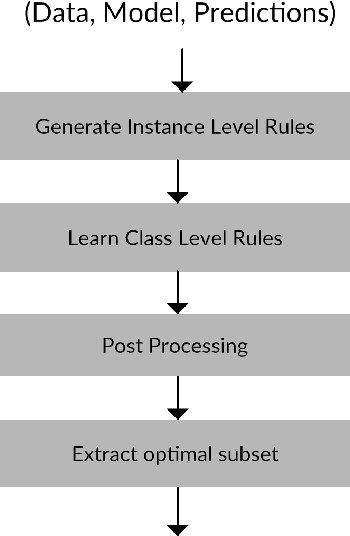

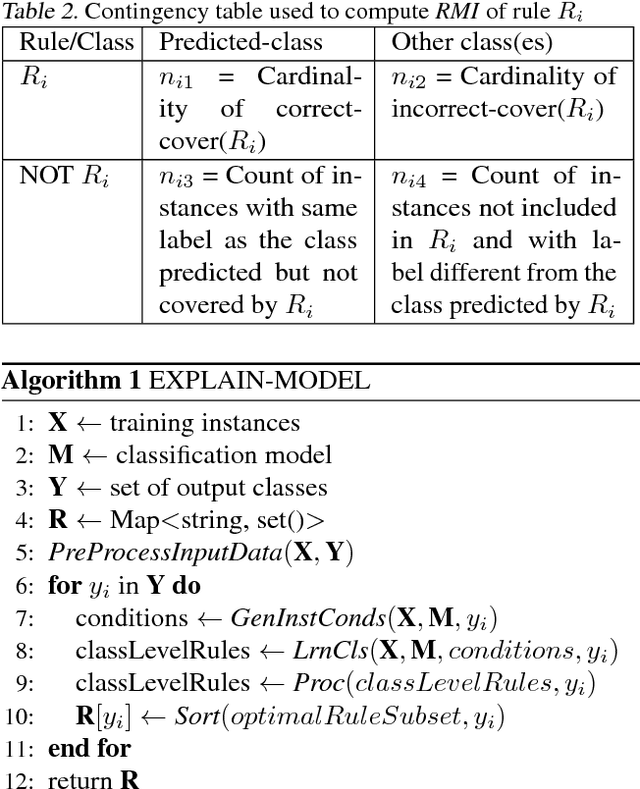

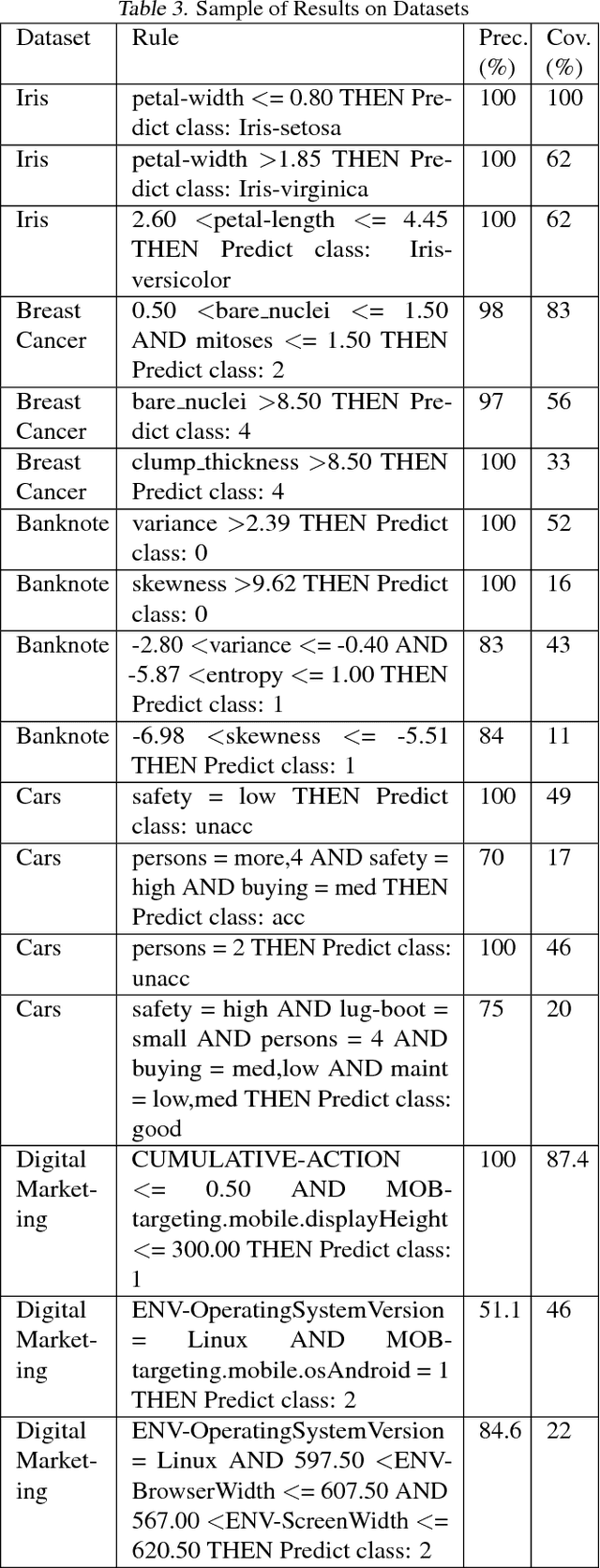

Explaining the behavior of a black box machine learning model at the instance level is useful for building trust. However, it is also important to understand how the model behaves globally. Such an understanding provides insight into both the data on which the model was trained and the patterns that it learned. We present here an approach that learns if-then rules to globally explain the behavior of black box machine learning models that have been used to solve classification problems. The approach works by first extracting conditions that were important at the instance level and then evolving rules through a genetic algorithm with an appropriate fitness function. Collectively, these rules represent the patterns followed by the model for decisioning and are useful for understanding its behavior. We demonstrate the validity and usefulness of the approach by interpreting black box models created using publicly available data sets as well as a private digital marketing data set.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge