MAEVI: Motion Aware Event-Based Video Frame Interpolation


Mar 03, 2023

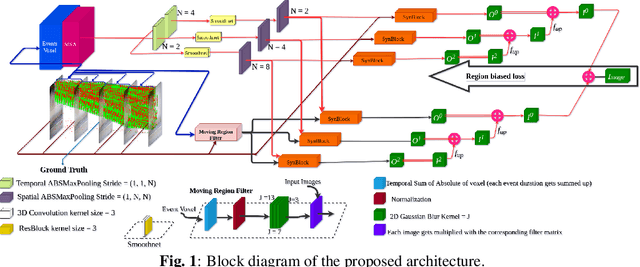

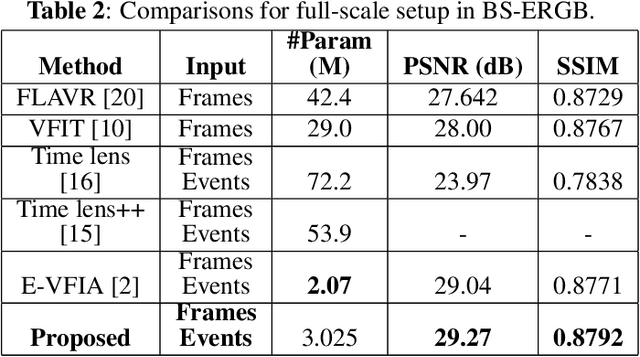

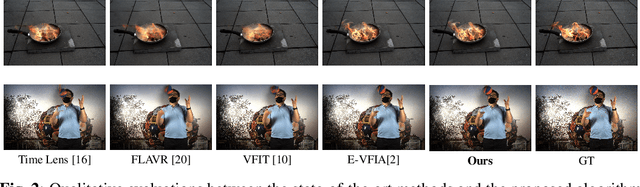

The use of event-based cameras is expected to improve the visual quality of video frame interpolation. We introduce a learning-based method that exploits the boundaries of moving regions in a video sequence to increase overall interpolation quality. Event cameras allow moving areas to be located precisely, so better interpolation quality can be achieved by emphasizing these regions with an appropriate loss function. The results show a notable average PSNR improvement of 1.3 dB on the tested data sets, as well as subjectively more pleasing visual results with less ghosting and fewer blur artifacts.

* Submitted to International Conference on Image Processing (ICIP) 2023
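
The abstract does not spell out the loss function, so the following is only a minimal sketch of the general idea of emphasizing moving regions, not the authors' implementation. It assumes a per-pixel event-count map is available for the interpolation interval, derives a binary motion mask from it, and up-weights the L1 reconstruction error inside that mask. The names `motion_mask`, `motion_weighted_l1`, `threshold`, and `alpha` are hypothetical and chosen for illustration; `psnr` is the standard peak signal-to-noise ratio in dB for images scaled to `[0, max_val]`.

```python
import numpy as np


def motion_mask(event_counts, threshold=1):
    """Binary mask (1.0 = moving) of pixels with at least `threshold` events.

    `event_counts` is an assumed H x W map of accumulated events per pixel;
    the sign (polarity) is ignored.
    """
    return (np.abs(event_counts) >= threshold).astype(np.float32)


def motion_weighted_l1(pred, target, mask, alpha=4.0):
    """Weighted mean absolute error: moving pixels get weight 1 + alpha.

    `alpha` is an illustrative hyperparameter, not a value from the paper.
    Accepts H x W or H x W x C images; `mask` is always H x W.
    """
    weights = 1.0 + alpha * mask
    if pred.ndim == 3:
        weights = weights[..., None]  # share the weight across channels
    weights = np.broadcast_to(weights, pred.shape)
    err = np.abs(pred - target)
    return float((weights * err).sum() / weights.sum())


def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB."""
    mse = np.mean((pred - target) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_val ** 2 / mse))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    target = rng.random((8, 8))
    pred = np.clip(target + 0.05 * rng.standard_normal((8, 8)), 0.0, 1.0)
    events = np.zeros((8, 8))
    events[2:5, 2:5] = 3  # pretend a small region moved
    mask = motion_mask(events)
    print("weighted L1:", motion_weighted_l1(pred, target, mask))
    print("PSNR (dB):", psnr(pred, target))
```

With this weighting, an error of a given size costs more when it falls on a pixel flagged as moving than on a static pixel, which is one simple way to steer training toward the region boundaries the abstract refers to.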
