M-EBM: Towards Understanding the Manifolds of Energy-Based Models

Paper and Code

Mar 08, 2023

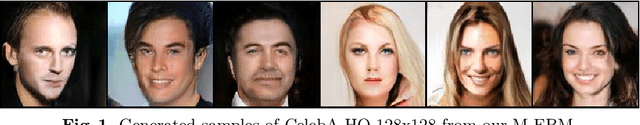

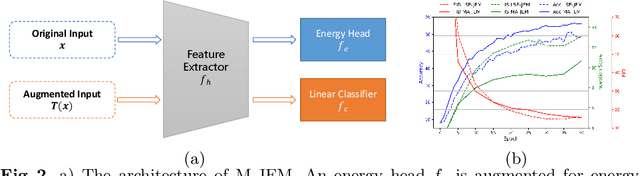

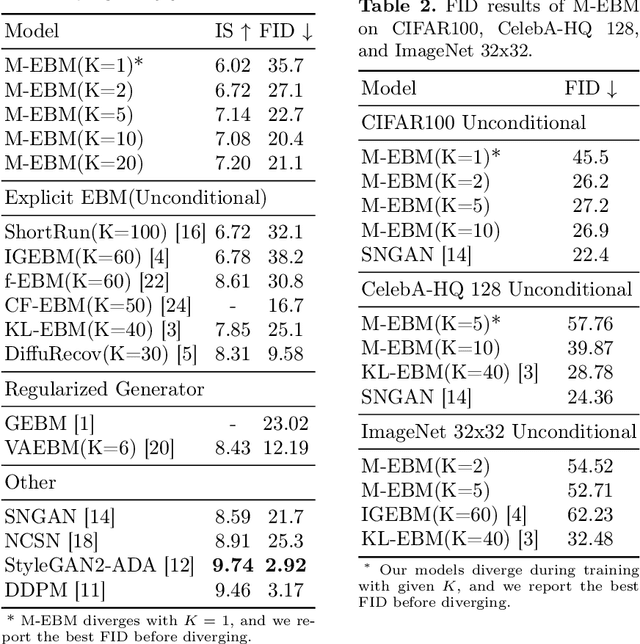

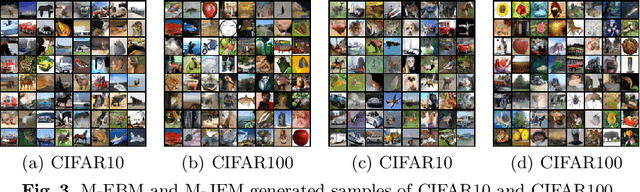

Energy-based models (EBMs) exhibit a variety of desirable properties in predictive tasks, such as generality, simplicity and compositionality. However, training EBMs on high-dimensional datasets remains unstable and expensive. In this paper, we present a Manifold EBM (M-EBM) to boost the overall performance of unconditional EBM and Joint Energy-based Model (JEM). Despite its simplicity, M-EBM significantly improves unconditional EBMs in training stability and speed on a host of benchmark datasets, such as CIFAR10, CIFAR100, CelebA-HQ, and ImageNet 32x32. Once class labels are available, label-incorporated M-EBM (M-JEM) further surpasses M-EBM in image generation quality with an over 40% FID improvement, while enjoying improved accuracy. The code can be found at https://github.com/sndnyang/mebm.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge