Lunar Terrain Relative Navigation Using a Convolutional Neural Network for Visual Crater Detection

Jul 15, 2020

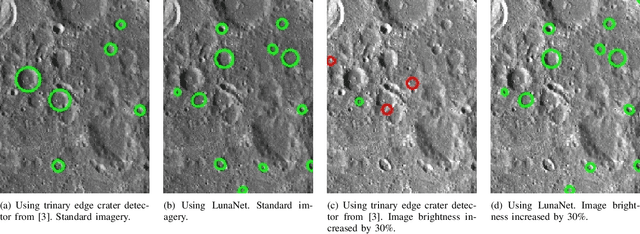

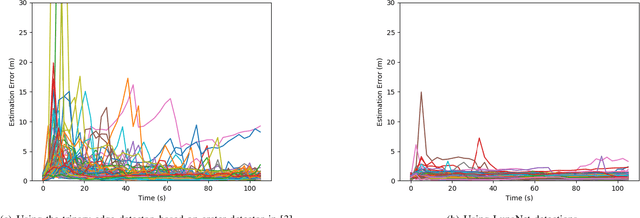

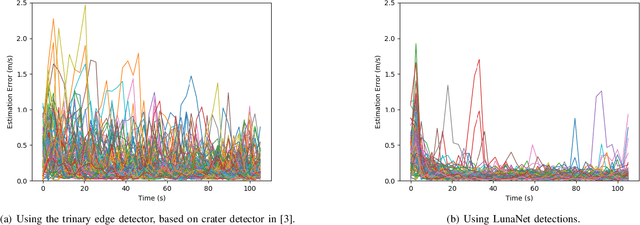

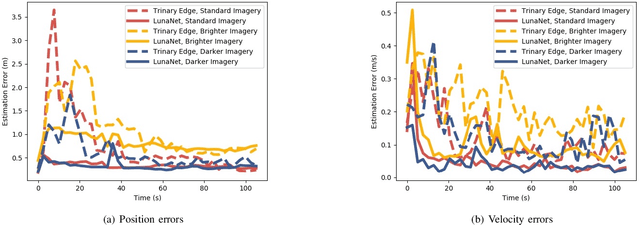

Terrain relative navigation can improve the precision of a spacecraft's position estimate by detecting global features that serve as supplementary measurements, correcting for drift in the inertial navigation system. This paper presents a system that uses a convolutional neural network (CNN) and image processing methods to track the location of a simulated spacecraft with an extended Kalman filter (EKF). The CNN, called LunaNet, visually detects craters in the simulated camera frame, and those detections are matched to known lunar craters in the region of the current estimated spacecraft position. The matched craters are treated as features that the EKF tracks. LunaNet enables more reliable position tracking over a simulated trajectory because it is more robust to changes in image brightness and produces more repeatable crater detections from frame to frame. When tested on trajectories using images of standard brightness, LunaNet combined with an EKF reduces the average final position estimation error by 60% and the average final velocity estimation error by 25%, compared to an EKF using an image processing-based crater detection method.
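
The matching step described above (pairing crater detections with known craters near the estimated position) might look roughly like the sketch below. The abstract does not say how LunaNet's detections are actually matched, so this is only an illustration under stated assumptions: it uses a greedy nearest-neighbour rule with a pixel distance gate, and the function name `match_craters`, the `gate_px` parameter, and the catalog layout are all hypothetical.

```python
import math


def match_craters(detections, catalog, gate_px):
    """Greedily pair detected crater centres with catalog craters.

    Hypothetical helper, not the paper's method.
    detections: list of (x, y) pixel centres found in the camera frame.
    catalog: dict mapping crater id -> (x, y) pixel position predicted by
             projecting the known crater through the current pose estimate.
    gate_px: maximum pixel distance allowed for a valid match.
    Returns a sorted list of (detection_index, crater_id) pairs.
    """
    # Collect every detection/catalog pair that falls inside the gate.
    candidates = []
    for i, (dx, dy) in enumerate(detections):
        for crater_id, (cx, cy) in catalog.items():
            dist = math.hypot(dx - cx, dy - cy)
            if dist <= gate_px:
                candidates.append((dist, i, crater_id))

    # Accept the closest pairs first; each detection and each catalog
    # crater is used at most once.
    candidates.sort()
    used_detections, used_craters, matches = set(), set(), []
    for dist, i, crater_id in candidates:
        if i in used_detections or crater_id in used_craters:
            continue
        used_detections.add(i)
        used_craters.add(crater_id)
        matches.append((i, crater_id))
    return sorted(matches)


# Made-up example values: two detections lie near catalog craters,
# the third has no catalog crater within the gate.
detections = [(100.0, 120.0), (300.5, 80.0), (500.0, 500.0)]
catalog = {"A": (102.0, 118.0), "B": (298.0, 83.0), "C": (50.0, 400.0)}
matches = match_craters(detections, catalog, gate_px=10.0)
```

In a filter of the kind the abstract describes, each matched pair would then supply a measurement residual (detected position minus predicted position) for the EKF update; unmatched detections would simply be ignored for that frame.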
