LSTM-based Online Learning: An Efficient EKF Based Algorithm with a Convergence Guarantee


Nov 09, 2019

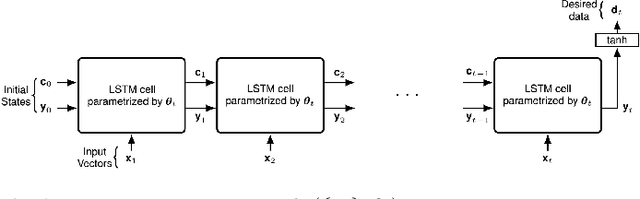

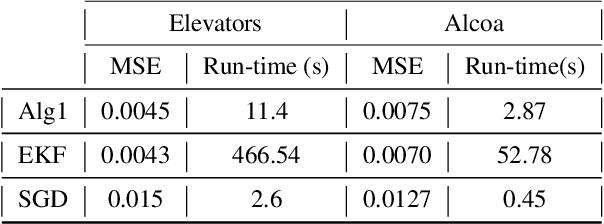

We investigate online nonlinear regression with long short-term memory (LSTM) based networks, which we refer to as LSTM-based online learning. For LSTM-based online learning, we introduce a highly efficient extended Kalman filter (EKF) based training algorithm with a theoretical convergence guarantee. Our algorithm is truly online in that its convergence guarantee requires no assumptions on the underlying data-generating process or on the system dynamics of the learning algorithm. Through an extensive set of simulations, we illustrate the significant performance improvements our algorithm achieves over conventional LSTM training methods. In particular, we show that our algorithm attains error performance very similar to that of the EKF learning algorithm while requiring 10 to 40 times less training time, depending on the parameter size of the network.
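For readers unfamiliar with EKF-based training, the following is a minimal sketch of the standard EKF weight update that the abstract uses as its baseline, not the efficient algorithm the paper proposes. To keep it short, a single tanh unit stands in for the LSTM, the weights are modeled as a random walk, and the names `ekf_step` and `run_demo`, the noise parameters `q` and `r`, and the synthetic data are all assumptions made for illustration.

```python
import numpy as np

def ekf_step(theta, P, x, d, q=1e-4, r=1e-2):
    """One EKF update of the weights theta for the toy model y = tanh(theta . x).

    theta: weight estimate, shape (n,)
    P:     weight error covariance, shape (n, n)
    x, d:  current input vector and desired (observed) output
    q, r:  assumed process and measurement noise variances
    """
    y = np.tanh(theta @ x)                  # prediction with current weights
    H = ((1.0 - y ** 2) * x)[None, :]       # Jacobian dy/dtheta, shape (1, n)
    S = H @ P @ H.T + r                     # innovation variance, shape (1, 1)
    K = P @ H.T / S                         # Kalman gain, shape (n, 1)
    theta = theta + (K * (d - y)).ravel()   # correct weights with the innovation
    P = P - K @ H @ P + q * np.eye(len(theta))  # covariance update plus random-walk noise
    return theta, P, y

def run_demo(steps=2000, seed=0):
    """Feed a synthetic stream one sample at a time and track squared error."""
    rng = np.random.default_rng(seed)
    true_theta = np.array([0.8, -0.5, 0.3])  # hypothetical ground-truth weights
    theta, P = np.zeros(3), np.eye(3)
    errors = []
    for _ in range(steps):
        x = rng.normal(size=3)
        d = np.tanh(true_theta @ x) + 0.01 * rng.normal()
        theta, P, y = ekf_step(theta, P, x, d)
        errors.append((d - y) ** 2)          # error measured before the update
    return theta, np.array(errors)

if __name__ == "__main__":
    theta, errors = run_demo()
    print("estimated weights:", theta)
    print("mean squared error, last 200 steps:", errors[-200:].mean())
```

The covariance `P` is an n-by-n matrix, so each update costs on the order of n squared operations in the number of weights. That cost is what makes plain EKF training slow for large networks and is the overhead the abstract's training-time comparison refers to.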
