Long-term Fairness For Real-time Decision Making: A Constrained Online Optimization Approach

Paper and Code

Jan 04, 2024

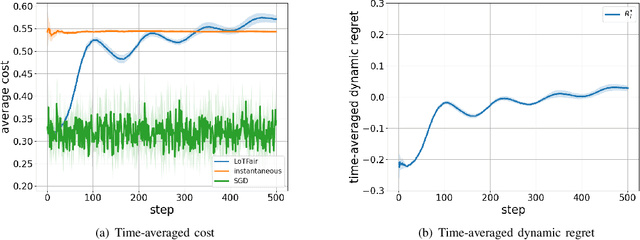

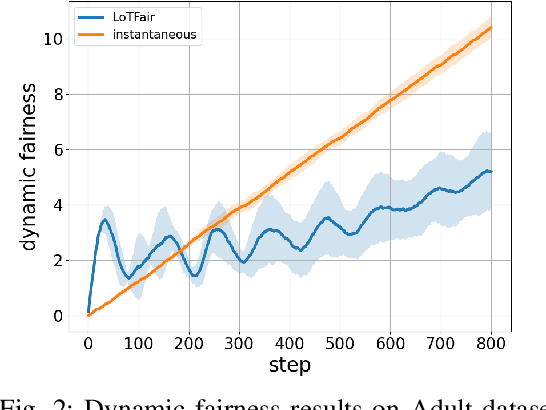

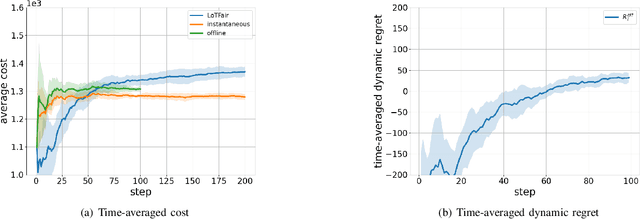

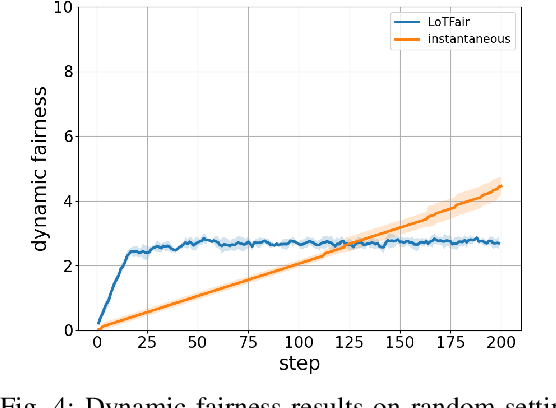

Machine learning (ML) has demonstrated remarkable capabilities across many real-world systems, from predictive modeling to intelligent automation. However, the widespread integration of machine learning also makes it necessary to ensure machine learning-driven decision-making systems do not violate ethical principles and values of society in which they operate. As ML-driven decisions proliferate, particularly in cases involving sensitive attributes such as gender, race, and age, to name a few, the need for equity and impartiality has emerged as a fundamental concern. In situations demanding real-time decision-making, fairness objectives become more nuanced and complex: instantaneous fairness to ensure equity in every time slot, and long-term fairness to ensure fairness over a period of time. There is a growing awareness that real-world systems that operate over long periods and require fairness over different timelines. However, existing approaches mainly address dynamic costs with time-invariant fairness constraints, often disregarding the challenges posed by time-varying fairness constraints. To bridge this gap, this work introduces a framework for ensuring long-term fairness within dynamic decision-making systems characterized by time-varying fairness constraints. We formulate the decision problem with fairness constraints over a period as a constrained online optimization problem. A novel online algorithm, named LoTFair, is presented that solves the problem 'on the fly'. We prove that LoTFair can make overall fairness violations negligible while maintaining the performance over the long run.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge