Long Short-Term Memory Spiking Networks and Their Applications


Jul 09, 2020

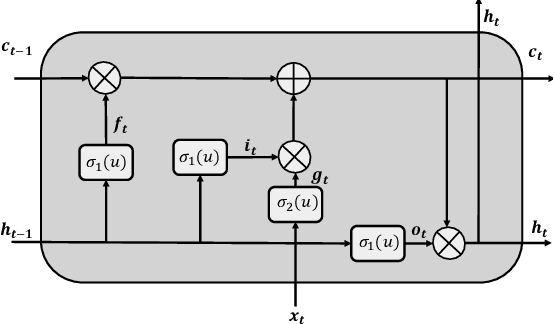

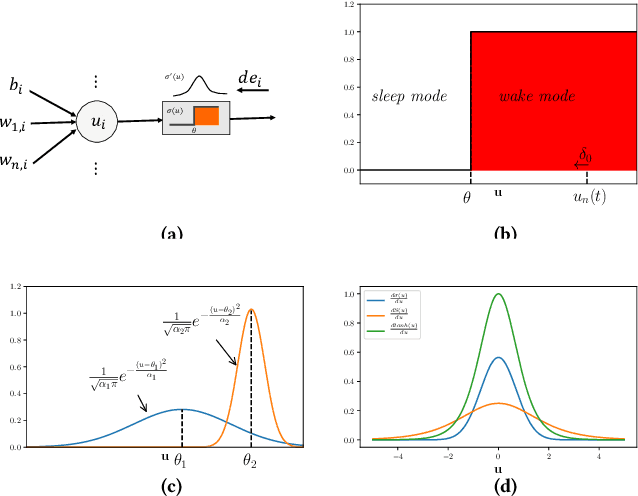

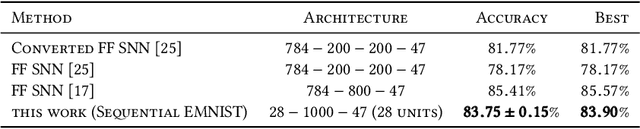

Recent advances in event-based neuromorphic systems have generated significant interest in the use and development of spiking neural networks (SNNs). However, the non-differentiable nature of spiking neurons makes SNNs incompatible with conventional backpropagation techniques. Despite the significant progress made in training conventional deep neural networks (DNNs), training methods for SNNs remain relatively poorly understood. In this paper, we present a novel framework for training recurrent SNNs. Motivated by the benefits that recurrent neural networks (RNNs) bring to learning time series models within DNNs, we develop SNNs based on long short-term memory (LSTM) networks. We show that LSTM spiking networks learn the timing of the spikes and temporal dependencies. We also develop a methodology for error backpropagation within LSTM-based SNNs. The developed architecture and backpropagation method enable LSTM-based SNNs to learn long-term dependencies with results comparable to those of conventional LSTMs.
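To illustrate the non-differentiability issue mentioned in the abstract, here is a minimal NumPy sketch. It is not the method developed in the paper, which the abstract does not detail. It shows a generic surrogate-gradient workaround that is commonly used for spiking neurons: the forward pass uses a hard threshold, whose derivative is zero almost everywhere, while the backward pass substitutes a smooth stand-in. The function names `spike` and `surrogate_grad`, and the `threshold` and `slope` parameters, are hypothetical and chosen only for this example.

```python
import numpy as np

def spike(v, threshold=1.0):
    """Forward pass: emit 1.0 wherever the membrane potential reaches the threshold.

    This step function has zero derivative almost everywhere, which is why
    conventional backpropagation cannot be applied to it directly.
    """
    return (v >= threshold).astype(np.float64)

def surrogate_grad(v, threshold=1.0, slope=5.0):
    """Backward-pass stand-in (an assumption for illustration, not the paper's method).

    Uses the shape of a fast-sigmoid derivative, up to a constant factor:
    it peaks at the threshold and decays as v moves away from it.
    """
    return 1.0 / (1.0 + slope * np.abs(v - threshold)) ** 2

# Hypothetical membrane potentials for four neurons.
v = np.array([0.2, 0.99, 1.0, 1.5])
s = spike(v)           # binary spikes used in the forward pass
g = surrogate_grad(v)  # smooth pseudo-derivative used in place of ds/dv
print(s)
print(g)
```

In a training loop, `g` would multiply the upstream error signal wherever the true derivative of `spike` would otherwise block it; neurons whose potential is close to the threshold receive the largest updates.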
