Locally Private $k$-Means Clustering with Constant Multiplicative Approximation and Near-Optimal Additive Error

Paper and Code

May 31, 2021

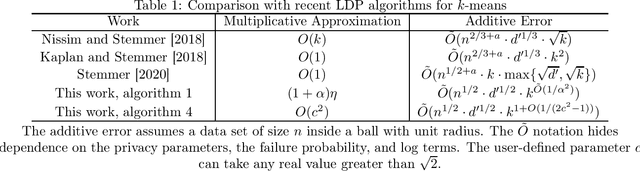

Given a data set of size $n$ in $d'$-dimensional Euclidean space, the $k$-means problem asks for a set of $k$ points (called centers) so that the sum of the $\ell_2^2$-distances between points of a given data set of size $n$ and the set of $k$ centers is minimized. Recent work on this problem in the locally private setting achieves constant multiplicative approximation with additive error $\tilde{O} (n^{1/2 + a} \cdot k \cdot \max \{\sqrt{d}, \sqrt{k} \})$ and proves a lower bound of $\Omega(\sqrt{n})$ on the additive error for any solution with a constant number of rounds. In this work we bridge the gap between the exponents of $n$ in the upper and lower bounds on the additive error with two new algorithms. Given any $\alpha>0$, our first algorithm achieves a multiplicative approximation guarantee which is at most a $(1+\alpha)$ factor greater than that of any non-private $k$-means clustering algorithm with $k^{\tilde{O}(1/\alpha^2)} \sqrt{d' n} \mbox{poly}\log n$ additive error. Given any $c>\sqrt{2}$, our second algorithm achieves $O(k^{1 + \tilde{O}(1/(2c^2-1))} \sqrt{d' n} \mbox{poly} \log n)$ additive error with constant multiplicative approximation. Both algorithms go beyond the $\Omega(n^{1/2 + a})$ factor that occurs in the additive error for arbitrarily small parameters $a$ in previous work, and the second algorithm in particular shows for the first time that it is possible to solve the locally private $k$-means problem in a constant number of rounds with constant factor multiplicative approximation and polynomial dependence on $k$ in the additive error arbitrarily close to linear.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge